Since summer 2023, website owners have been able to block OpenAI’s web crawler, GPTBot, from accessing their content and using it to train the AI models behind ChatGPT (available through OpenAI’s website, Microsoft’s Bing chat, and various other Microsoft products).

This article explores the methods and implications of blocking GPTBot and other crawlers from your website.

Benefits of Blocking Crawlers

Preventing AI crawlers from accessing your website ensures your text and images won’t contribute to future training datasets for AI models like ChatGPT. This can be particularly beneficial for protecting proprietary information, unique content, or content you simply don’t wish to share with AI training models.

However, it’s crucial to understand that blocking GPTBot won’t retroactively remove your content from ChatGPT’s existing knowledge base. Additionally, other AI crawlers may not yet respect these directives, as OpenAI is currently the primary proponent of this blocking mechanism.

Robots.txt file example

Using Robots.txt to Block GPTBot

The standard method for controlling crawler access is through a robots.txt file. This simple text file, placed in the root directory of your website, instructs crawlers which parts of your site they’re allowed to access.

To block GPTBot entirely, add the following lines to your robots.txt file:

User-agent: GPTBot
Disallow: /

This denies GPTBot access to all pages on your site. You can also grant access to specific folders while restricting others:

User-agent: GPTBot
Allow: /folder-1/
Disallow: /folder-2/

Replace “folder-1” and “folder-2” with the actual folder names on your server. To block all crawlers, use this configuration:

User-agent: *
Disallow: /

More information on robots.txt can be found on OpenAI’s website and Google’s developer documentation.
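Before uploading a robots.txt file, it can help to check locally that the rules behave as intended. The following is a minimal sketch using Python’s standard urllib.robotparser module. The helper name allowed, the constant names, and the example.com URLs are illustrative assumptions, not part of any crawler’s tooling. Python’s parser applies rules in file order, which may differ from how a given crawler resolves overlapping Allow and Disallow lines.

```python
from urllib.robotparser import RobotFileParser


def allowed(rules: str, user_agent: str, url: str) -> bool:
    """Return True if `user_agent` may fetch `url` under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(rules.splitlines())  # parse from a string instead of fetching a URL
    return parser.can_fetch(user_agent, url)


# The three configurations discussed in this article.
BLOCK_GPTBOT = """\
User-agent: GPTBot
Disallow: /
"""

PARTIAL_ACCESS = """\
User-agent: GPTBot
Allow: /folder-1/
Disallow: /folder-2/
"""

BLOCK_ALL = """\
User-agent: *
Disallow: /
"""

if __name__ == "__main__":
    # example.com and the paths below are placeholders.
    print(allowed(BLOCK_GPTBOT, "GPTBot", "https://example.com/any-page"))
    print(allowed(BLOCK_GPTBOT, "Googlebot", "https://example.com/any-page"))
    print(allowed(PARTIAL_ACCESS, "GPTBot", "https://example.com/folder-1/page.html"))
    print(allowed(PARTIAL_ACCESS, "GPTBot", "https://example.com/folder-2/page.html"))
    print(allowed(BLOCK_ALL, "AnyBot", "https://example.com/any-page"))
```

A check like this only confirms what the file says. It does not confirm that a crawler will obey it.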

Important Considerations for Robots.txt

While generally respected, robots.txt isn’t foolproof. Technically, determined crawlers can ignore these directives and still access your content. It relies on the ethical practices of the crawler operators.

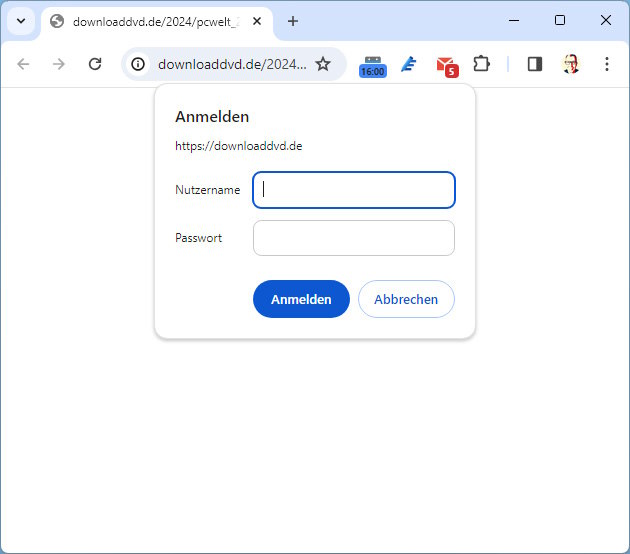

Password Protection: A More Secure Approach

For highly sensitive content, password protection offers a stronger defense against both AI and other crawlers. This restricts access to authorized users only. The downside is that this content becomes inaccessible to the public.

On Apache web servers, password protection typically involves .htpasswd and .htaccess files. The .htpasswd file stores usernames and hashed passwords, while the .htaccess file specifies which directories or files require password authentication and where the .htpasswd file is located on the server. The Apache HTTP Server documentation covers the configuration of both files in detail.
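To make the two files more concrete, here is a rough sketch in Python that produces the text each file would contain. It assumes an Apache server with basic authentication enabled. The function names, the realm label, and the file path are hypothetical. The {SHA} password format shown is a legacy Apache scheme that is easy to generate with the standard library but unsalted and weak; in practice, Apache’s own htpasswd utility with a stronger scheme such as bcrypt is the safer choice.

```python
import base64
import hashlib


def htpasswd_sha1_line(username: str, password: str) -> str:
    """Return one .htpasswd line in Apache's legacy {SHA} format (illustration only)."""
    digest = hashlib.sha1(password.encode("utf-8")).digest()
    encoded = base64.b64encode(digest).decode("ascii")
    return f"{username}:{{SHA}}{encoded}"


def htaccess_text(htpasswd_path: str, realm: str = "Restricted area") -> str:
    """Return .htaccess directives requiring a valid user from the password file."""
    lines = [
        "AuthType Basic",                  # use HTTP basic authentication
        f'AuthName "{realm}"',             # label shown in the browser's login prompt
        f"AuthUserFile {htpasswd_path}",   # absolute server path to the .htpasswd file
        "Require valid-user",              # any user listed in the file may log in
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # The username, password, and path are placeholders.
    print(htpasswd_sha1_line("alice", "change-me"))
    print(htaccess_text("/home/example/.htpasswd"))
```

The .htpasswd file is best kept outside the publicly served directory so it cannot be downloaded directly.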

Conclusion

Protecting your website content from unwanted crawling is an important consideration in today’s digital landscape. While robots.txt provides a convenient method for managing GPTBot access, password protection offers a more robust solution for securing sensitive information. Choosing the right approach depends on your specific needs and the level of security required.