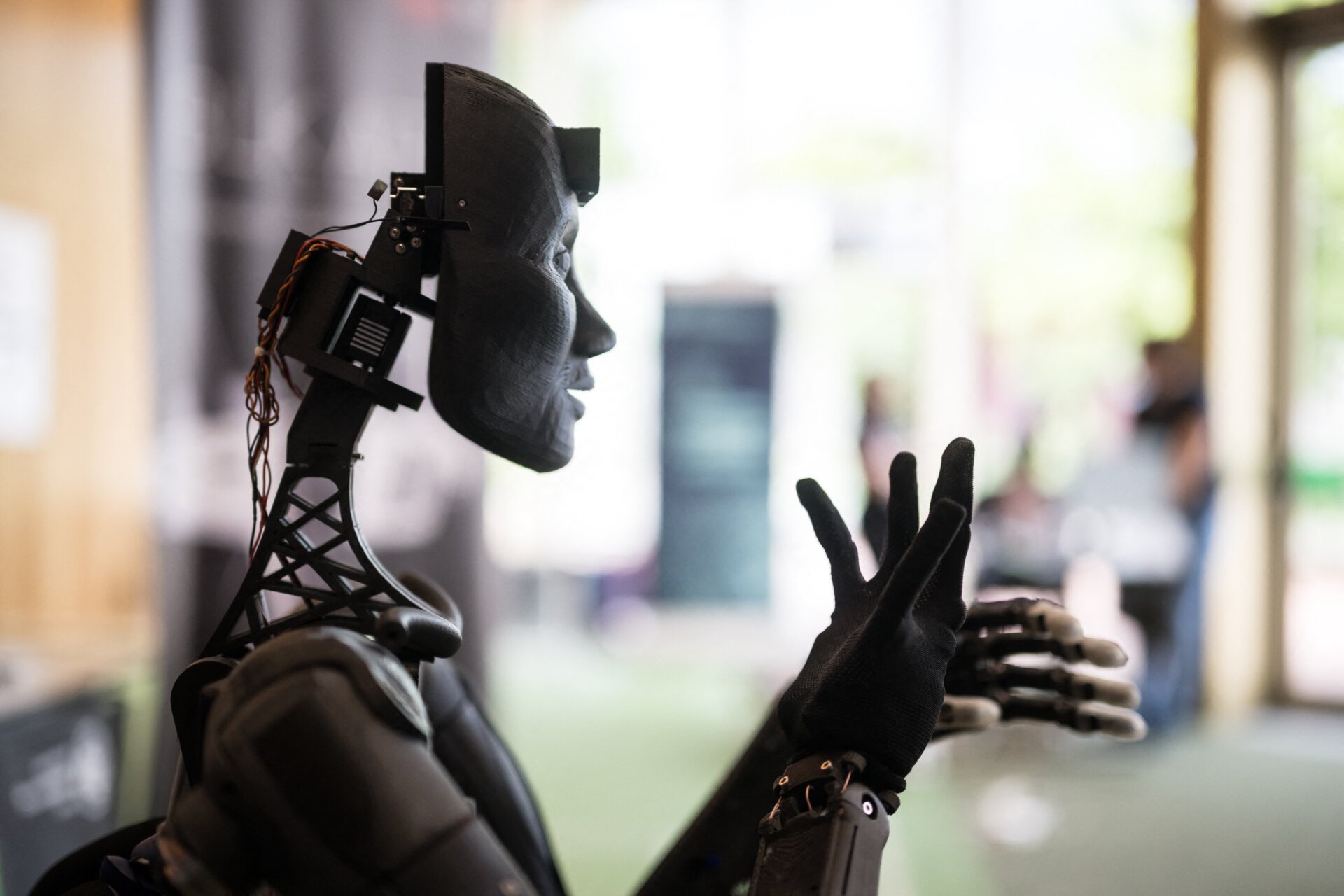

Artificial intelligence seems to enhance our lives, personalizing our social media feeds and curating our music playlists. Behind this veneer of convenience, however, lurks a growing threat: algorithmic harms. These harms are rarely obvious at first; they accumulate quietly as AI systems make decisions about our lives, often without our awareness. This hidden power poses a significant risk to privacy, equality, autonomy, and safety.

AI systems permeate nearly every aspect of modern life, from suggesting movies on Netflix to influencing hiring decisions and even judicial sentencing. But what happens when these seemingly neutral systems create disadvantages for certain groups or cause real-world harm? The often-overlooked consequences of AI applications demand regulatory frameworks that can keep pace with this rapidly evolving technology. This article explores a legal framework designed to address these challenges.

The Slow Burn of Algorithmic Harm

Algorithmic harms are insidious: their cumulative impact often goes unnoticed. These systems typically don't violate privacy or autonomy in ways that are easy to perceive. Instead, they amass vast amounts of data, often without consent, and use it to shape decisions that affect our lives. Sometimes the result is only a minor annoyance, such as targeted advertising. But when AI operates unchecked, these small, repeated harms can compound into significant cumulative damage across many groups.

Social media algorithms, designed to foster social interaction, exemplify this issue. Behind the scenes, they track user activity, compiling profiles of political beliefs, professional affiliations, and personal lives. This data fuels systems that make consequential decisions, from identifying jaywalkers to influencing job applications and assessing suicide risk. Furthermore, the addictive design of these platforms can trap teenagers in cycles of overuse, exacerbating mental health issues like anxiety, depression, and self-harm. By the time the full extent of the harm is realized, it’s often too late – privacy has been compromised, opportunities limited by biased algorithms, and the safety of vulnerable individuals undermined, all without their knowledge. This represents what can be termed “intangible, cumulative harm”: AI systems operating in the background with devastating, yet invisible, consequences.

Researcher Kumba Sennaar describes how AI systems perpetuate and exacerbate biases.

Why Regulation Lags Behind

Despite the growing dangers, legal frameworks worldwide struggle to keep pace with AI advancements. In the United States, a regulatory approach that prioritizes innovation makes it difficult to impose strict standards on how AI is used across different contexts. Courts and regulators, accustomed to addressing concrete harms such as physical injury or financial loss, find it hard to grapple with the subtle, cumulative, and often undetectable nature of algorithmic harms. Existing regulations often fail to address the broader, long-term impacts of AI systems. Social media algorithms, for instance, can gradually erode users' mental health, but because this harm develops slowly, it is difficult to address within current legal frameworks.

Four Key Areas of Algorithmic Harm

Algorithmic harms can be categorized into four legal domains: privacy, autonomy, equality, and safety. Each area is susceptible to the subtle yet often unchecked influence of AI systems.

-

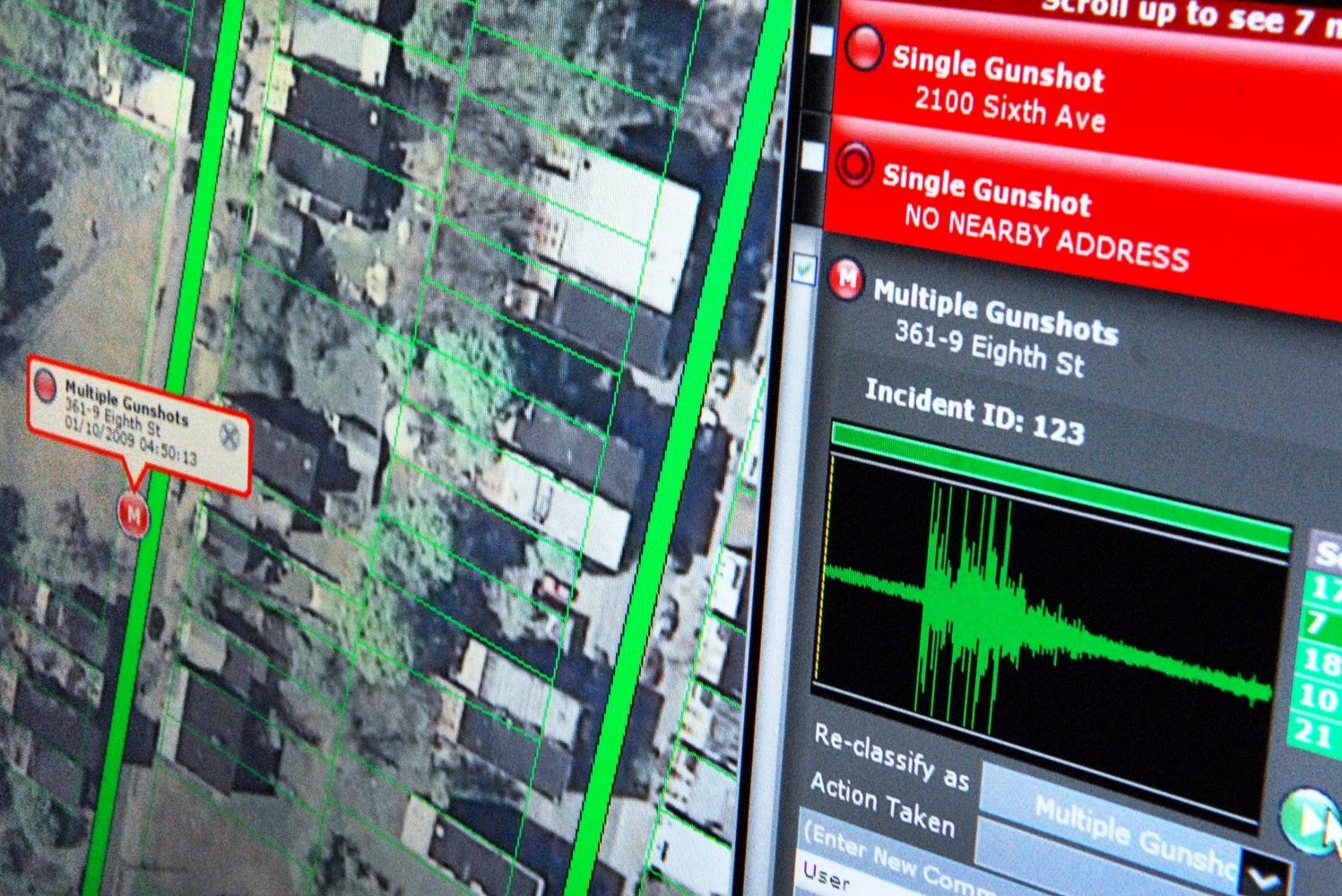

Privacy Erosion: AI systems collect, process, and share vast amounts of data, compromising privacy in ways that may not be immediately obvious but have significant long-term implications. Facial recognition systems, for example, can track individuals in public and private spaces, normalizing mass surveillance.

-

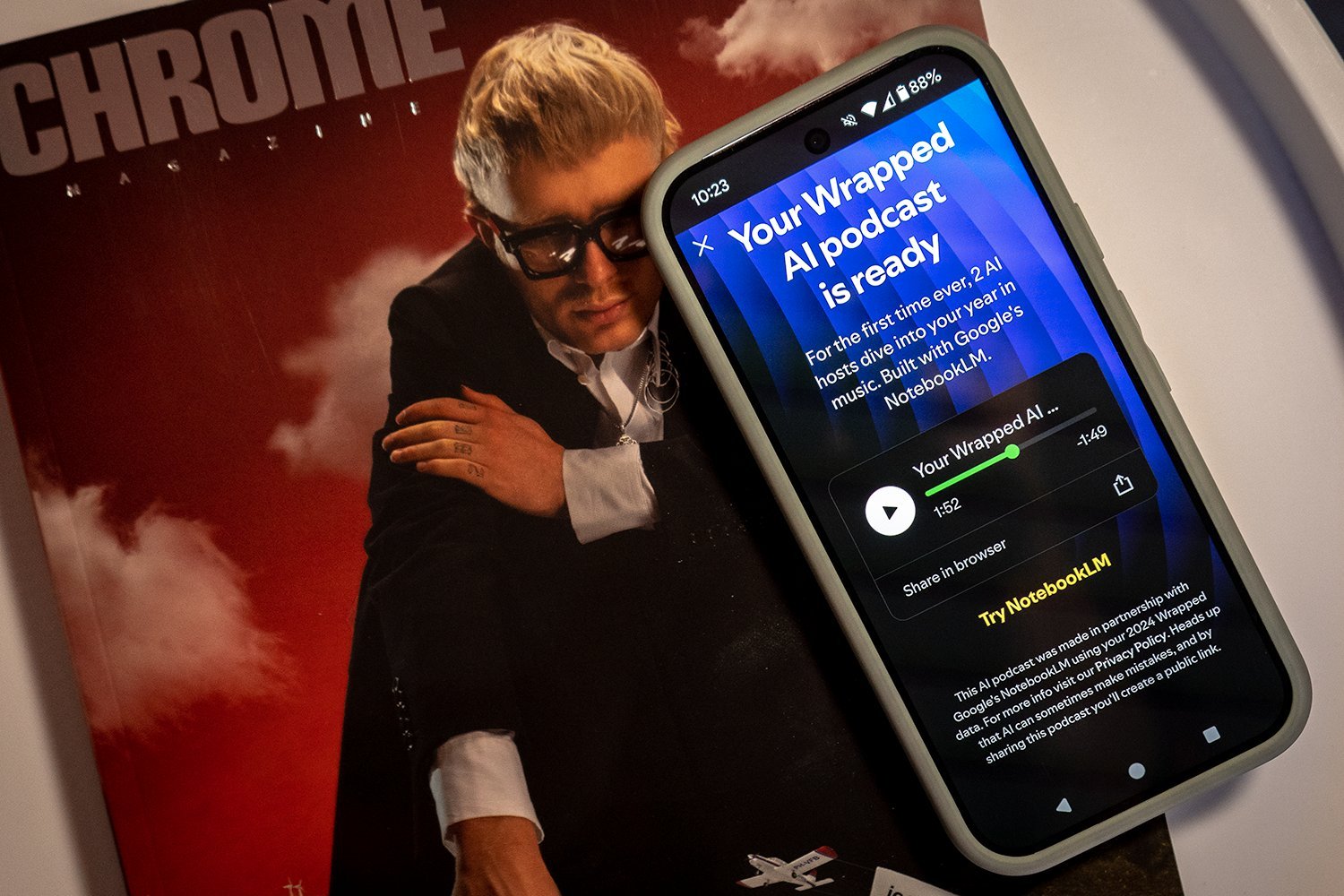

Undermining Autonomy: AI systems can subtly manipulate the information we see, undermining our ability to make autonomous decisions. Social media platforms utilize algorithms to maximize third-party interests, subtly shaping opinions, decisions, and behaviors across millions of users.

- Diminishing Equality: Despite being designed for neutrality, AI systems often inherit biases present in their data and algorithms, reinforcing societal inequalities. One example is a facial recognition system used in retail stores that disproportionately misidentified women and people of color.

- Impairing Safety: AI systems make decisions that affect safety and well-being, and system failures can have catastrophic consequences. Even when functioning as intended, AI systems can cause harm, such as the cumulative effects of social media algorithms on teenagers' mental health.

These cumulative harms often stem from AI applications protected by trade secret laws, making it difficult for victims to detect the harm or trace it to its source. This lack of transparency creates an accountability gap: when a biased hiring decision or a wrongful arrest results from an algorithm, the people affected often never learn that an algorithm was involved. Without that transparency, holding companies accountable becomes nearly impossible.

Bridging the Accountability Gap

Categorizing algorithmic harms helps define the legal boundaries of AI regulation and suggests potential legal reforms to address the accountability gap. Several changes could prove beneficial:

- Mandatory Algorithmic Impact Assessments: Companies should be required to document and address the immediate and cumulative harms of AI applications to privacy, autonomy, equality, and safety, both before and after deployment. For example, firms using facial recognition systems would need to evaluate the system's impact throughout its lifecycle.

- Stronger Individual Rights: Individuals should have the right to opt out of harmful AI practices, and certain AI applications should require explicit opt-in consent. For instance, data processing by facial recognition systems could require an opt-in regime, with users retaining the right to opt out at any time.

- Mandatory Disclosure: Companies should be required to disclose the use of AI technology and its potential harms. This might involve notifying customers about the use of facial recognition systems and the anticipated harms across the four domains outlined above.

As AI becomes increasingly integrated into critical societal functions – from healthcare to education and employment – the need to regulate its potential harms becomes more urgent. Without intervention, these invisible harms will likely continue to accumulate, impacting nearly everyone and disproportionately affecting the most vulnerable.

Conclusion: Prioritizing Civil Rights in the Age of AI

As generative AI amplifies existing AI harms, policymakers, courts, technology developers, and civil society must recognize the legal implications of AI. This requires not only better laws but also a more thoughtful approach to AI development, one that prioritizes civil rights and justice in the face of rapid technological advancement. The future of AI holds immense promise, but without appropriate legal frameworks, it also risks entrenching inequality and eroding the very civil rights it is often designed to enhance.

Sylvia Lu, Faculty Fellow and Visiting Assistant Professor of Law, University of Michigan

This article is republished from The Conversation under a Creative Commons license.