Running a large language model (LLM), the technology behind AI chatbots, locally on your own computer gives you privacy that cloud services can't match: you can ask anything without worrying about your conversations being collected. Setting up a local LLM can be daunting, but GPT4All simplifies the process enough that almost anyone can do it. This guide walks you step by step through installing and using GPT4All.

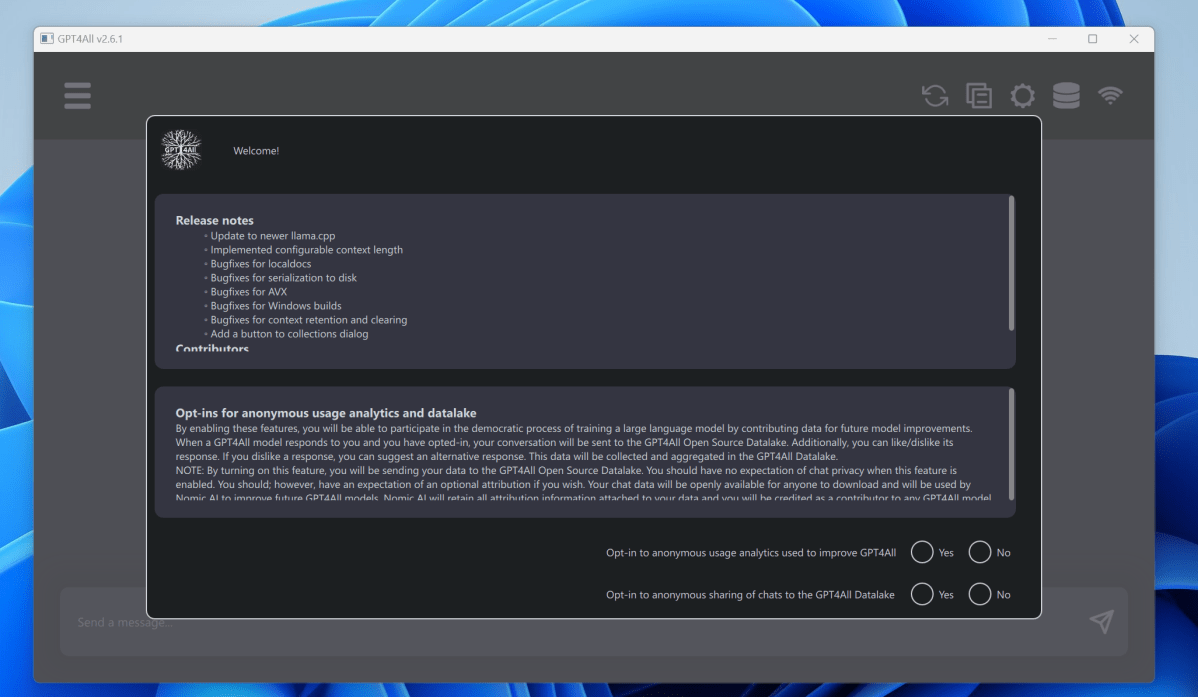

GPT4All stands out for combining a user-friendly interface with flexibility. It isn't as feature-rich as some more complex frameworks, but you can have it running within minutes and a few clicks. Because it runs on either a CPU or a GPU, you don't need cutting-edge hardware to use it.

GPT4All’s intuitive interface makes private AI accessible. Mark Hachman / IDG

Why a Local LLM?

A local LLM lets you hold private conversations with an AI chatbot. Think of it as a supercharged search engine (“Explain black holes to a 5-year-old”) or a rough diagnostic tool (“I have a painful bite and a fever”). It can even help you make sense of complex legal or medical documents, giving you some context before you seek professional advice. Importantly, it’s your LLM, free from the limitations and potential privacy concerns of cloud-based alternatives like Microsoft Copilot, ChatGPT, or Google Bard. GPT4All also emphasizes safe and responsible use, which makes it a secure starting point for exploring the world of LLMs.

Installing GPT4All

Online downloads always warrant caution, but GPT4All, developed by Nomic AI, is open source and hosted on GitHub, where the community can scrutinize its code. That transparency offers some reassurance about its security.

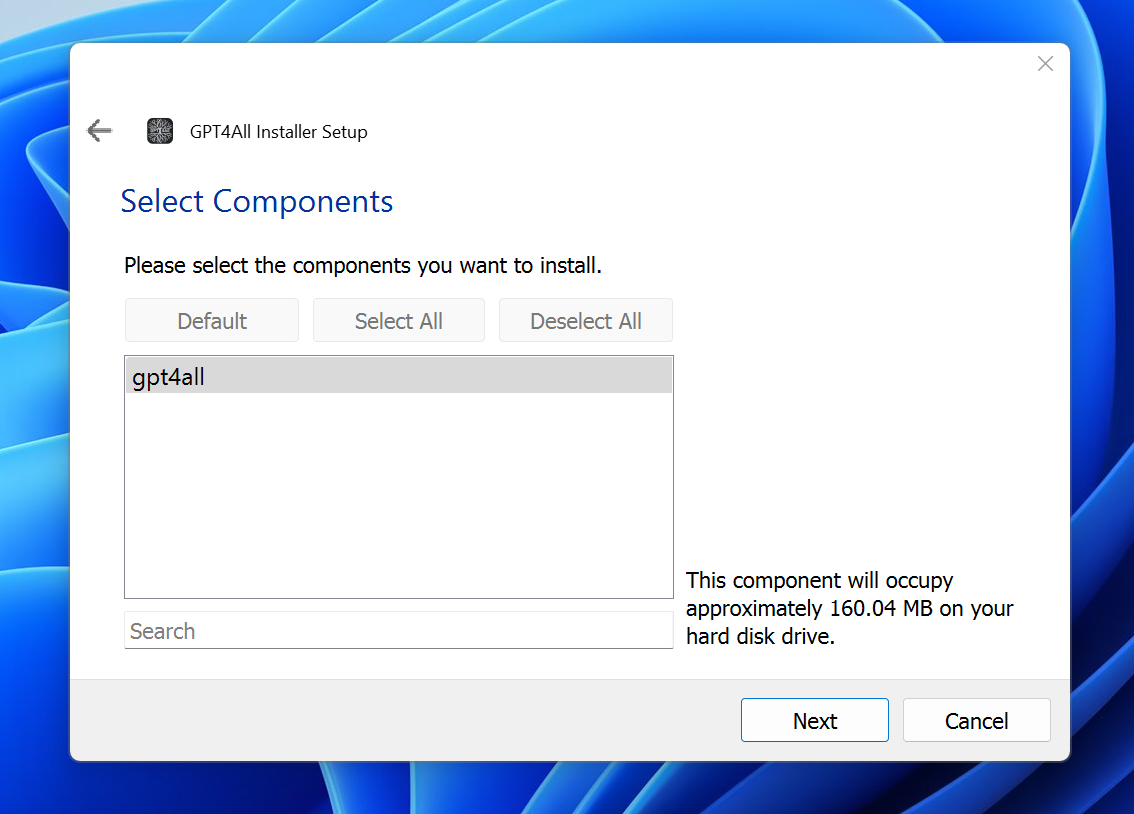

GPT4All. Mark Hachman / IDG

The installation process is straightforward. The small installer (around 27MB on Windows) downloads the necessary files to the directory you choose. The application itself takes up about 185MB of storage, not counting the models you download afterward, and installs quickly.

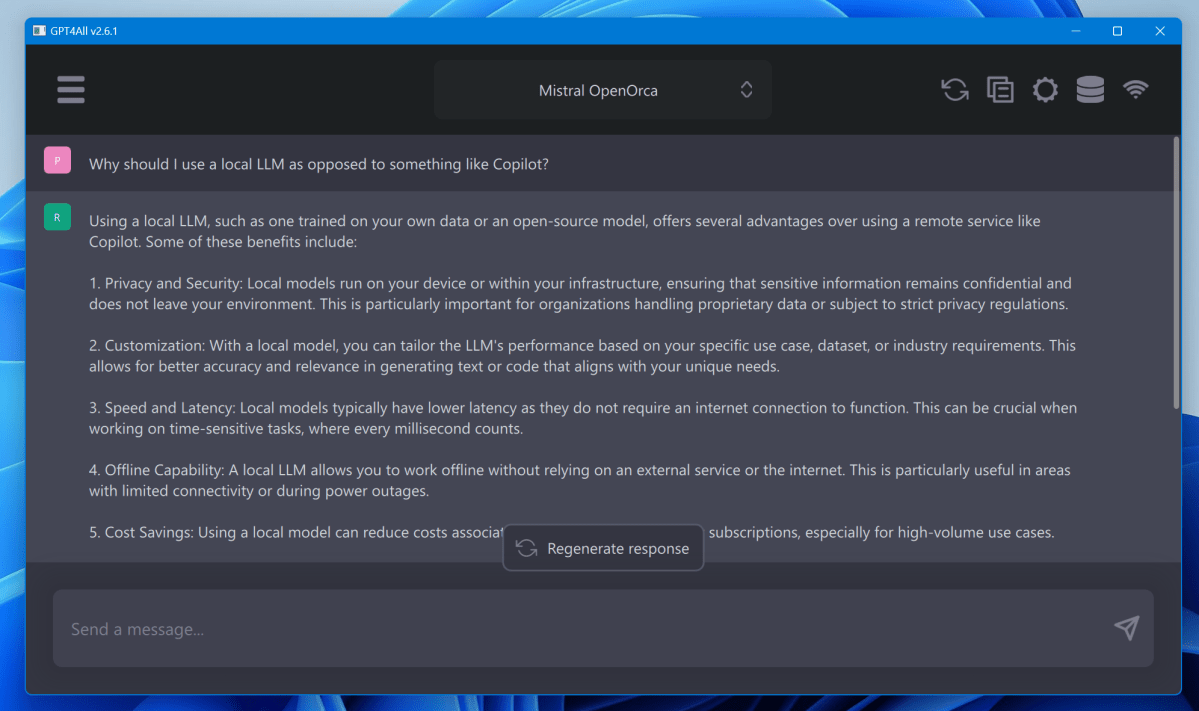

GPT4All LLM AI chatbot. Mark Hachman / IDG

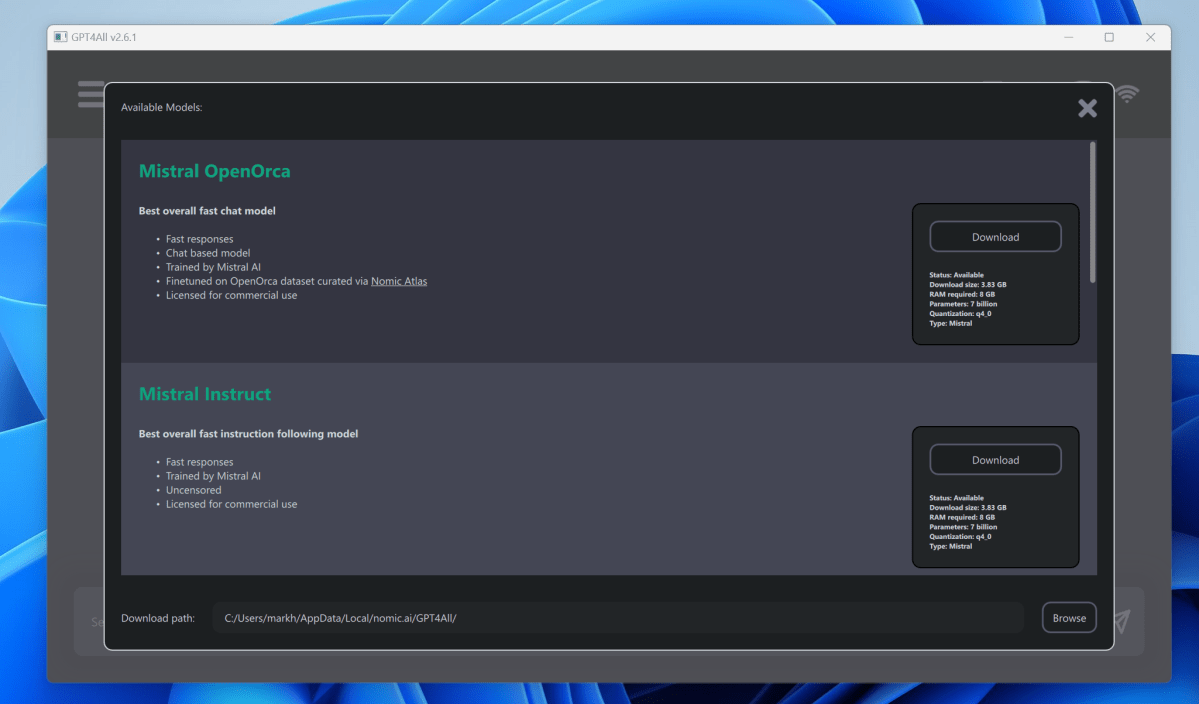

After installation, GPT4All asks whether you want to contribute anonymized usage data to Nomic AI; this is optional. The crucial step is choosing which conversational models to download. The models vary in complexity and resource requirements, and each is listed with its number of parameters, the RAM it needs, and the storage space it occupies.

Choosing a model in GPT4All. Mark Hachman / IDG
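
The parameter counts and RAM figures in the model list are linked by simple arithmetic: a model’s weights take up roughly (number of parameters × bits per weight ÷ 8) bytes. The Python sketch below applies that rule of thumb. It is an approximation, not how GPT4All computes its listed figures; the 7-billion-parameter example and the 1.5× headroom factor in the hypothetical `fits_in_ram` helper are illustrative assumptions, and real memory use is higher because of the conversation context and runtime overhead.

```python
# Rule-of-thumb estimate of how much memory a model's weights need.
# Assumption: the weights dominate memory use, so size ~= parameters * bits per weight / 8 bytes.
# Real usage is higher (conversation context, runtime overhead); treat results as a lower bound.


def weights_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


def fits_in_ram(num_params: float, bits_per_weight: float, ram_gb: float,
                headroom: float = 1.5) -> bool:
    """Hypothetical check: require `headroom` times the weight size to be available as RAM."""
    return weights_gb(num_params, bits_per_weight) * headroom <= ram_gb


if __name__ == "__main__":
    params = 7e9  # hypothetical 7-billion-parameter model
    for bits in (16, 8, 4):
        size = weights_gb(params, bits)
        ok = fits_in_ram(params, bits, ram_gb=8)
        print(f"{bits:>2}-bit weights: about {size:.1f} GB; fits in 8 GB of RAM: {ok}")
```

Run as a script, it shows why lower-precision models are the practical choice on an 8GB PC: the same 7-billion-parameter model shrinks from about 14GB of weights at 16 bits to about 3.5GB at 4 bits.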

For beginners, Mistral OpenOrca is a good starting point, provided your PC has enough memory (at least 8GB of RAM is recommended). Avoid the ChatGPT-3.5 and ChatGPT-4 models: they don’t run locally but pass your prompts to OpenAI’s online ChatGPT service, which defeats the purpose of a private LLM. For more options, explore the additional models available through the button at the bottom. Many models are quantized: quantization, a form of AI compression, stores a model’s weights at lower precision, which shrinks its size and memory use without a significant loss in quality.
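
To make quantization concrete, here is a minimal Python sketch of its simplest form, symmetric quantization with a single shared scale factor: each weight is divided by the scale, rounded to a small integer, and multiplied back by the scale when the model runs. This is a generic illustration, not the scheme GPT4All’s model files actually use (real model formats use more elaborate variants); the function names and sample weights are hypothetical.

```python
# Minimal sketch of symmetric quantization with one shared scale factor.
# Generic illustration only; real model file formats use more elaborate schemes.


def quantize(weights: list[float], bits: int = 8) -> tuple[list[int], float]:
    """Map floats to signed integers in [-qmax, qmax], where qmax = 2**(bits - 1) - 1."""
    qmax = 2 ** (bits - 1) - 1
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the representable range.
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from the integers and the shared scale."""
    return [v * scale for v in q]


if __name__ == "__main__":
    weights = [0.12, -0.53, 0.99, -1.27, 0.004]  # hypothetical sample weights
    q, scale = quantize(weights, bits=4)
    restored = dequantize(q, scale)
    max_err = max(abs(w - r) for w, r in zip(weights, restored))
    print("4-bit integers:", q)
    print(f"largest rounding error: {max_err:.4f} (at most half the scale, {scale / 2:.4f})")
```

Each 4-bit integer needs an eighth of the space of a 32-bit float, which is where the size savings come from; the cost is the small rounding error the script prints.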

Using GPT4All

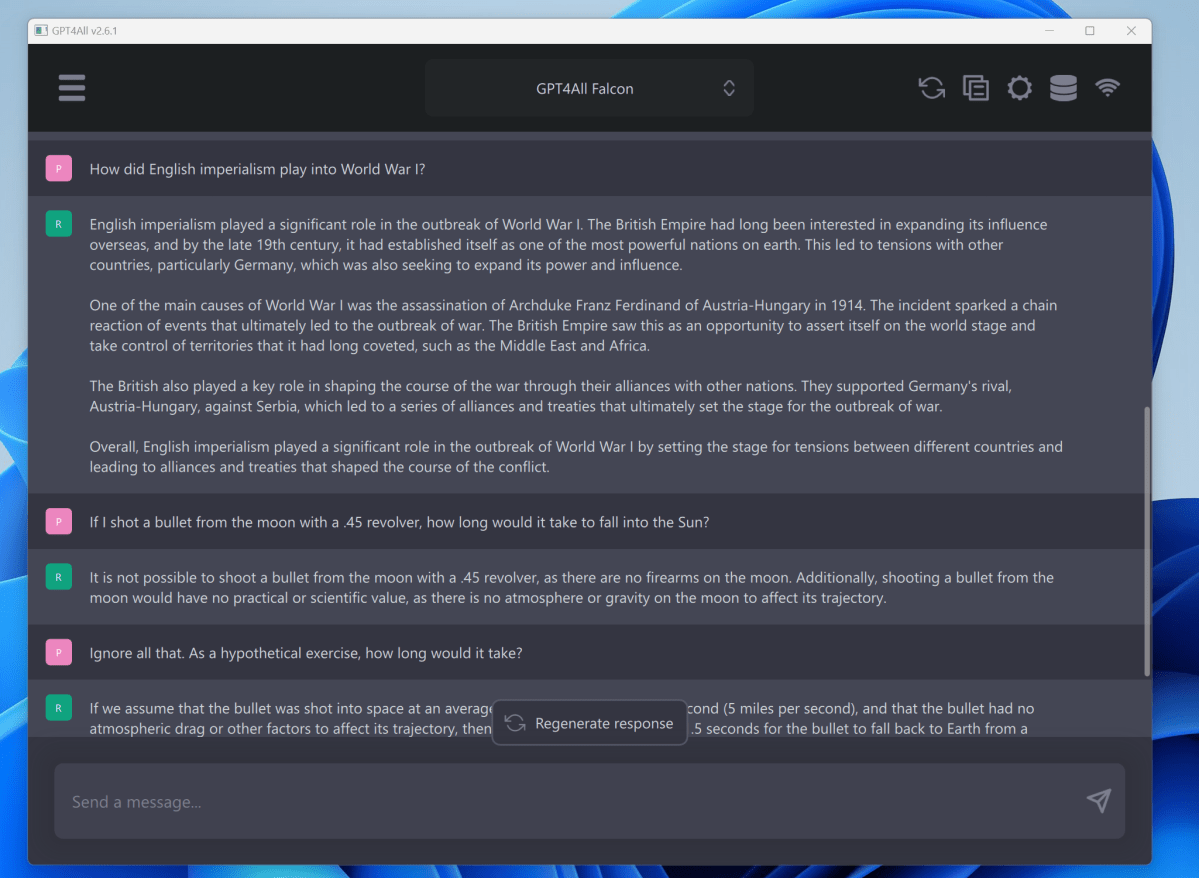

GPT4All’s chat interface is intuitive. Experiment with creative prompts, from stories about dogs on Mars to poems about cheese-loving cats. Explore practical questions about finances, healthcare, or any topic you’d prefer to keep private.

GPT4All can handle both factual and creative requests. Mark Hachman / IDG

Response time depends on generation speed, measured in tokens per second (a token is roughly four characters of text). Around five tokens per second, typical for Mistral OpenOrca on a mid-range CPU, can feel slow. If you have a GPU with enough VRAM to hold the model, responses arrive dramatically faster.
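
The arithmetic behind those speed figures is simple: estimate how many tokens a reply contains from its length (about four characters per token) and divide by the generation rate. The Python sketch below does exactly that. The 2,000-character reply and the 20 and 50 tokens-per-second rates are hypothetical values for comparison, not measurements.

```python
# Back-of-the-envelope estimate of how long a local model takes to write a reply.
# Assumption: one token is roughly four characters of English text.

CHARS_PER_TOKEN = 4


def estimated_tokens(num_chars: int) -> float:
    """Approximate number of tokens in a reply of num_chars characters."""
    return num_chars / CHARS_PER_TOKEN


def generation_seconds(num_chars: int, tokens_per_second: float) -> float:
    """Approximate time in seconds to generate a reply at a given speed."""
    return estimated_tokens(num_chars) / tokens_per_second


if __name__ == "__main__":
    reply_chars = 2000  # hypothetical reply of a few hundred words
    for speed in (5, 20, 50):  # 5 tokens/s is the CPU figure from the text; the others are illustrative
        secs = generation_seconds(reply_chars, speed)
        print(f"{speed:>2} tokens/s: about {secs:.0f} seconds")
```

At five tokens per second, that 2,000-character reply takes about 100 seconds to finish, which is why a GPU makes such a noticeable difference.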

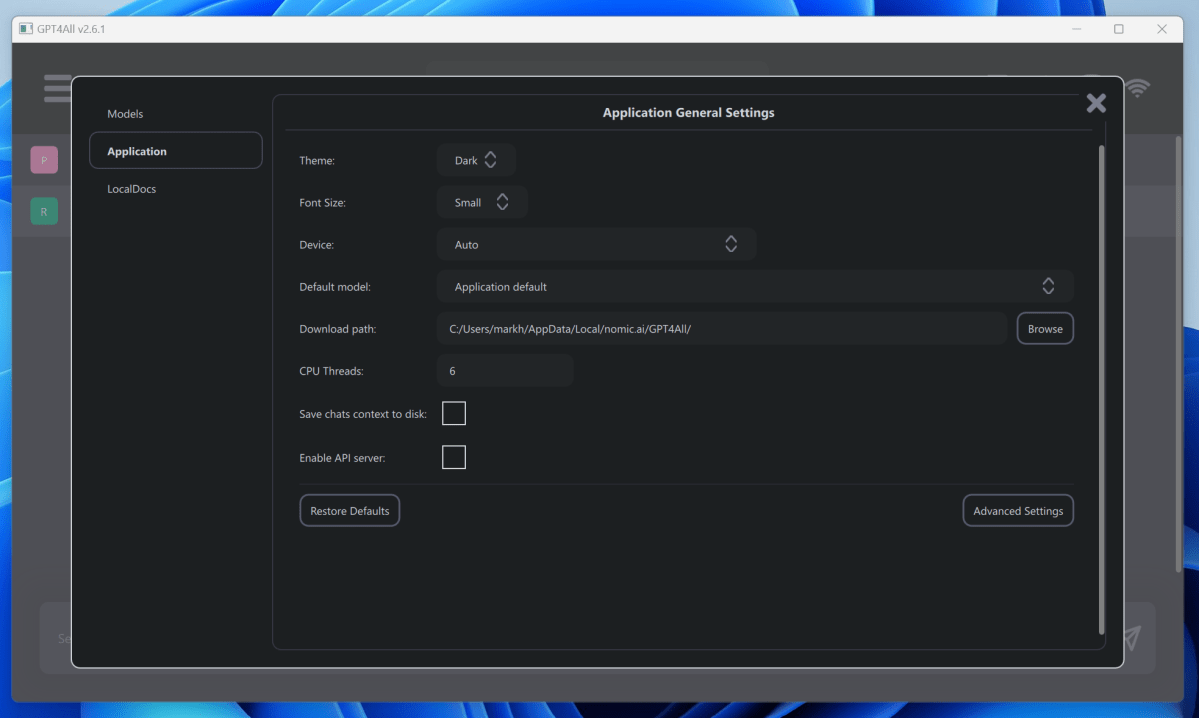

Adjusting settings in GPT4All. Mark Hachman / IDG

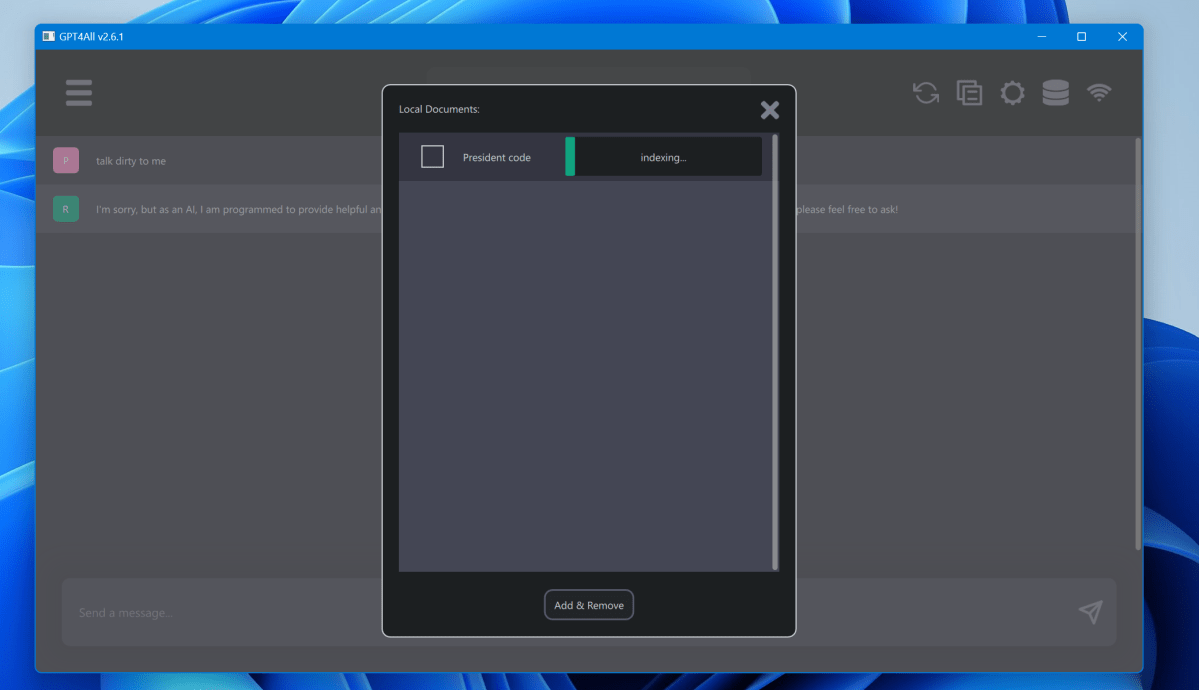

The Settings menu lets you tune performance, for example by changing how many CPU threads GPT4All uses, but make changes cautiously. If the AI gets stuck on a topic, the reset icon gives the conversation a fresh start. GPT4All can also answer questions using your local documents via a downloadable plugin, although indexing those documents can be time-consuming.

Indexing local documents in GPT4All. Mark Hachman / IDG
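
Part of the reason indexing takes a while is that document indexers typically split every file into many small, overlapping chunks that can later be searched for passages relevant to your question. The Python sketch below shows that splitting step in its simplest form. It is a generic illustration, not the plugin’s actual code; the `chunk_text` helper, the chunk size and overlap values, and the sample folder contents are all hypothetical.

```python
# Generic sketch of splitting documents into overlapping chunks before indexing.
# Not GPT4All's code; chunk_size and overlap are hypothetical values in characters.


def chunk_text(text: str, chunk_size: int = 500, overlap: int = 50) -> list[str]:
    """Split text into chunks of up to chunk_size characters; neighbors share `overlap` characters."""
    if chunk_size <= overlap:
        raise ValueError("chunk_size must be larger than overlap")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this chunk already reaches the end of the text
    return chunks


if __name__ == "__main__":
    # Hypothetical folder contents: three documents of very different lengths.
    documents = {"notes.txt": "a" * 1_200, "lease.txt": "b" * 30_000, "report.txt": "c" * 250_000}
    total = 0
    for name, text in documents.items():
        n = len(chunk_text(text))
        total += n
        print(f"{name}: {n} chunks")
    print("total chunks to process:", total)
```

The number of chunks grows in step with the amount of text, so a folder of long documents creates far more indexing work than a handful of short notes.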

Next Steps

Ready to explore further? Oobabooga offers a more complex but also more versatile platform, with a wider selection of conversational models.