AI voice cloning has become remarkably easy. Advanced tools can take a short voice sample, replicate it, and generate speech that closely mimics the original speaker. While this technology has existed since 2018, current tools are faster, more accurate, and readily accessible.

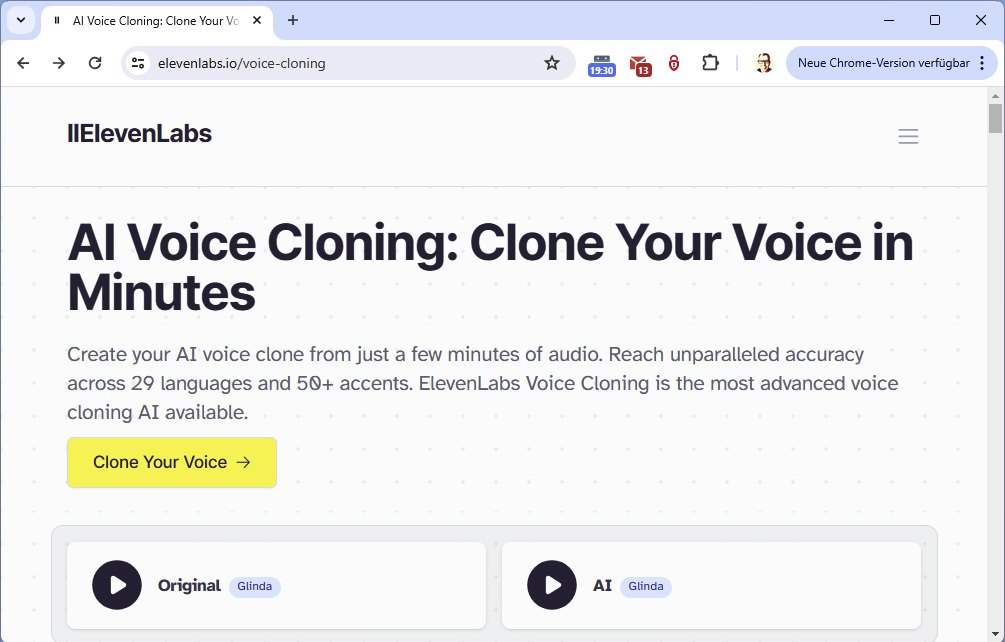

OpenAI, the company behind ChatGPT, demonstrated this capability earlier this year, cloning a voice from just a 15-second recording. While OpenAI’s tool isn’t publicly available yet and includes safeguards against misuse, other services, such as Eleven Labs, offer similar functionality. Eleven Labs can clone a voice from a one-minute sample for a nominal fee, putting the technology within reach of virtually anyone.

The potential for misuse is clear. Scammers can easily obtain voice samples from phone calls or social media videos and use cloned voices to perpetrate fraud.

Eleven Labs offers voice cloning for a small fee. While the results aren’t flawless, they are convincing enough to deceive many people.

One prevalent example is the grandparent scam. Scammers impersonate a grandchild, using a cloned voice to plead for urgent financial assistance, often citing an accident or arrest. They typically urge secrecy, discouraging victims from contacting other family members, thus preventing the scam from being exposed.

People who don’t know that voice cloning exists are particularly vulnerable to this deception. Even those familiar with the technology can be tricked if they aren’t vigilant. Moreover, questioning whether a distressed loved one is really who they claim to be feels uncomfortable, so many people hesitate to challenge the caller, which makes them more likely to fall victim.

This scam is becoming increasingly common as AI-powered voice cloning proliferates. Protecting yourself requires proactive measures.

Protecting Yourself from Voice Cloning Scams

One effective strategy is establishing a family code word. In an emergency, this code word serves as verification: if the caller cannot provide it, they are very likely a scammer. Discuss this with your family and agree on a phrase that is memorable but hard to guess, and never share it publicly.

Staying Vigilant in the Age of AI

As AI technology advances, so do the methods employed by scammers. Staying informed about these evolving tactics is crucial for protecting yourself and your loved ones. By understanding the risks and implementing simple preventative measures like a family code word, you can significantly reduce your vulnerability to these increasingly sophisticated scams.