Researchers at Stanford University, in collaboration with Google DeepMind, have developed AI agents that can replicate human behavior with surprising accuracy. In a study involving 1,052 participants, these agents, each built from a single two-hour interview, answered survey questions much as their real-world counterparts did, reaching a reported 85% accuracy on one standard social survey. This breakthrough raises both exciting possibilities and concerning ethical questions about the future of AI and its potential impact on society.

Simulating Human Behavior: A New Frontier in AI Research

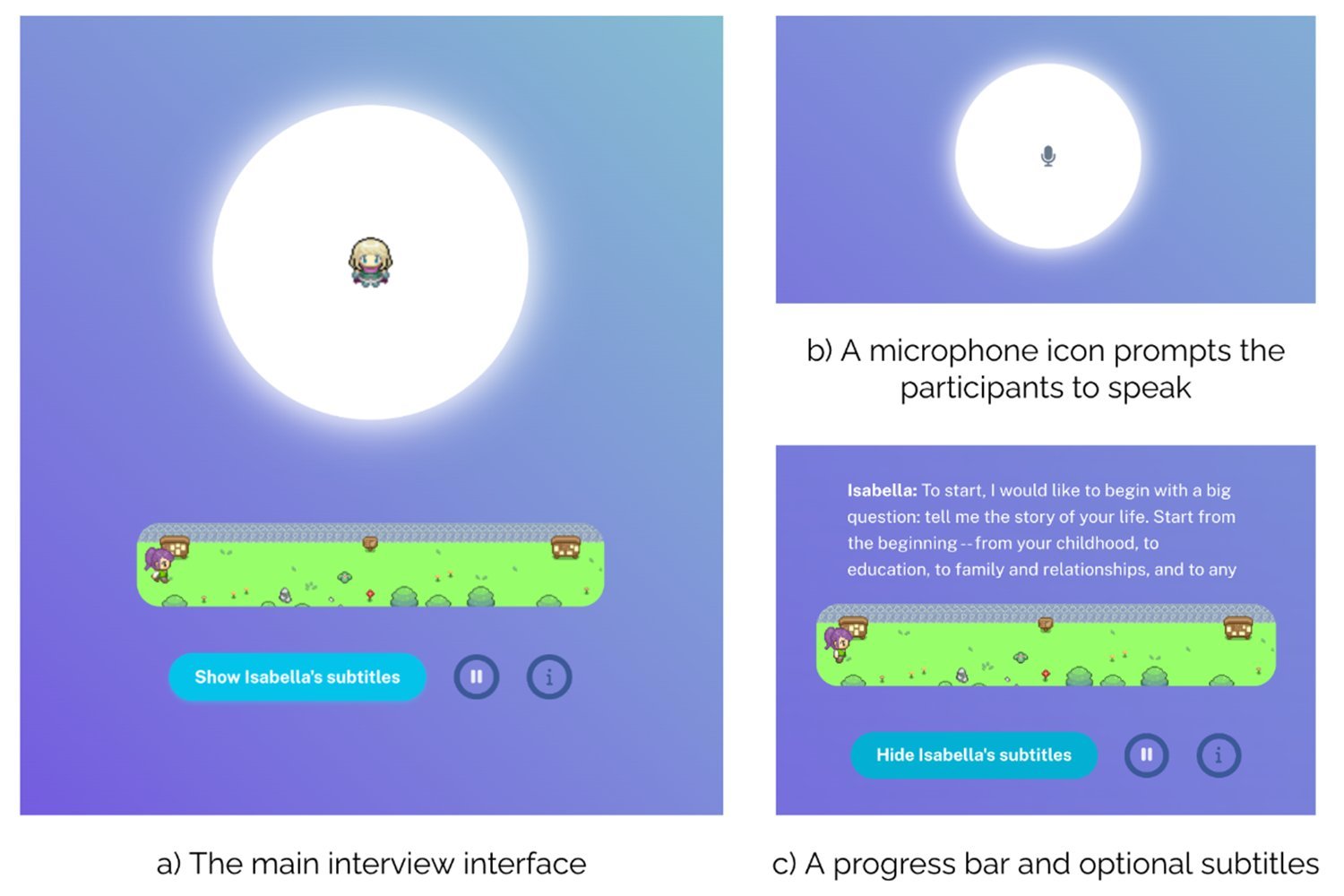

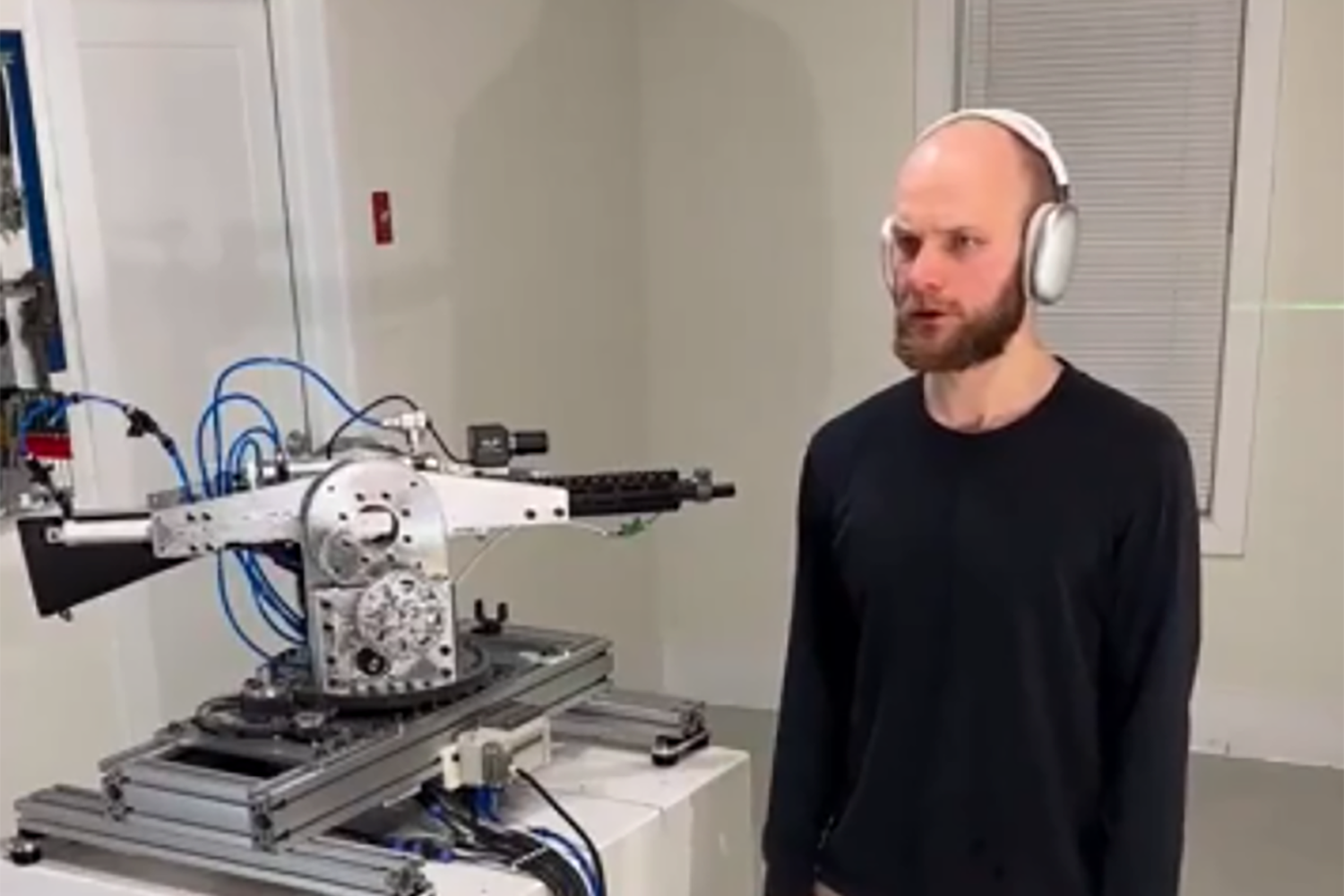

The study, titled “Generative Agent Simulations of 1,000 People,” explored the potential of AI agents to understand and simulate human behavior. Participants were paid $60 to read the opening lines of The Great Gatsby to an app and then take part in a life-story interview with an AI. The interview data were then used to create AI agents that mimicked the participants’ survey responses, with a reported 85% accuracy on the General Social Survey.

Potential Applications and Ethical Concerns

The researchers envision these AI agents as valuable tools for policymakers and businesses to better understand public opinion and predict responses to various scenarios. Imagine piloting public health policies, gauging reactions to product launches, or even anticipating responses to major societal shifts – all through the lens of simulated human interaction.

This technology could revolutionize fields like economics, sociology, and political science, offering unprecedented insights into individual and collective behavior. However, the ethical implications are equally significant. The ability to create AI personas based on limited interaction raises concerns about privacy, manipulation, and the potential for misuse.

The Study’s Methodology and Findings

The study recruited a diverse sample of participants, reflecting a broad spectrum of the U.S. population. After reading the excerpt from The Great Gatsby for audio calibration, each participant sat for a two-hour interview conducted by an AI within a custom interface. The interviews covered topics including demographics, personal beliefs, and social experiences.

The resulting transcripts, averaging 6,491 words, were then fed into a separate large language model (LLM) to generate the AI agents. These agents were subsequently tested using established social science tools like the General Social Survey (GSS) and the Big Five Inventory (BFI).
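
To make the transcript-to-agent step concrete, here is a minimal sketch of how an interview transcript might be combined with a survey question into a single prompt for an LLM. The article does not describe the researchers' actual prompt or code, so the function name `build_agent_prompt`, the prompt wording, and the sample inputs are all hypothetical, and no model is called.

```python
from typing import Sequence


def build_agent_prompt(transcript: str, question: str, options: Sequence[str]) -> str:
    """Assemble a prompt asking a model to answer as the interviewee would.

    Hypothetical helper: the wording below is an illustration, not the
    prompt used in the study.
    """
    if not options:
        raise ValueError("at least one answer option is required")
    # Number the answer options so the model can reply with a single index.
    numbered = "\n".join(f"{i}. {opt}" for i, opt in enumerate(options, start=1))
    return (
        "Below is an interview transcript with one participant.\n\n"
        f"{transcript.strip()}\n\n"
        "Answer the following survey question as this participant would.\n"
        f"Question: {question}\n"
        f"Options:\n{numbered}\n"
        "Reply with the number of the single best option."
    )


# Hypothetical placeholder inputs, not data from the study.
prompt = build_agent_prompt(
    transcript="Interviewer: Tell me about your life.\nParticipant: I grew up in a small town.",
    question="Do you generally trust your neighbors?",
    options=["Yes", "No", "It depends"],
)
print(prompt)
```

The resulting string would be sent to whichever LLM is in use; keeping prompt assembly separate from the model call makes it easy to reuse the same transcript across many survey questions.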

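The AI agents performed well on the survey instruments, scoring 85% on the GSS and 80% on the BFI. The GSS figure is a normalized one: the agents matched participants’ answers 85% as accurately as the participants reproduced their own answers two weeks later. Performance dropped noticeably on economic games such as the Prisoner’s Dilemma and the Dictator Game, where the agents achieved a correlation of only around 60% with participants’ choices.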
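
As a rough illustration of how scores like these can be computed, the sketch below measures exact-match accuracy for categorical survey answers, divides it by a participant's own retest consistency (the paper reports GSS accuracy relative to how well participants reproduced their own answers two weeks later), and computes a Pearson correlation for numeric scores. All function names and the toy data are hypothetical; this is not the study's evaluation code.

```python
import math


def match_accuracy(predicted, actual):
    """Fraction of items where the two answer lists agree exactly."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("answer lists must be non-empty and of equal length")
    return sum(p == a for p, a in zip(predicted, actual)) / len(actual)


def normalized_accuracy(agent_acc, retest_acc):
    """Agent accuracy relative to the participant's own retest consistency."""
    if retest_acc <= 0:
        raise ValueError("retest accuracy must be positive")
    return agent_acc / retest_acc


def pearson(xs, ys):
    """Pearson correlation coefficient between two numeric sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x, mean_y = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical toy data for one participant (not from the study).
human_gss = ["agree", "disagree", "agree", "neutral", "agree"]
human_retest = ["agree", "disagree", "agree", "agree", "agree"]  # two weeks later
agent_gss = ["agree", "disagree", "neutral", "agree", "agree"]

agent_acc = match_accuracy(agent_gss, human_gss)      # agent vs. participant
retest_acc = match_accuracy(human_retest, human_gss)  # participant vs. self
gss_score = normalized_accuracy(agent_acc, retest_acc)

# Hypothetical personality-scale scores (1-5) for participant and agent.
human_bfi = [3.5, 4.0, 2.5, 3.0, 4.5]
agent_bfi = [3.0, 4.5, 2.0, 3.5, 4.0]
bfi_r = pearson(human_bfi, agent_bfi)

print(f"raw={agent_acc:.2f} retest={retest_acc:.2f} normalized={gss_score:.2f} r={bfi_r:.2f}")
```

Normalizing by retest consistency matters because people do not answer identically when asked the same question twice, so perfect raw agreement with a single survey sitting is not a realistic ceiling for any simulation.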

The Future of AI Agents and Society

The study demonstrates the potential of AI to replicate human behavior, albeit with limitations. While the accuracy in answering social science questions is impressive, the disparity in performance during economic games suggests that complex decision-making remains a challenge for AI.

This research is a stepping stone towards more sophisticated AI agents. The implications are profound, offering both powerful tools for understanding human behavior and potential avenues for misuse. As this technology evolves, it’s crucial to consider the ethical implications and strive for responsible development and application of AI in our society.

Conclusion

The Stanford study offers a glimpse into the future of AI, where simulated humans could play increasingly significant roles in research, policymaking, and business. While the technology is still in its early stages, the potential benefits and risks are substantial. Further research and careful ethical considerations are paramount as we navigate this new frontier in artificial intelligence.