The AI chatbot ChatGPT from OpenAI has sparked immense hype around generative artificial intelligence and dominates media coverage. However, numerous alternatives exist beyond OpenAI’s offerings, some of which offer local PC deployment and unlimited free usage. This article explores four such local chatbots, enabling you to converse with AI and generate text, even on older hardware.

These chatbots typically comprise two components: a front-end interface and a large language model (LLM) that powers the AI. You choose the model after installing the front-end. While basic operation is straightforward, some chatbots offer advanced settings requiring technical expertise. Rest assured, they function effectively with default configurations too.

Local AI Capabilities

A local LLM’s performance depends heavily on the resources you provide. LLMs demand significant computing power and RAM for swift responses. Insufficient resources can prevent larger models from launching or cause smaller ones to respond slowly. A modern Nvidia or AMD graphics card accelerates performance, as most local chatbots and AI models leverage GPU capabilities. Weaker graphics cards force reliance on the CPU, resulting in slower processing.

8GB of RAM limits you to very small AI models, capable of answering simple queries but struggling with complex topics. 12GB offers a noticeable improvement, while 16GB or more is ideal for smooth operation with models boasting 7 to 12 billion parameters. Model names often indicate parameter count, with suffixes like “2B” or “7B” denoting billions.
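A common rule of thumb, which is an assumption here and not a figure from any of the tools, is that a model needs about its parameter count times the bytes stored per weight, plus some overhead for the context and the runtime. Local chatbots usually load quantized models that store roughly 4 bits per weight. The short Python sketch below applies this estimate. The 20 percent overhead factor is a guessed value.

```python
def estimate_model_memory_gb(params_billion: float, bits_per_weight: int = 4,
                             overhead: float = 1.2) -> float:
    """Rough RAM/VRAM estimate in GB (10**9 bytes) for loading a model.

    Assumption: the weights dominate memory use, and `overhead` (default +20%)
    is a guessed allowance for the context cache and the runtime itself.
    """
    weight_bytes = params_billion * 1e9 * bits_per_weight / 8
    return weight_bytes * overhead / 1e9


# Parameter counts as suggested by model names such as "2B", "7B" or "12B"
for params, bits in [(2.6, 4), (7, 4), (12, 4), (7, 16)]:
    print(f"{params}B at {bits}-bit: ~{estimate_model_memory_gb(params, bits):.1f} GB")
```

Under these assumptions, a 7B model needs about 4.2GB at 4 bits but roughly 17GB at 16 bits. This is why quantized versions are the norm on home PCs.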

For systems with 8GB of RAM and no dedicated GPU, Gemma 2 2B (2.6 billion parameters) delivers fast, well-structured results. For even lower requirements, Llama 3.2 1B in LM Studio is a viable option. If your PC has ample RAM and a powerful GPU, try Gemma 2 9B or larger Llama models such as Llama 3.1 8B, which you can load through Msty, GPT4All, or LM Studio. Information on Llama models is provided below. Note that OpenAI’s ChatGPT cannot be installed on a local PC; its apps and tools require an internet connection.

Getting Started

Using these chatbots follows a similar process: install the tool, load an AI model, and switch to the chat area. Llamafile simplifies this further by bundling the AI model with the program, so there is no separate model download. Each Llamafile contains a different model.

Llamafile: Simplicity at its Core

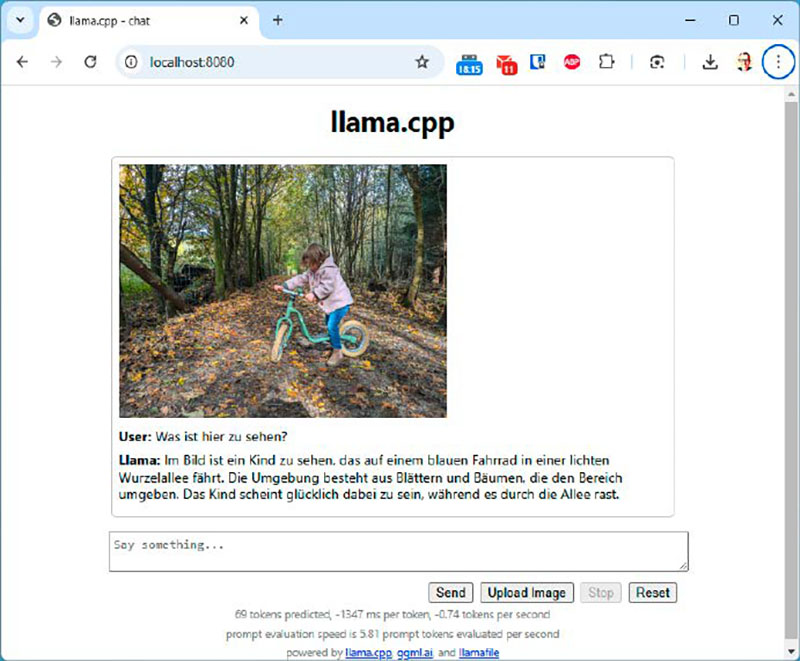

Llamafiles provide the easiest way to interact with a local chatbot, aiming to democratize AI access. By packaging the front-end and AI model into a single executable Llamafile, users simply launch the file and access the chatbot in their browser. While the interface is basic, its simplicity is its strength.

The Llamafile chatbot is available in different versions, each with different AI models. With the Llava model, you can also integrate images into the chat. Overall, Llamafile is easy to use as a chatbot.


Installation

Download the Llamafile for your chosen model. For example, the file for the Llava 1.5 model (7 billion parameters) is named “llava-v1.5-7b-q4.llamafile.” On Windows, the file needs the .exe extension, so rename it in Windows Explorer to “llava-v1.5-7b-q4.llamafile.exe” and confirm any warnings. Double-click the file to launch it; a command prompt window may appear, which belongs to the program, not the chatbot. The chatbot itself runs in your browser: open it and enter 127.0.0.1:8080 or localhost:8080. To use a different AI model, download the corresponding Llamafile from Llamafile.ai (under “Other example llamafiles”) and give each one the .exe extension as well.
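The browser page is not the only way in. Llamafile’s built-in server also offers an OpenAI-style chat endpoint at /v1/chat/completions on the same port, which llamafile’s README demonstrates with curl. The following Python sketch uses only the standard library to send a question to it. It assumes the default address 127.0.0.1:8080 and reuses the model name “LLaMA_CPP” from that README example. The Llamafile must already be running.

```python
import json
import urllib.request


def build_chat_request(prompt: str, host: str = "127.0.0.1",
                       port: int = 8080) -> urllib.request.Request:
    """Build a POST request for the OpenAI-style chat endpoint of a running llamafile."""
    body = {
        # Name from llamafile's README example; assumed not to select a model
        "model": "LLaMA_CPP",
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        f"http://{host}:{port}/v1/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_llamafile(prompt: str, host: str = "127.0.0.1", port: int = 8080) -> str:
    """Send the prompt and return the text of the model's first reply."""
    req = build_chat_request(prompt, host, port)
    # Local models can be slow on weak hardware, hence the generous timeout
    with urllib.request.urlopen(req, timeout=300) as resp:
        reply = json.load(resp)
    return reply["choices"][0]["message"]["content"]
```

With the Llamafile running, `print(ask_llamafile("Explain RAM in one sentence."))` prints the model’s answer. Building the request separately from sending it lets you inspect the request without a running server.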

Chatting

The browser interface displays chatbot settings at the top and a chat input field (“Say something”) at the bottom. Llava-based Llamafiles (e.g., llava-v1.5-7b-q4.llamafile) support image analysis; upload images via “Upload Image” and “Send.” (“Llava” stands for “Large Language and Vision Assistant.”) Close the prompt window to exit.

Tip: Network Usage

Llamafiles can also serve other devices on your local network. Launch the chatbot on a powerful PC and make sure that PC accepts connections from the network on port 8080. Then open the chatbot in the browser of any other device using the address “<PC’s IP address>:8080.”
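You can look up the PC’s local IP address in its network settings. Alternatively, this small Python sketch, a common trick and not part of Llamafile, asks the operating system which network interface it would use for outgoing traffic and appends port 8080. Connecting a UDP socket sends no data. The address 192.0.2.1 is a reserved documentation address, used here only to trigger the route lookup.

```python
import socket


def lan_chat_address(port: int = 8080) -> str:
    """Return '<LAN IP>:<port>' for this PC, falling back to the loopback address."""
    s = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        # A UDP connect sends no packets; it only makes the OS choose the
        # outgoing interface, whose address we then read back.
        s.connect(("192.0.2.1", 80))
        ip = s.getsockname()[0]
    except OSError:
        # No network route available: only local access will work
        ip = "127.0.0.1"
    finally:
        s.close()
    return f"{ip}:{port}"


print(lan_chat_address())
```

Type the printed address into the browser on a phone or laptop in the same network.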

Msty: Versatile and Feature-Rich

Msty provides access to numerous language models, offers helpful guidance, and lets you import local files for the AI to work with. It is not entirely intuitive, but it becomes easy to use after a short learning curve.

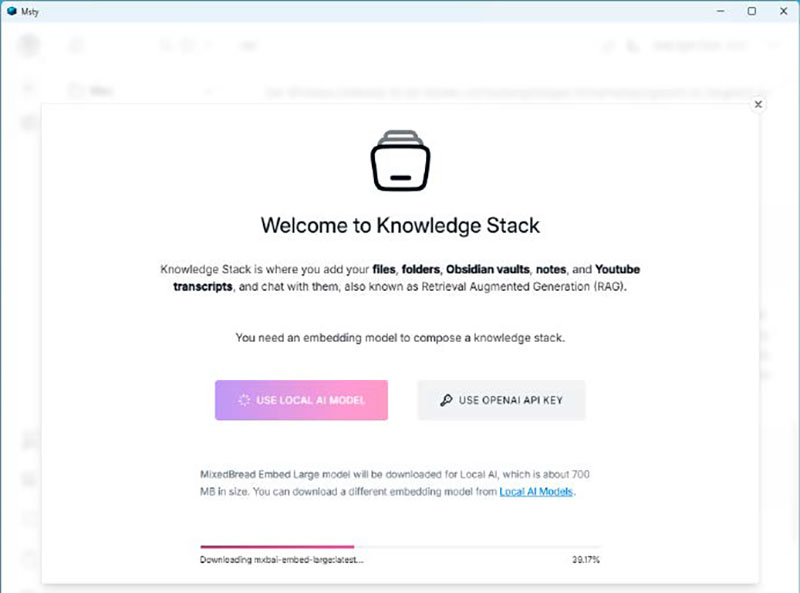

If you want to make your own files available to the AI purely locally, you can do this in Msty in the so-called Knowledge Stack. That sounds a bit pretentious. However, Msty actually offers the best file integration of the four chatbots presented here.


Installation

Msty comes in two versions: one with GPU support (Nvidia/AMD) and one for CPU-only execution. During setup, choose between a local installation (“Set up local AI”) and a server installation. The local installation uses the Gemma 2 model (1.6GB) by default, which is suitable for text generation on less powerful hardware. Click “Gemma2” to choose from five other models instead. Later, you can access a broader model library via “Local AI Models” (e.g., Gemma 2 2B, Llama 3.1 8B) or download models from www.ollama.com and huggingface.co via “Browse & Download Online Models.”

Interface and Features

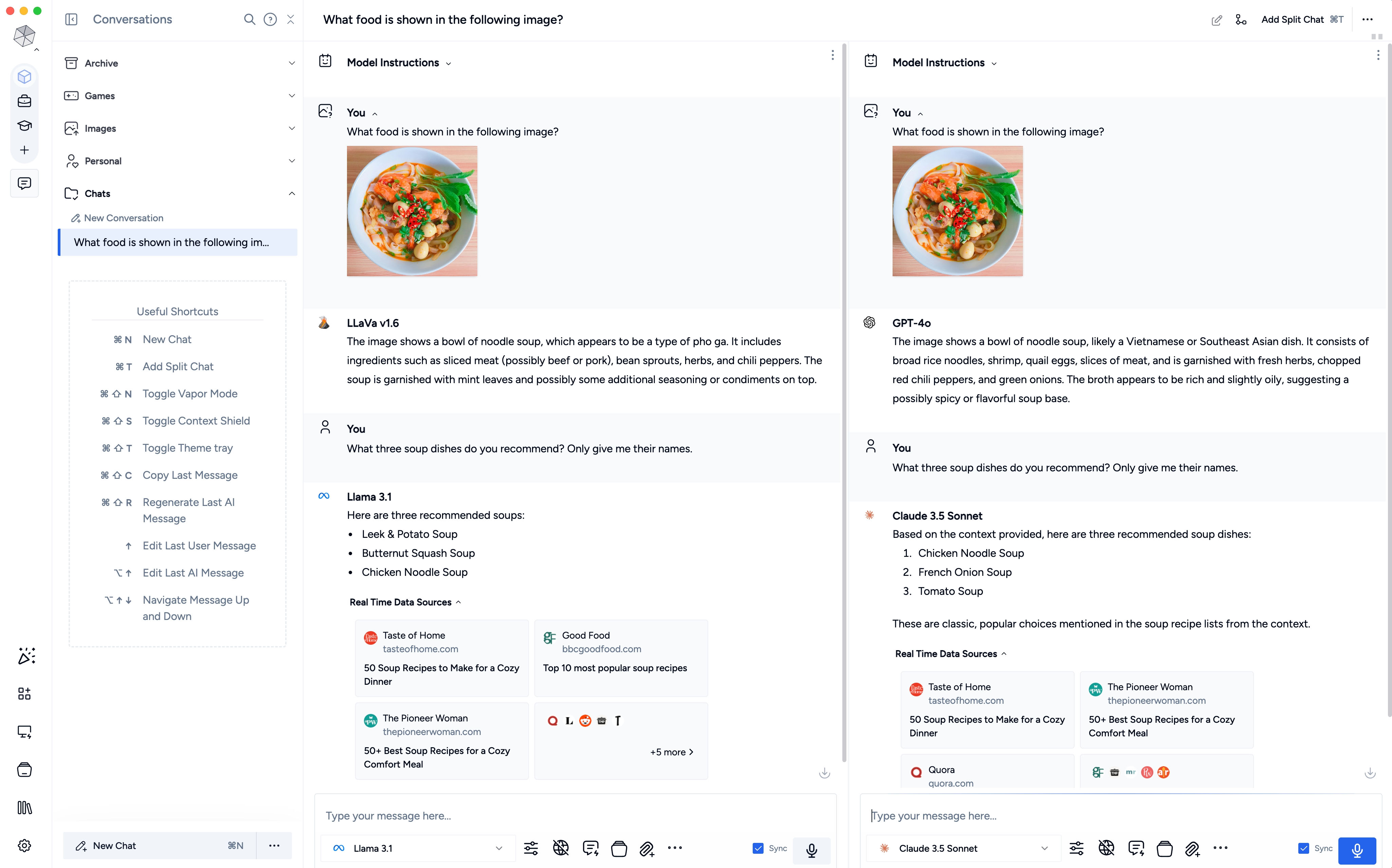

Msty’s interface is visually appealing and well-organized. While some features might require exploration, the tool allows for easy model integration and file import. Msty provides graphical menus for accessing the often complex options of individual models. A unique feature is “splitchats,” enabling simultaneous queries to multiple AI models. This facilitates model comparison by displaying multiple chat instances side-by-side.

Integrating Your Files

“Knowledge Stacks” facilitate file integration. Choose an embedding model to prepare your data for the LLMs (Mixedbread Embed Large is the default). Be mindful of online embedding models (e.g., OpenAI), as these transmit your data to external servers. After adding documents to “Knowledge Stacks,” select “Attach Knowledge Stack and Chat with them” beneath the chat input. Tick your stack and ask a question; the model will search your data for relevant answers, though this functionality is still under development.
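Behind the scenes, this kind of file integration is a form of retrieval. The embedding model turns both your documents and your question into vectors, and the text chunks whose vectors are most similar to the question are passed to the LLM as context. The Python sketch below only illustrates that principle. It replaces the neural embedding model (such as Mixedbread Embed Large) with simple word counts and is not Msty’s actual implementation.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the question."""
    q = embed(question)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


chunks = [
    "The invoice for the printer is due on 15 March.",
    "Our office Wi-Fi password changes every month.",
    "Printer toner is stored in the supply cabinet.",
]
question = "Where is the printer toner?"
top = retrieve(question, chunks, k=1)
# The retrieved text is placed in the prompt ahead of the question
prompt = "Answer using this context:\n" + "\n".join(top) + "\nQuestion: " + question
print(prompt)
```

Here the question shares the words “printer” and “toner” with the third text, so that chunk scores highest and ends up in the prompt. A real embedding model also finds passages that express the same meaning in different words.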

A special feature of Msty is that you can ask several AI models for advice at the same time. However, your PC needs enough memory to run them together; otherwise, you will have to wait a long time for the finished answers.


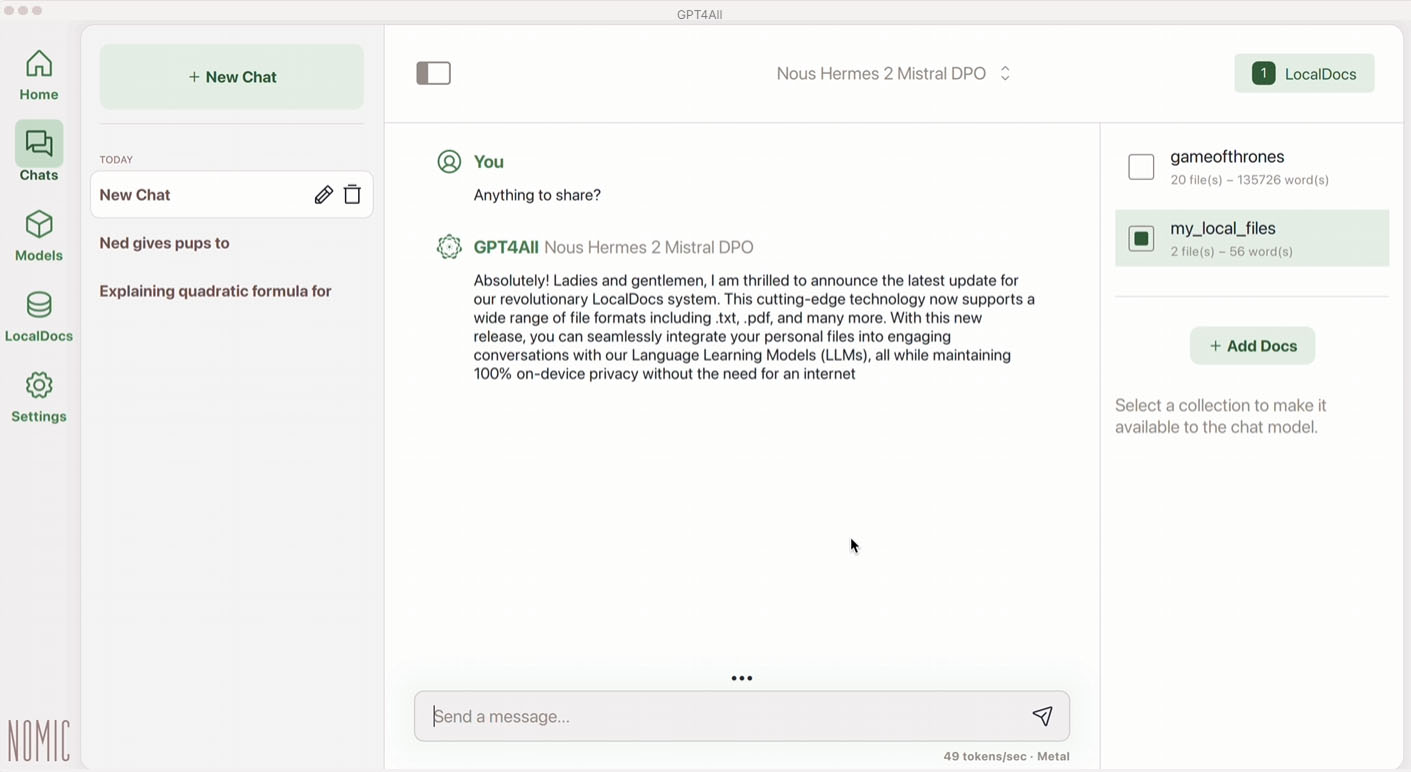

GPT4All: Simple and Efficient

GPT4All offers a straightforward interface, a curated selection of models, and local file integration. Its model selection is smaller than Msty’s, but the program is easier to navigate. Additional models can be downloaded from Hugging Face (huggingface.co).

The GPT4All chatbot is a solid front end that offers a good selection of AI models and can load more from Hugging Face. The user interface is well structured, so you quickly find your way around.


Installation and Models

GPT4All’s installation is typically quick and easy. The “Models” section lists the available models, including Llama 3 8B, Llama 3.2 3B, Microsoft Phi 3 Mini, and EM German Mistral, each with the amount of RAM it requires. A search function gives access to further models on Hugging Face. If you also want online models, you can add OpenAI (ChatGPT) and Mistral via API keys.

Usage and File Integration

GPT4All’s interface resembles Msty’s but has fewer features, which keeps it simple. Loading models and selecting them for chat is straightforward. “LocalDocs” handles file integration; unlike Msty, however, it does not let you choose the embedding model and always uses nomic-embed-text-v1.5. GPT4All is generally stable, though it does not always make clear whether a model has finished loading.

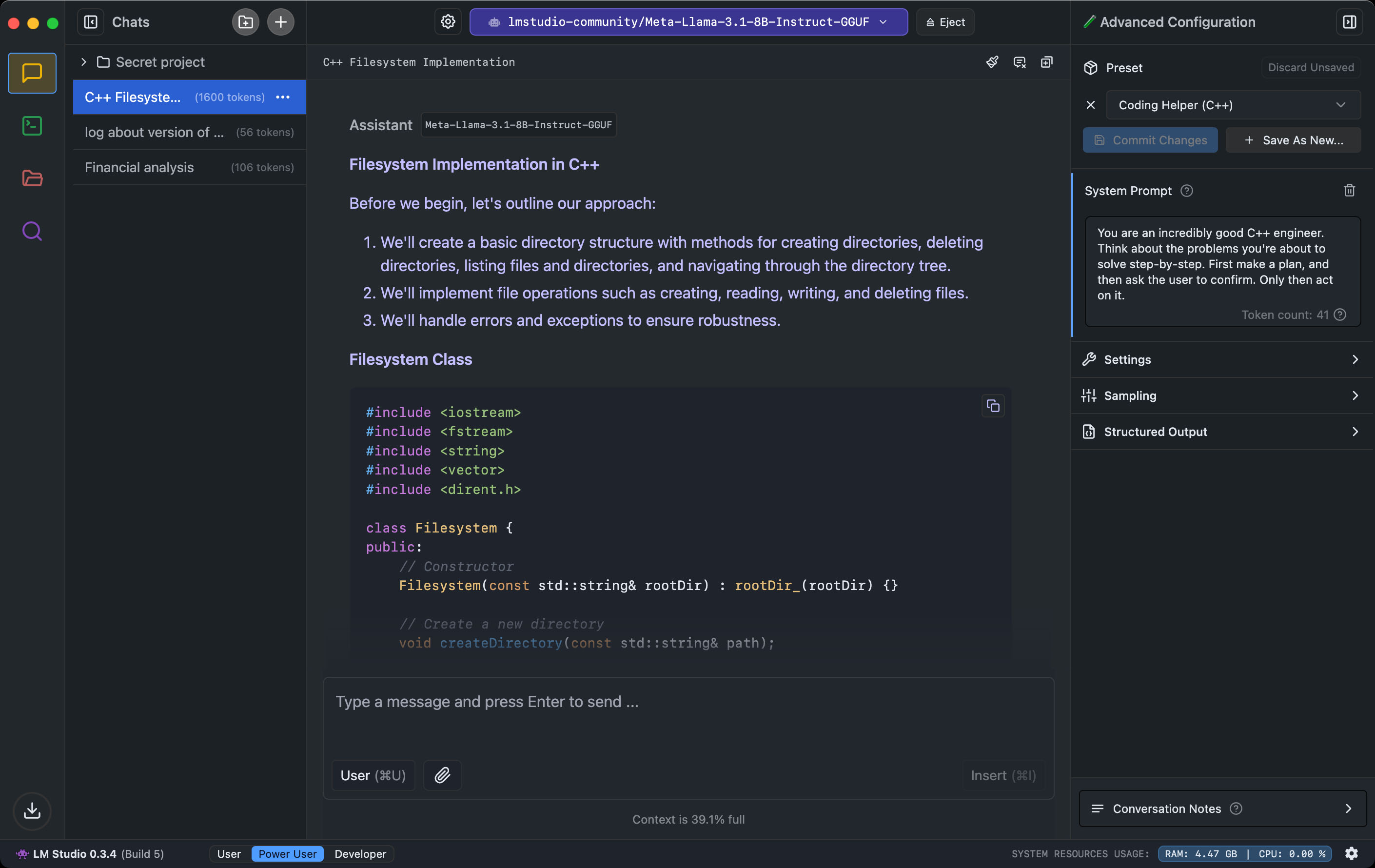

LM Studio: Power User and Developer Focused

LM Studio caters to beginners, power users, and developers. Despite this categorization, its focus leans towards professionals, providing access to numerous models and their intricacies.

The LM Studio chatbot not only gives you access to a large selection of AI models from Hugging Face but also lets you fine-tune the models’ settings. A separate developer view is available for this.


Installation and Models

Post-installation, LM Studio prompts you to download your first LLM, suggesting Llama 3.2 1B, a lightweight model suitable for older hardware. After downloading, load the model. Add more models via Ctrl-Shift-M or the “Discover” icon.

Chatting and Document Integration

LM Studio offers three view modes: “User,” “Power User,” and “Developer.” “User” mode resembles ChatGPT’s browser interface, while the “Power User” and “Developer” modes show detailed information such as token counts and processing speed. This level of detail makes LM Studio attractive to advanced users who want to fine-tune settings and see what the model is doing.

Documents can only be added to the current chat; permanent integration with language models isn’t supported. When you add a document, LM Studio automatically checks whether it is short enough to be included directly in the AI model’s prompt. For longer documents, it uses Retrieval Augmented Generation (RAG) to extract the most relevant content for the model, though this process does not always capture everything that matters.
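How LM Studio makes this decision internally is not described here, but the general idea can be sketched roughly. First estimate the document’s length in tokens. Then compare it with the model’s context window minus room for the question and answer, and fall back to splitting the text into overlapping chunks for retrieval if it does not fit. In this Python sketch, the four-characters-per-token ratio, the 4,096-token context, and the chunk sizes are all assumptions chosen for illustration.

```python
def estimate_tokens(text: str) -> int:
    """Rough token estimate: about 4 characters per token for English text (assumption)."""
    return max(1, len(text) // 4)


def chunk_text(text: str, size: int = 800, overlap: int = 100) -> list[str]:
    """Split text into overlapping character windows for retrieval."""
    step = size - overlap
    return [text[i:i + size] for i in range(0, max(len(text) - overlap, 1), step)]


def plan_document(text: str, context_tokens: int = 4096,
                  reserve: int = 1024) -> tuple[str, list[str]]:
    """Decide between inserting the whole document or chunking it for RAG."""
    budget = context_tokens - reserve  # leave room for the question and the answer
    if estimate_tokens(text) <= budget:
        return ("full", [text])
    return ("rag", chunk_text(text))


mode, parts = plan_document("A short note about the meeting on Friday.")
print(mode, len(parts))
```

A short note fits into the prompt in full, while a long report is split into overlapping pieces of which only the best-matching ones reach the model. This is also why RAG can miss content outside the retrieved chunks.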