Microsoft has intensified its efforts to combat AI misuse by identifying four developers allegedly responsible for bypassing safety measures on its AI tools to generate celebrity deepfakes. The identifications stem from a lawsuit Microsoft filed in December 2024, in which a court order allowed the company to seize a website linked to the operation; that seizure ultimately led to the identification of these individuals.

Unveiling the Cybercrime Network

The four developers, reportedly part of the global cybercrime network Storm-2139, are: Arian Yadegarnia (“Fiz”) from Iran, Alan Krysiak (“Drago”) from the United Kingdom, Ricky Yuen (“cg-dot”) from Hong Kong, and Phát Phùng Tấn (“Asakuri”) from Vietnam. Microsoft acknowledges identifying additional individuals involved but refrains from disclosing their names to avoid jeopardizing ongoing investigations. The group allegedly compromised accounts with access to Microsoft’s generative AI tools, circumventing safety protocols to create various images, including deepfake nudes of celebrities. They then sold this illicit access to others, further fueling the misuse of this technology.

Panic Ensues After Website Seizure

Following the lawsuit and website seizure, Microsoft reported a significant disruption within the group. According to a company blog post, the actions triggered panic and infighting among members, with some turning on each other. This internal turmoil underscores the impact of Microsoft’s legal measures.

Deepfakes: A Growing Threat to Celebrities and Individuals

Celebrities like Taylor Swift have been frequent targets of deepfake pornography, in which realistic images of a person's face are superimposed onto nude bodies. In January 2024, Microsoft updated its text-to-image models in response to the proliferation of fake Swift images online. Because generative AI makes these images easy to create even for people with limited technical skills, deepfake scandals have surged, particularly in high schools across the U.S. Victims' accounts describe the real-world harm deepfakes cause, including anxiety, fear, and a sense of violation.

The Debate on AI Safety and Open Source vs. Closed Source

The AI community continues to debate how much safety measures matter and whether concerns about AI are genuine or exaggerated to benefit major players like OpenAI. Proponents of closed-source models argue that keeping models closed, so that users cannot remove or bypass their safety controls, helps mitigate abuse. Conversely, open-source advocates believe that open access fosters innovation and that efforts to address abuse shouldn't hinder progress. However, both sides seem to overlook a more immediate threat: AI-generated misinformation and low-quality content flooding the internet.

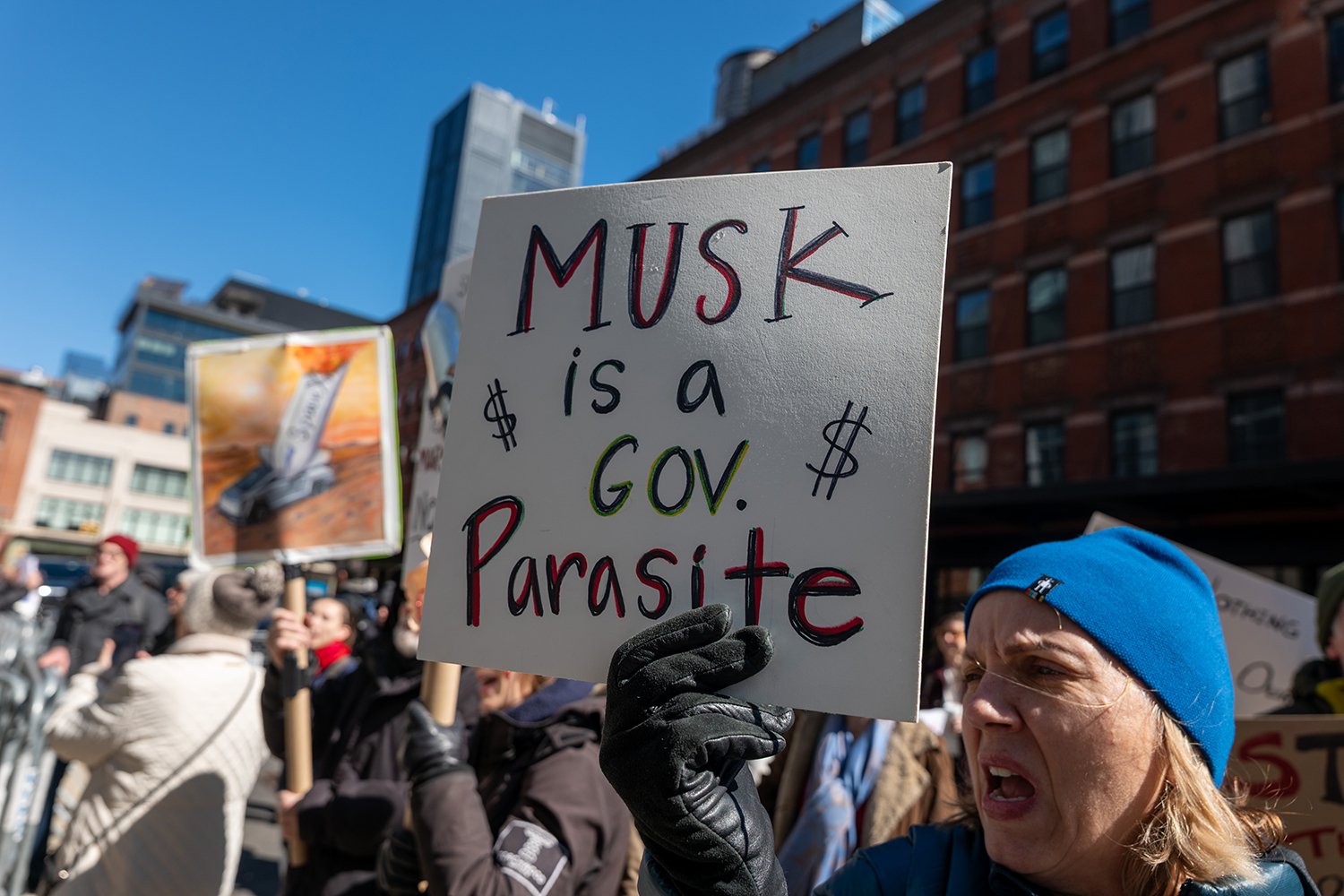

Legal Action Against Deepfake Abuse

While many AI fears may seem exaggerated, the misuse of AI to create deepfakes is undeniably real, and legal action offers a tangible way to combat it. Numerous arrests have been made across the U.S. in cases involving AI-generated deepfakes of minors. The NO FAKES Act, introduced in Congress in 2024, would give people the right to sue over unauthorized AI-generated replicas of their voice or likeness. The United Kingdom already penalizes the distribution of deepfake porn and will soon criminalize its production. Australia has also criminalized the creation and sharing of non-consensual deepfakes. These legislative efforts reflect a growing global recognition of the need to address this emerging threat.

Conclusion: Addressing the Real Threat of Deepfakes

While broader AI concerns are debated, the concrete harm caused by deepfakes demands immediate attention. Microsoft’s actions, alongside legislative initiatives worldwide, represent crucial steps in addressing this issue and protecting individuals from the damaging consequences of AI misuse. The focus should remain on tangible solutions to mitigate the very real threat of deepfakes, rather than hypothetical anxieties about AI’s future potential.