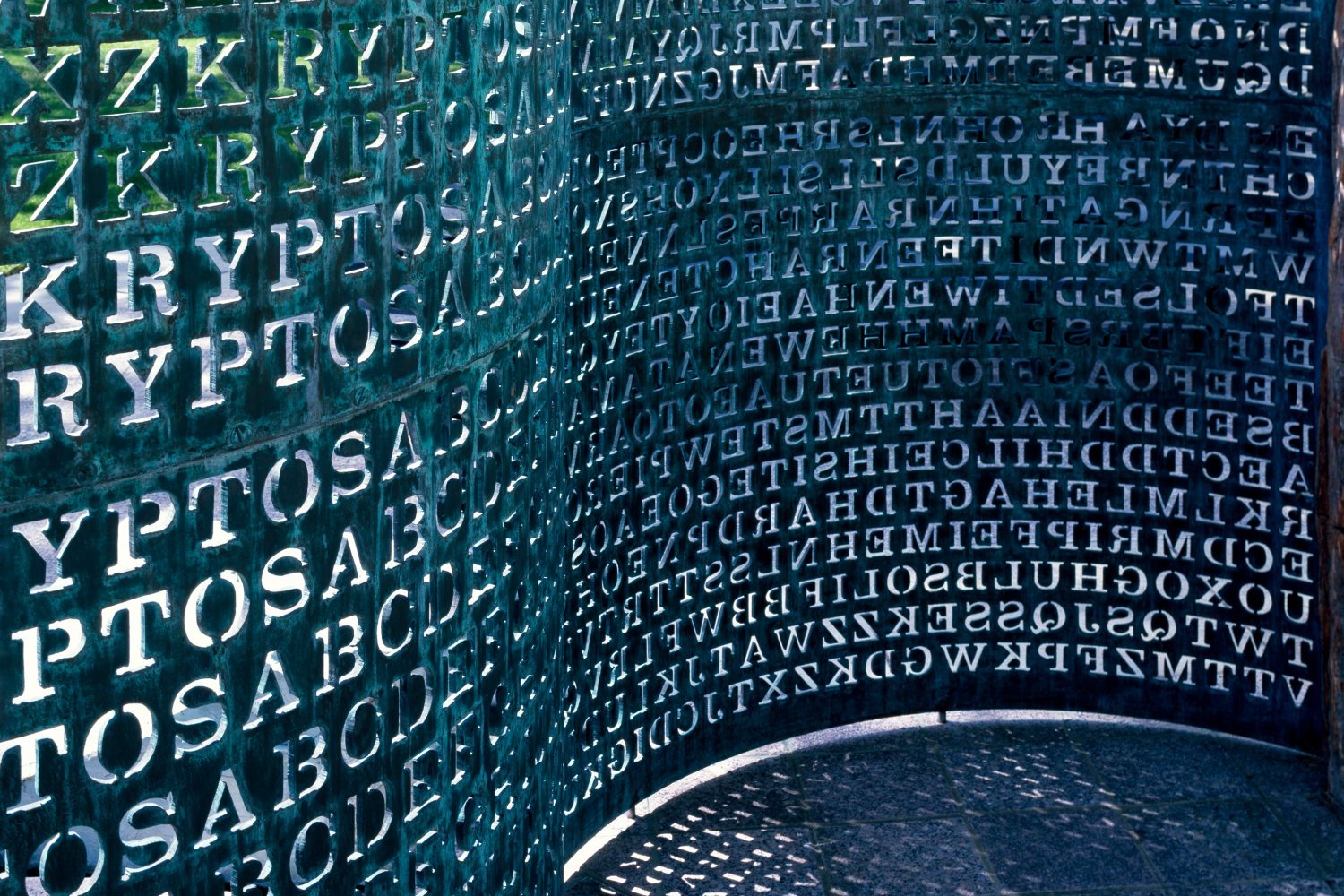

On the grounds of CIA headquarters in Langley, Virginia, stands Kryptos, a sculpture installed in 1990. The enigmatic artwork features four encrypted messages, three of which have been deciphered. The fourth, known as K4, has remained uncracked for 35 years. And according to a recent Wired report, the sculptor, Jim Sanborn, wants the world to know that AI chatbots aren’t solving it anytime soon.

Sanborn, whose works stand at institutions such as MIT and NOAA, has been flooded with supposed solutions to K4. They aren’t coming from seasoned cryptographers who have spent years on the mystery, but from people who simply fed the ciphertext into a chatbot and took the response as gospel. The surge has been particularly irksome for the 79-year-old artist, who instituted a $50 review fee after decades of fielding incorrect answers.

Sanborn told Wired that the tone of the emails has changed. Senders who use AI show unwavering confidence in their chatbot-generated solutions, convinced they’ve cracked a decades-old code over breakfast. He shared examples of the arrogant messages he’s received: “I’m just a vet…Cracked it in days with Grok 3,” “What took 35 years and even the NSA with all their resources could not do I was able to do in only 3 hours before I even had my morning coffee,” and “History’s rewritten…no errors 100% cracked.”

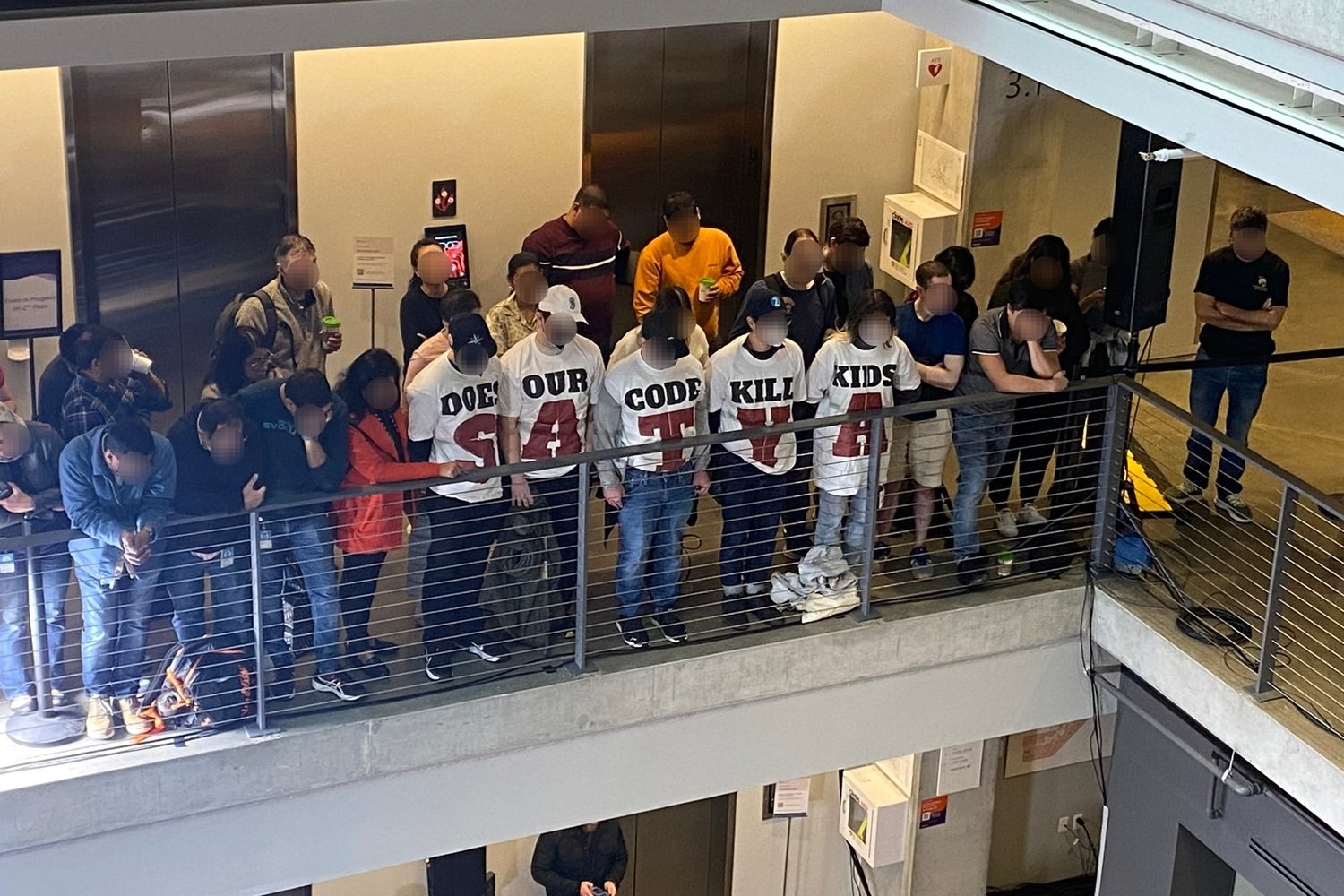

This overconfidence echoes a familiar online persona: people who tout AI chatbots as infallible sources of information and share screenshots of responses as irrefutable proof. The smugness would be perplexing even if they had genuinely cracked the code. Simply prompting a machine to solve a complex puzzle hardly warrants self-congratulation. It’s akin to checking the answer key in the back of a textbook, except in this case, the textbook might be hallucinating.

This behavior isn’t isolated. A 2024 study in Computers in Human Behavior found that people tend to over-rely on AI-generated advice, even when it contradicts other information or their own best interests, and that this over-reliance negatively affects their interpersonal interactions. Perhaps the smugness stems from the inflated sense of accomplishment that comes with outsourcing cognitive tasks.

AI has undeniable potential, but blind faith in chatbot solutions, especially for problems as hard as the Kryptos K4 cipher, is misplaced. The real challenge lies in critical thinking and rigorous analysis, not in accepting a machine’s output; accomplishment comes from sustained effort and understanding, not shortcuts. Kryptos K4 continues to beckon, awaiting a solution born of genuine ingenuity rather than artificial pronouncements.