Meta Platforms, Inc. is testing its first in-house AI training chip, aiming to significantly reduce its massive infrastructure expenses, according to Reuters. The move fits Meta’s bet on AI-powered tools as a driver of future growth, and it comes as the company projects heavy capital spending on AI infrastructure.

Meta’s new chip is a dedicated accelerator designed specifically for AI tasks. This specialization can make it more power-efficient than the graphics processing units (GPUs) commonly used for AI workloads. The company already uses in-house chips for inference, the stage in which an already-trained model is run to produce outputs, such as deciding which content to show each user. Its goal is to start using them for training as well by 2026.

This development has significant financial implications. Meta forecasts total expenses of $114 billion to $119 billion for 2025, including up to $65 billion in capital expenditures, driven largely by investment in AI infrastructure. Even if consumer applications of generative AI, such as chatbots, fall short of expectations, Meta can apply the technology to improve content recommendations and ad targeting. Because Meta’s advertising business operates at such a large scale, even small gains in ad effectiveness can translate into billions of dollars in revenue.

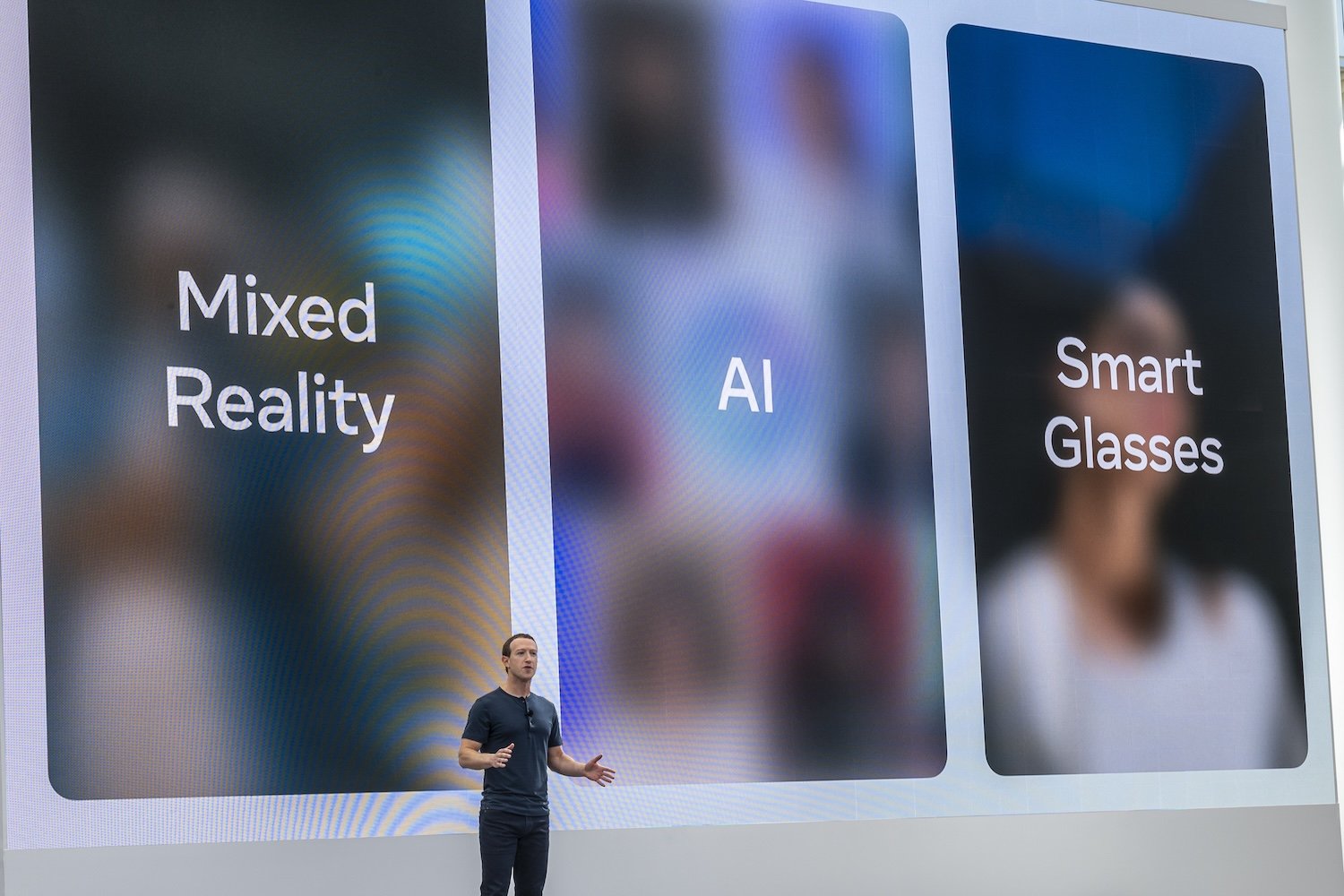

Despite setbacks in its Reality Labs division, Meta has built strong hardware teams and seen some success with its Ray-Ban AI glasses. Although internal evaluations suggest its hardware has not yet reached its full potential, the company’s VR headsets sell in the low millions of units annually. CEO Mark Zuckerberg’s long-term vision centers on building proprietary hardware platforms to reduce Meta’s dependence on Apple and Google.

Since 2022, major tech companies have invested heavily in Nvidia’s GPUs, the industry standard for AI processing. Nvidia holds a competitive edge through its CUDA software platform, but companies such as Amazon (with its Inferentia and Trainium chips) and Google (with its Tensor Processing Units) are developing their own processors to cut costs and reduce their reliance on external suppliers.

Nvidia depends heavily on a few large customers, and those same customers are developing in-house processors. That dependence, along with the emergence of more efficient AI models such as China’s DeepSeek, raises questions about Nvidia’s long-term growth. CEO Jensen Huang remains optimistic, projecting $1 trillion in data center infrastructure investment over the next five years.

This investment could fuel Nvidia’s growth into the 2030s, particularly because most companies lack Meta’s resources to develop their own chips. Meta’s effort reflects a bet on the long-term strategic value of in-house chip development, despite substantial upfront costs and a return on investment that may take years to materialize. Whether the chip delivers the anticipated cost savings and performance improvements remains to be seen.