Google’s AI advancements are often associated with Gemini, its flagship AI model integrated into various Workspace products. However, Google also actively develops open-source AI models under the Gemma label. The latest iteration, Gemma 3, boasts impressive capabilities and broad device compatibility.

Google recently unveiled its third-generation Gemma models, available in four variants: 1 billion, 4 billion, 12 billion, and 27 billion parameters. These models are designed to run on a wide range of devices, from smartphones to powerful workstations.

Optimized for Single-Accelerator Performance

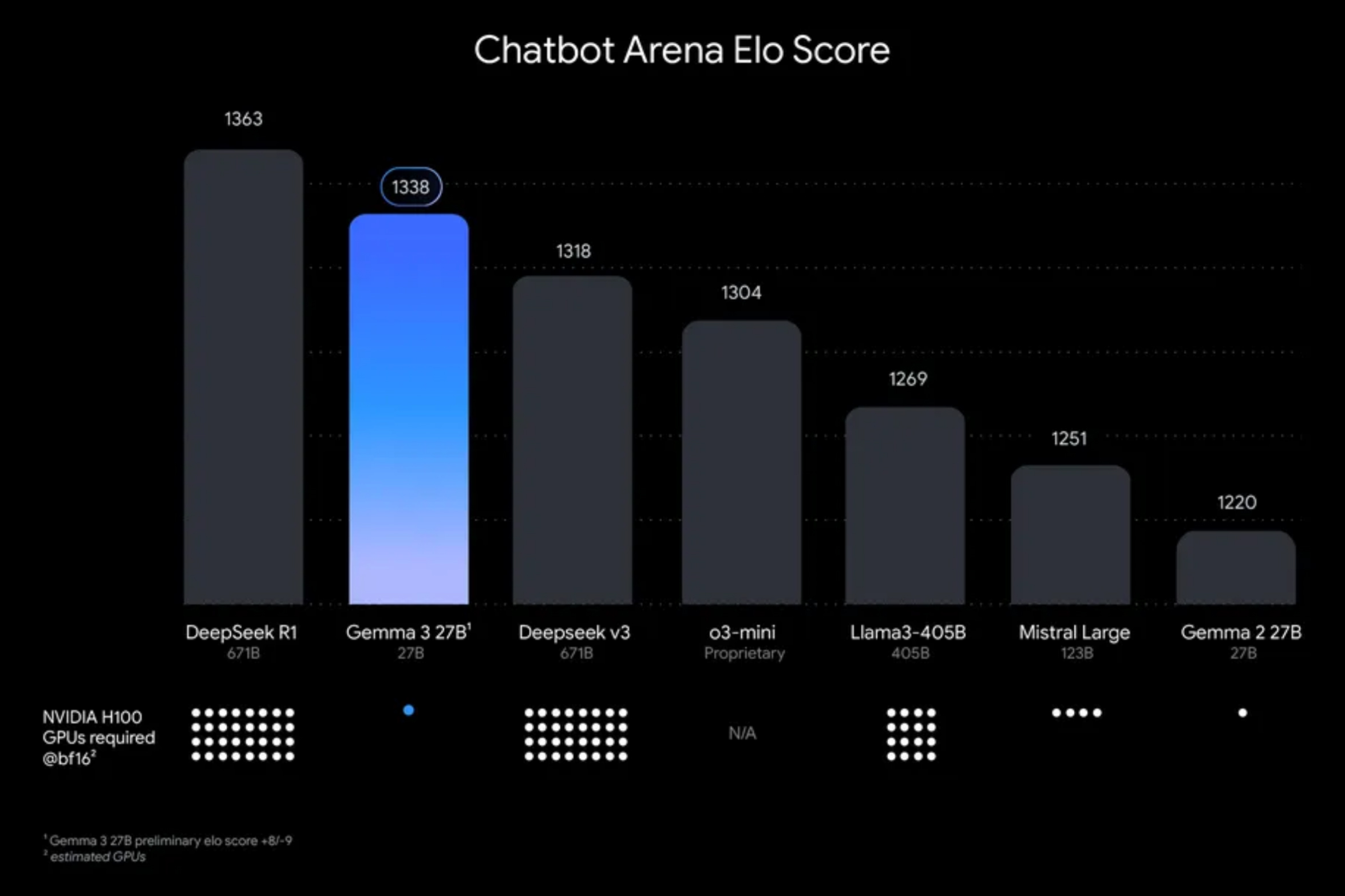

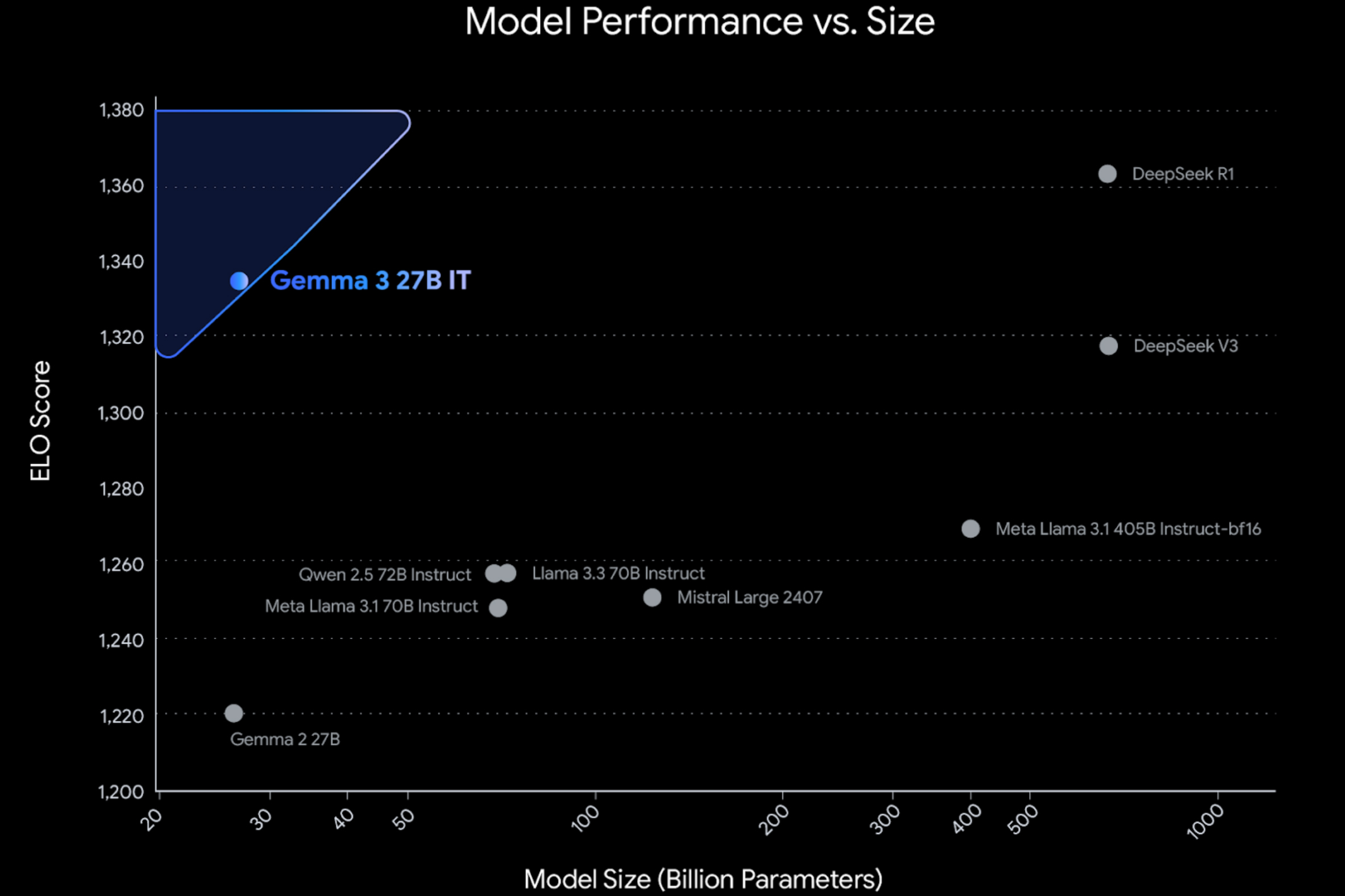

Google Gemma 3 AI model’s performance comparison.


Google bills Gemma 3 as the leading single-accelerator model, meaning it can run on a single GPU or TPU without a multi-device cluster. This allows native execution on devices like Pixel smartphones, using the Tensor Processing Unit (TPU) in the phone’s chip, similar to how Gemini Nano runs locally.

A key advantage of Gemma 3’s open-source nature is the flexibility it offers developers. They can customize and integrate it into mobile apps and desktop software according to their specific needs. Furthermore, Gemma 3 offers out-of-the-box support for more than 35 languages and pretrained support for over 140.

Multimodal Capabilities and Enhanced Performance

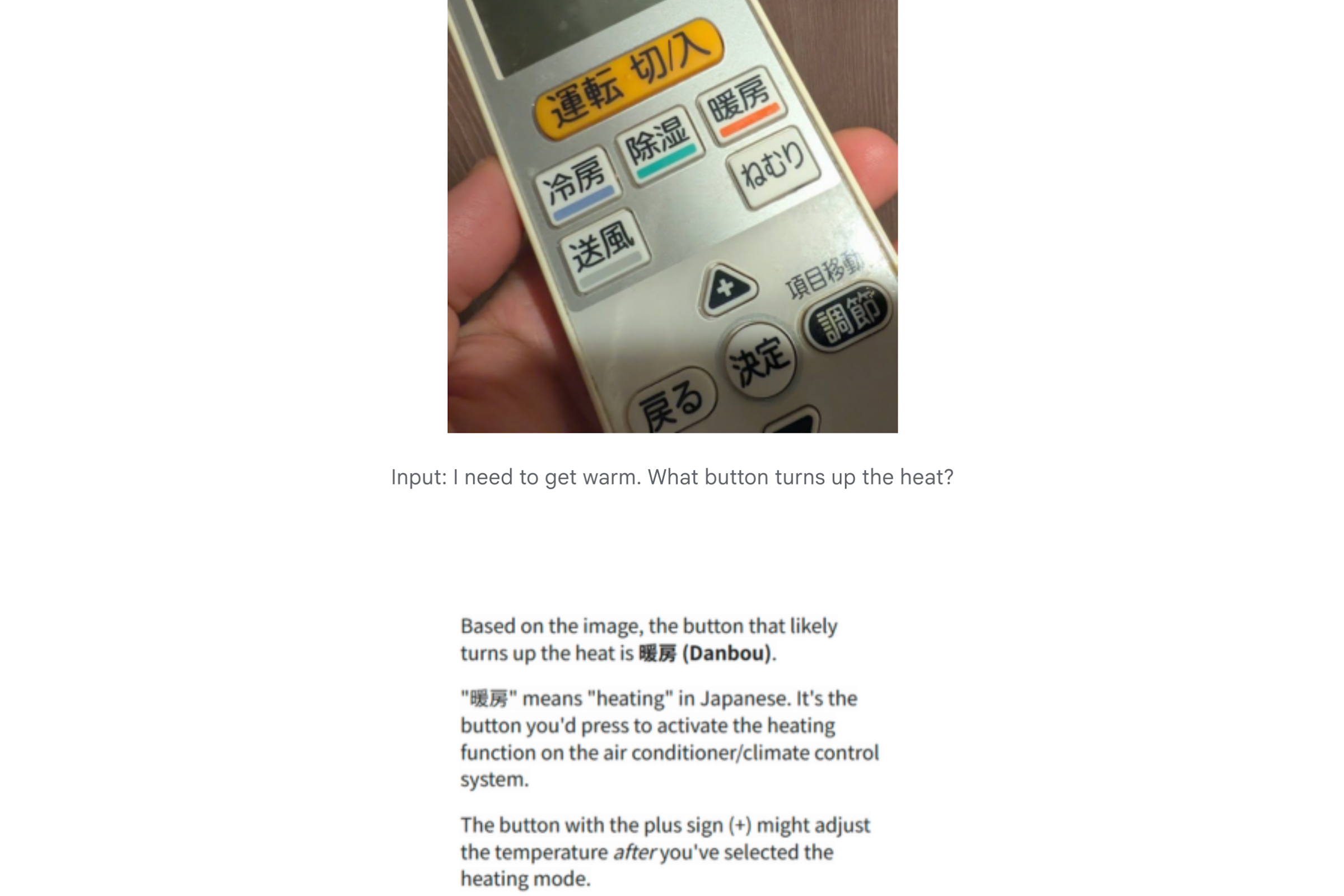

Like the Gemini 2.0 models, Gemma 3 is multimodal and can interpret text, images, and short videos. According to Google, Gemma 3 outperforms models such as DeepSeek V3, Meta’s Llama-405B, and OpenAI’s proprietary o3-mini in preliminary human preference evaluations on the LMArena leaderboard.

Extensive Context Window and Function Calling

Gemma 3 offers a context window of up to 128,000 tokens (32,000 for the 1B variant), enough to process roughly the equivalent of a 200-page book. While smaller than Gemini 2.0 Flash Lite’s million-token window, it remains substantial. For context, an average English word translates to approximately 1.3 tokens.
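The 200-page figure can be sanity-checked with a little arithmetic. The sketch below uses the 1.3 tokens-per-word ratio quoted above; the 500 words-per-page value is an assumption made for illustration, not a figure from Google, and the function names are hypothetical.

```python
TOKENS_PER_WORD = 1.3   # ratio quoted in the article
WORDS_PER_PAGE = 500    # assumption: a fairly dense printed page


def tokens_to_words(tokens, tokens_per_word=TOKENS_PER_WORD):
    """Approximate number of English words that fit in a token budget."""
    return tokens / tokens_per_word


def tokens_to_pages(tokens, words_per_page=WORDS_PER_PAGE):
    """Approximate number of pages, given an assumed words-per-page density."""
    return tokens_to_words(tokens) / words_per_page


words = tokens_to_words(128_000)
pages = tokens_to_pages(128_000)
print(f"{words:,.0f} words, about {pages:.0f} pages")
# prints: 98,462 words, about 197 pages
```

With a lower page density the same token budget stretches over more pages, so the 200-page figure is best read as a rough order of magnitude.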

Demonstration of visual understanding with Google Gemma 3 AI model.


Support for function calling and structured output lets Gemma 3 call external tools and data sources and carry out tasks as an automated agent, mirroring the way Gemini works across applications like Gmail and Docs.
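To make the idea concrete, here is a minimal sketch of the application side of function calling. It assumes the model has been prompted to reply with a JSON object containing a `name` and an `arguments` field; that schema, the `get_weather` tool, and the `dispatch` helper are all hypothetical and not part of any Gemma API.

```python
import json


def get_weather(city: str) -> dict:
    """Stand-in tool; a real app would query a weather service here."""
    return {"city": city, "forecast": "sunny"}


# Registry of tools the application is willing to run on the model's behalf.
TOOLS = {"get_weather": get_weather}


def dispatch(model_output: str) -> dict:
    """Parse a structured (JSON) reply and run the tool it names."""
    call = json.loads(model_output)
    name = call["name"]
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name}")
    return TOOLS[name](**call.get("arguments", {}))


# Hypothetical structured output from the model (assumed format).
model_output = '{"name": "get_weather", "arguments": {"city": "Paris"}}'
result = dispatch(model_output)
print(result)
```

Restricting execution to an explicit registry keeps the model from triggering arbitrary code; the tool's result would normally be sent back to the model so it can compose a final answer.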

Deployment Options and Availability

Gemma 3 can be deployed locally or through Google’s cloud platforms like Vertex AI. The models are accessible via Google AI Studio and third-party repositories like Hugging Face, Ollama, and Kaggle.
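As one illustration of local deployment, the sketch below sends a prompt to a model served by Ollama over its local HTTP API. It assumes Ollama is running on its default port and that a Gemma 3 model has already been pulled under the tag `gemma3:4b`; the tag and the helper names are assumptions, so adjust them to match your setup.

```python
import json
import urllib.request

# Assumption: Ollama's default local endpoint for one-shot generation.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt, model="gemma3:4b"):
    """Build the POST request; the model tag is an assumed local tag."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt, model="gemma3:4b"):
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model)) as resp:
        return json.loads(resp.read())["response"]
```

Calling `generate("Summarize Gemma 3 in one sentence.")` only works while the local server is running; the same request shape could be pointed at another host if the model is served elsewhere.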

Google Gemma 3 AI model benchmark.


Gemma 3 reflects a growing trend of companies developing both Large Language Models (LLMs) and Small Language Models (SLMs). Microsoft employs a similar strategy with its open-source Phi series. SLMs like Gemma and Phi are resource-efficient, making them suitable for mobile devices and offering lower latency for enhanced mobile application performance.

Conclusion

Google’s Gemma 3 represents a significant advancement in open-source AI, providing developers with powerful and adaptable models for a variety of applications. Its efficiency and multimodal capabilities position it as a strong contender in the evolving landscape of AI models, particularly for mobile and resource-constrained environments. The open-source nature of Gemma 3 fosters innovation and collaboration within the AI community, pushing the boundaries of what’s possible with accessible and efficient AI models.