Google has announced a series of significant upgrades to its Gemini AI assistant. The Gemini 2.0 Flash Thinking Experimental model now offers faster processing and can accept uploaded files as input. The most noteworthy addition, however, is Personalization, a new opt-in feature that draws on your Google Search history to tailor Gemini's responses.

This personalized experience will eventually extend beyond Search to other Google apps such as Photos and YouTube, echoing the personal-context features Apple has promised, but delayed, for Siri.

Search History Powers Personalized Gemini Responses

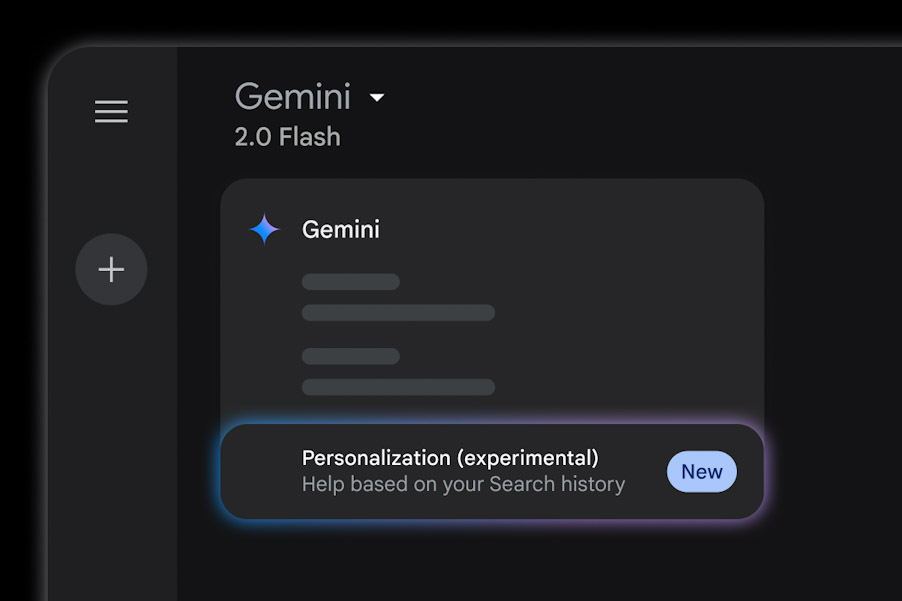

Gemini personalization feature.

With the Google Search integration, Gemini can offer more personalized recommendations. For example, if you ask about nearby cafes, Gemini can draw on your past searches for similar places and may include cafes you previously looked up in its suggestions.

Google explains in a blog post that this integration “will enable Gemini to provide more personalized insights, drawing from a broader understanding of your activities and preferences to deliver responses that truly resonate with you.”

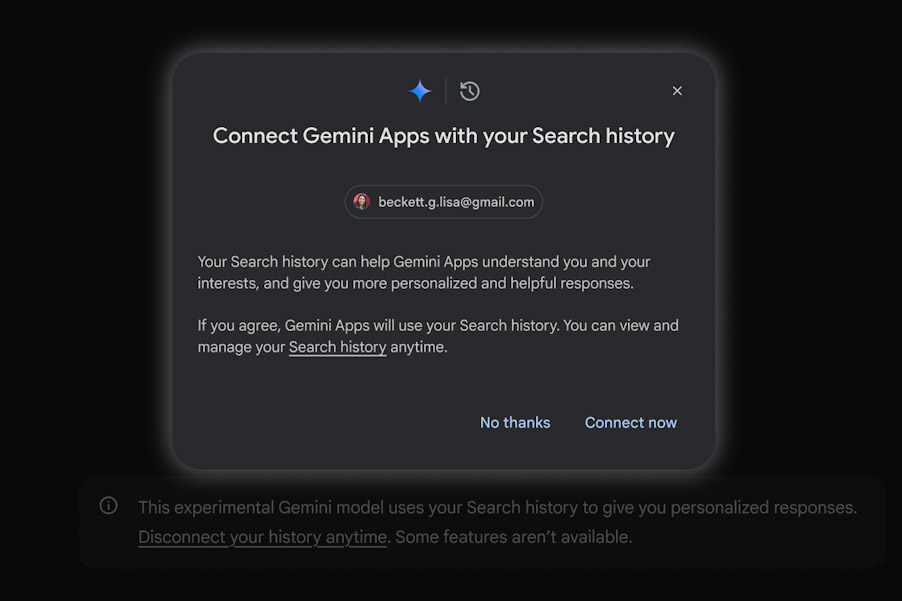

Giving Search history access to Gemini.

Personalization is available to free users and paid Gemini Advanced subscribers alike. It rolls out first on the web before expanding to mobile, supports more than 40 languages, and is planned for global availability.

While privacy concerns are understandable, Personalization is opt-in and includes several safeguards:

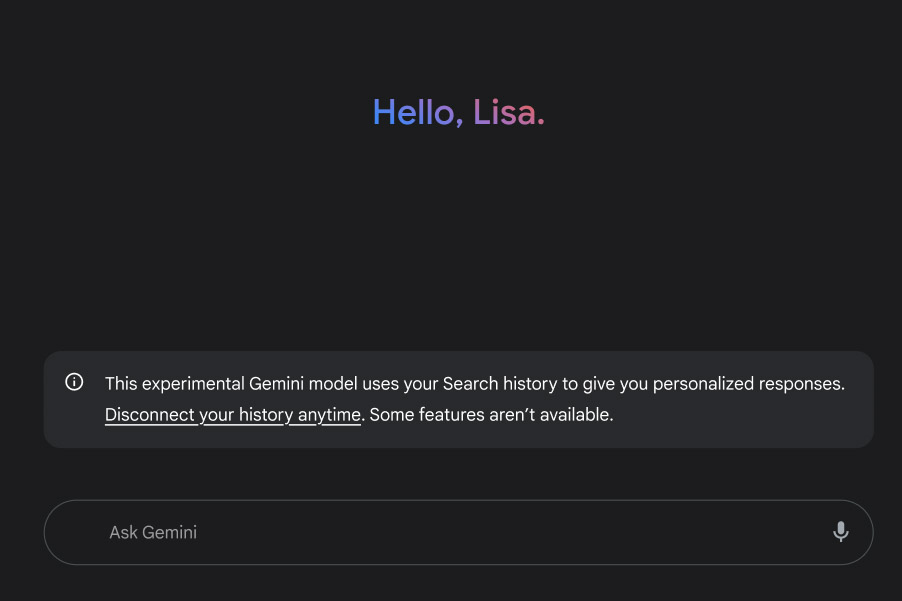

Warning banner in Gemini.

- It requires users to connect Gemini with their Search history, enable Personalization, and activate Web & App Activity.

- An in-chat banner allows users to quickly disconnect their Search history when Personalization is active.

- Gemini explicitly discloses the user data it utilizes, including saved information, previous chats, and Search history.

Furthermore, users can instruct Gemini to reference past chats for even more contextually relevant responses. This feature, currently exclusive to Advanced subscribers, will soon be available to all free users.

Expanding Gemini’s App Integration

Apps that work across Gemini.

Gemini’s “apps” system, formerly known as extensions, lets Gemini interact with both Google and third-party applications, so users can complete tasks across several apps without opening each one.

The Gemini 2.0 Flash Thinking Experimental model now includes access to this expanded app ecosystem, adding Google Photos and Notes to the existing roster of YouTube, Maps, Google Flights, Google Hotels, Keep, Drive, Docs, Calendar, and Gmail. Third-party services like WhatsApp and Spotify can also be integrated by linking them to a Google account.

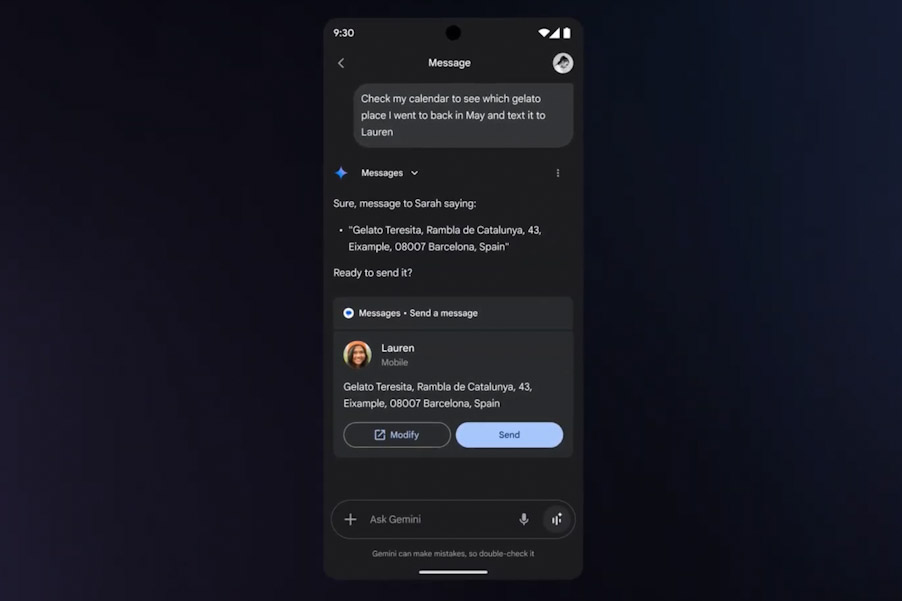

Beyond retrieving information and executing tasks, this system enables multi-step workflows. For example, a single voice command could instruct Gemini to find a recipe on YouTube, add ingredients to Google Keep, and locate a nearby grocery store. Google Photos integration is also on the horizon.

Multi-app workflow in Gemini.

Google explains that this enhanced model “can better tackle complex requests…because the new model can better reason over the overall request, break it down into distinct steps, and assess its own progress as it goes.”
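Google hasn't published how Gemini plans these workflows. The general pattern the quote describes can still be sketched in a few lines of Python: split a request into ordered steps, feed each step's result into the next, and check progress along the way. Everything below is hypothetical. The `Step`, `Progress`, and `execute_plan` names, the app labels, and the canned step functions are illustrative stand-ins for real app calls, not Gemini's API or internal design.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable, Optional

# Illustrative only: a generic "plan, then execute step by step" loop.
# This is NOT Gemini's implementation; all names and app labels are hypothetical.


@dataclass
class Step:
    app: str                     # hypothetical app label, e.g. "YouTube"
    goal: str                    # what this step is meant to accomplish
    run: Callable[[dict], dict]  # reads the shared context, returns new facts


@dataclass
class Progress:
    completed: list[str] = field(default_factory=list)  # apps whose step succeeded
    failed: Optional[str] = None                         # first failure, if any


def execute_plan(steps: list[Step]) -> tuple[dict, Progress]:
    """Run steps in order, passing results forward and stopping at the first failure."""
    context: dict = {}
    progress = Progress()
    for step in steps:
        try:
            context.update(step.run(context))
        except Exception as exc:  # record the failure instead of continuing blindly
            progress.failed = f"{step.app}: {exc!r}"
            break
        progress.completed.append(step.app)
    return context, progress


# Hypothetical step functions returning canned data in place of real app calls.
def find_recipe(ctx: dict) -> dict:
    return {"recipe": "banana bread", "ingredients": ["flour", "bananas", "eggs"]}


def add_to_list(ctx: dict) -> dict:
    # Depends on the previous step's output.
    return {"shopping_list": list(ctx["ingredients"])}


def find_store(ctx: dict) -> dict:
    return {"store": "placeholder grocery store"}


plan = [
    Step("YouTube", "find a recipe", find_recipe),
    Step("Keep", "add the ingredients to a list", add_to_list),
    Step("Maps", "locate a nearby grocery store", find_store),
]
context, progress = execute_plan(plan)
print(progress.completed)  # ['YouTube', 'Keep', 'Maps']
```

In this sketch, stopping at the first failed step is the simplest way to "assess progress." A real assistant might instead re-plan or ask the user for clarification, which the sketch does not attempt.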

The Gemini 2.0 Flash Thinking Experimental model also has an expanded context window of 1 million tokens. Models like Gemini process text as tokens, which are whole words or pieces of words, and an average English word works out to roughly 1.3 tokens. By that estimate, a 1-million-token window holds roughly 770,000 words. That is enough for Gemini to take in far more material at once and work through more complex problems.
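The context-window figure comes from simple arithmetic on the 1.3-tokens-per-word average. Here is a minimal sketch of that arithmetic. The helper names are hypothetical, and real token counts depend on the tokenizer and the text, so treat the results as ballpark estimates.

```python
# Ballpark conversions between tokens and English words, assuming the
# rule of thumb of ~1.3 tokens per average word (an approximation).
TOKENS_PER_WORD = 1.3


def words_that_fit(context_tokens: int, tokens_per_word: float = TOKENS_PER_WORD) -> int:
    """Approximate number of words that fit in a context window (rounded down)."""
    return int(context_tokens / tokens_per_word)


def tokens_for_words(word_count: int, tokens_per_word: float = TOKENS_PER_WORD) -> int:
    """Approximate number of tokens a text of word_count words consumes."""
    return round(word_count * tokens_per_word)


print(f"{words_that_fit(1_000_000):,}")  # 769,230
```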