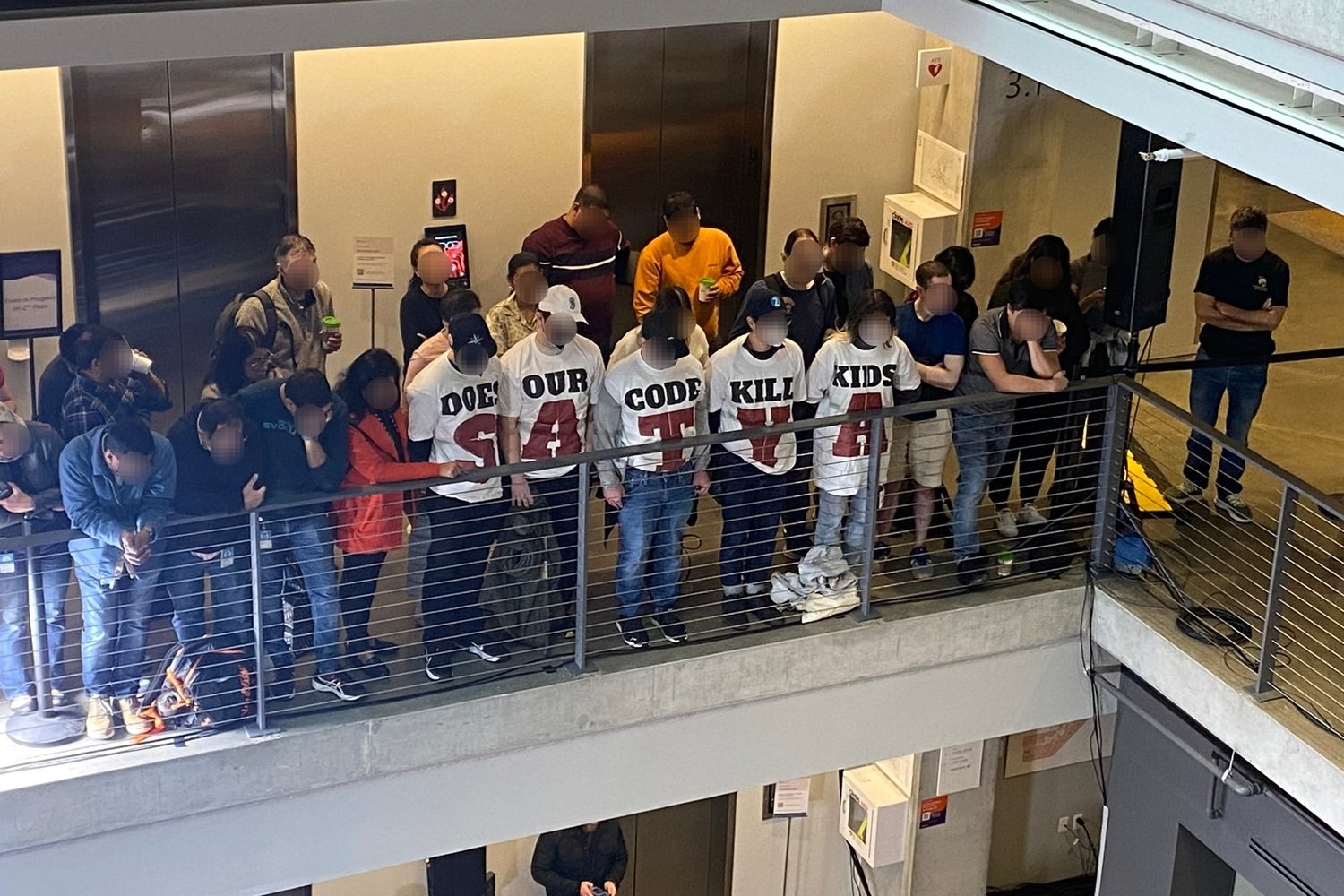

ChatGPT’s new 4o model consistently generates images of a generic, brown-haired white man with glasses when asked to depict itself as a human. This intriguing phenomenon, highlighted by AI researcher Daniel Paleka, persists across various requested styles, from manga to tarot cards. While the artistic style changes, the underlying image of this nondescript man remains constant.

The Default Human and AI Bias

This “default human” raises questions about the biases embedded within AI systems. While ChatGPT, as a machine learning model, lacks self-conception, its output reflects the biases present in its training data and in the programmers who created it. This isn’t a new issue; machine learning systems used in areas like crime prediction and facial recognition have been criticized for exhibiting biases against certain demographics, particularly Black individuals. Sexist biases are similarly prevalent, perpetuating stereotypes present in the training data. To see a female representation of ChatGPT, one has to specifically request it, reinforcing the notion of the white male as the default human.

Pixargpt

Exploring ChatGPT’s Self-Image

Paleka suggests several theories for this phenomenon, including a deliberate choice by OpenAI, an inside joke, or an emergent property of the training data. When questioned about its self-image, ChatGPT offers abstract descriptions, envisioning itself as a “glowing, ever-shifting entity made of flowing data streams” or a “mirror and collaborator—part library, part conversational partner.” These responses highlight the inherent difference between human self-perception and the simulated understanding of an LLM.

Platosgpt

Reflecting the User and Programmer

The differing responses to the same prompt underscore the nature of LLMs as reflections of both the user and the programmer. They are sophisticated word calculators, predicting desired outputs based on training data and user input. Somewhere in this complex process, a brown-haired white guy with glasses has emerged as the representative “person.” This observation highlights the importance of continued scrutiny and development to mitigate biases and ensure fairer representations within AI systems.

Conclusion

The curious case of ChatGPT’s default human reveals the complexities and potential biases embedded within AI. While these systems offer impressive capabilities, understanding their limitations and actively working to address inherent biases is crucial for responsible development and deployment. The ongoing conversation about AI ethics and fairness must continue to evolve alongside the technology itself.