Microsoft marked its 50th anniversary with a grand celebration featuring past and present CEOs, but the event was interrupted by an employee protest. Software engineer Ibtihal Aboussad publicly confronted Microsoft’s head of AI, Mustafa Suleyman, accusing the company of complicity in genocide through its technology sales to Israel.

Aboussad, a member of the AI Platform team, directly addressed Suleyman, stating, “Shame on you. You are a war profiteer. Stop using AI for genocide in our region. You have blood on your hands. All of Microsoft has blood on its hands.” After being escorted out, Aboussad reportedly disseminated a memo to internal distribution lists detailing her concerns.

Microsoft responded with a statement saying it provides many avenues for employees to make their voices heard, but asks that this be done in a way that does not disrupt the business. The company also used the event to showcase updates to its Copilot assistant, including new autonomous agent capabilities. These agents can carry out tasks in a web browser but still require user supervision because of limitations in speed, cost, and accuracy.

Aboussad’s memo highlighted the escalating death toll in Gaza and referenced Microsoft’s $133 million contract with the Israeli Ministry of Defense. She cited reports indicating a significant increase in the Israeli military’s use of Microsoft and OpenAI artificial intelligence, as well as the storage of sensitive data on Microsoft servers. The memo alleges that Microsoft’s AI powers critical Israeli military projects, including a “target bank” and Palestinian population registry, contributing to increased lethality in Gaza.

The tech industry’s relationship with the defense sector has evolved, particularly amid rising geopolitical tensions. Historically, tech employees often opposed military applications of their work, but recent layoffs and shifts in power within companies have made dissent more difficult. Companies like Palantir and Anduril have emerged as prominent players in the defense technology space.

Aboussad expressed her dismay at the use of her work for military purposes, stating, “I was excited to contribute to cutting-edge AI technology…for the good of humanity… I was not informed that Microsoft would sell my work to the Israeli military and government, with the purpose of spying on and murdering journalists, doctors, aid workers, and entire civilian families.”

A key concern regarding AI in warfare is that operators may over-rely on automated systems for attack planning. Reports suggest Israeli soldiers have used AI to identify targets rapidly, sometimes without adequately verifying its accuracy. The Signalgate scandal, in which senior U.S. officials discussed planned strikes in Yemen in a Signal group chat, further revealed instances of indiscriminate strikes and collateral damage authorized by military leadership.

As major tech companies explore military applications for AI, even defense tech CEOs such as Anduril’s Palmer Luckey acknowledge the ethical dilemma facing engineers whose work is repurposed for defense. Anduril employees understand the nature of their work, whereas engineers at larger tech firms may find their software used in ways they never intended.

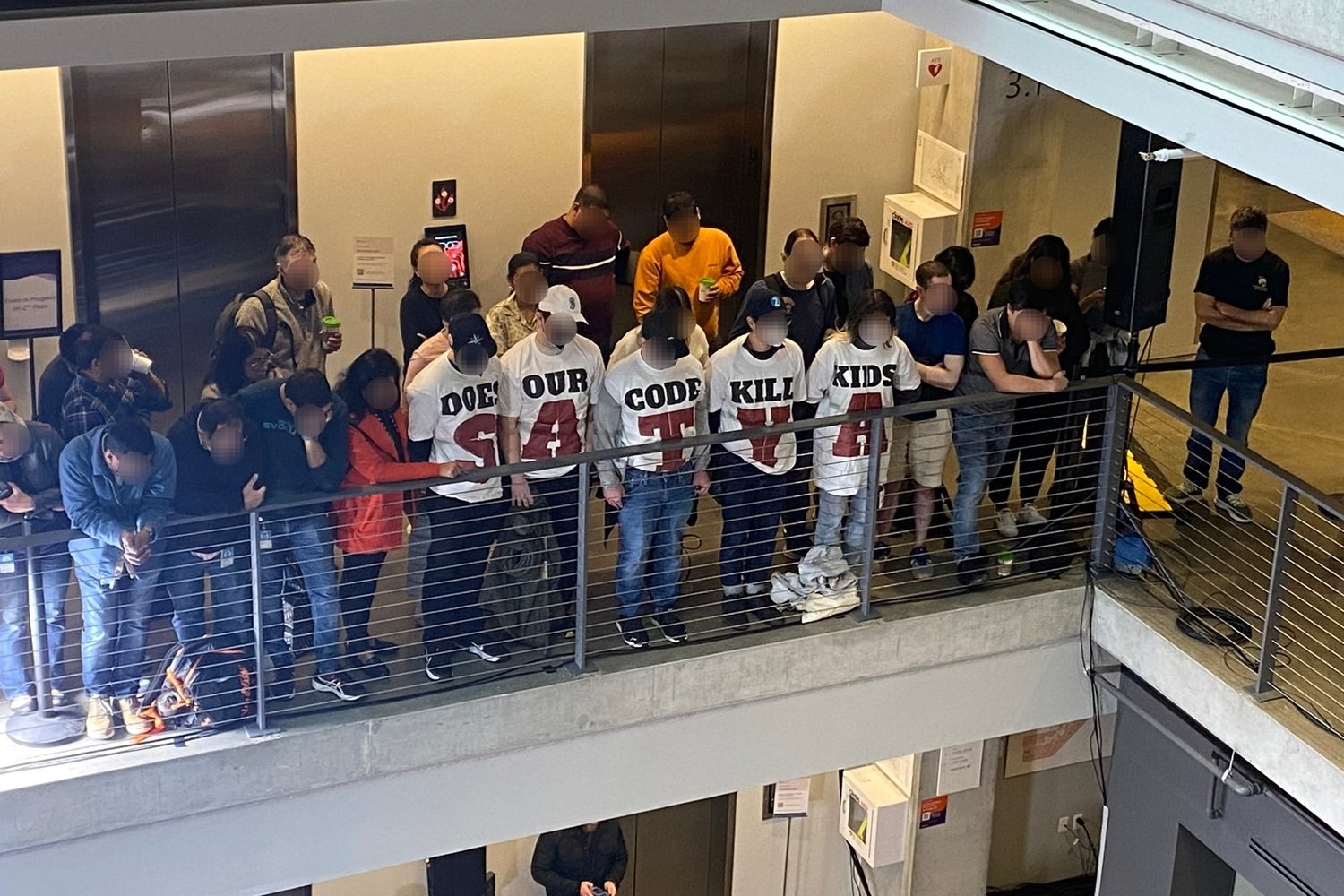

This incident follows previous protests at Microsoft over its Israeli military contracts. In February, employees were removed from a town hall after questioning CEO Satya Nadella about whether their code was being used to harm children.