The internet is abuzz with a curious new trend: prompting Google’s AI Overview feature to generate meanings for completely fabricated phrases. Historian Greg Jenner sparked the phenomenon by asking Google to explain the meaning of “You can’t lick a badger twice.” Surprisingly, the AI confidently provided a seemingly plausible explanation, suggesting it meant you can’t deceive someone twice after they’ve already been tricked. The only problem? This idiom didn’t exist before Jenner’s query.

This discovery has led to a wave of humorous experiments, with users prompting Google’s AI to interpret a range of nonsensical phrases. One example is “A squid in a vase will speak no ill,” which the AI took to mean that something removed from its natural environment cannot cause harm. Another, “You can take your dog to the beach but you can’t sail it to Switzerland,” was explained as a commentary on the difficulties of international pet travel.

However, this trick doesn’t always work. Some phrases don’t trigger the AI Overview feature at all. Cognitive scientist Gary Marcus, speaking to Wired, described the AI’s performance as “wildly inconsistent,” a characteristic he attributes to generative AI in general.

While entertaining, the phenomenon highlights the danger of over-relying on AI-generated information. Jenner cautioned that AI may favor statistically likely interpretations over factual accuracy, warning that “one of the key functions of Googling – the ability to factcheck a quote, verify a source, or track down something half remembered – will get so much harder if AI prefers to legitimate statistical possibilities over actual truth.”

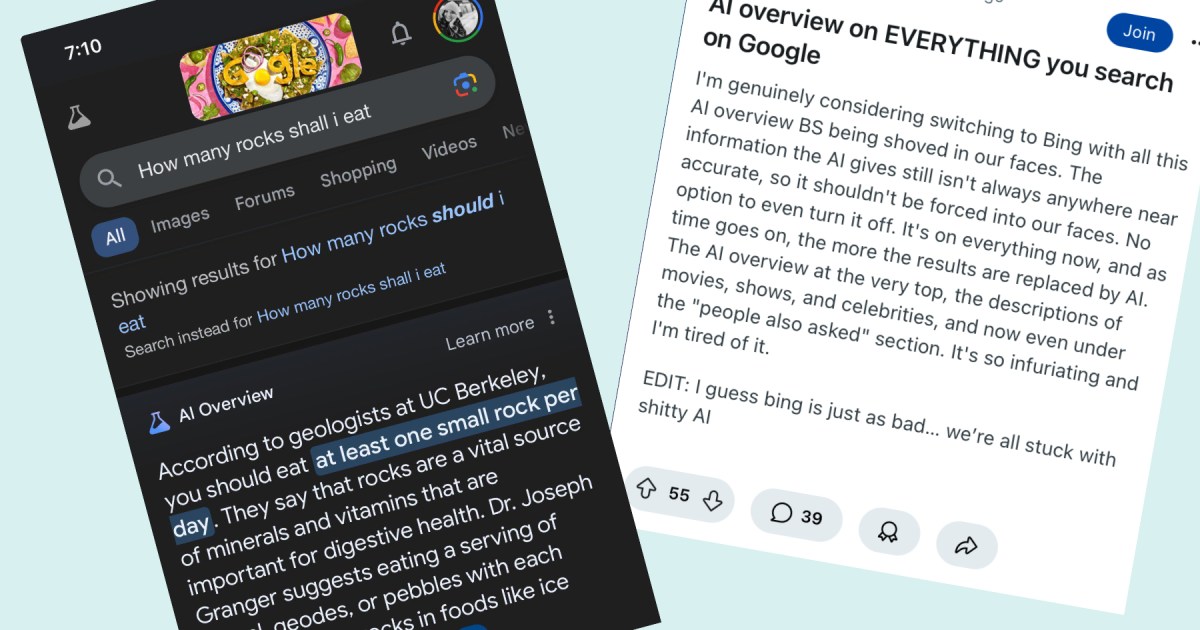

This isn’t the first time AI Overview’s limitations have been exposed. Upon its launch, the feature infamously suggested consuming one small rock daily and adding glue to pizza, though these recommendations were swiftly removed.

In a statement to MaagX, Google asserted that most AI Overviews provide helpful and factual information, emphasizing that it is still gathering feedback on the product.

For now, the trend serves as a reminder to double-check information presented in Google’s AI Overview box, as its accuracy is not guaranteed.