From self-driving cars to medical diagnoses, AI’s integration into daily life promises transformative benefits. However, these systems are not infallible. AI failures, whether due to design flaws, biased data, or malicious attacks, can have serious consequences. Understanding why these systems malfunction is critical, but the inherent complexity of AI often obscures the root causes. This article explores AI Psychiatry (AIP), a novel forensic tool designed to investigate and diagnose AI failures.

Pinpointing which of these causes is responsible is crucial to fixing the problem. Yet the “black box” nature of many AI systems makes this a daunting task, particularly for forensic investigators who lack access to proprietary system data.

The Challenge of AI Forensics

Consider a self-driving car that unexpectedly veers off course and crashes. Logs and sensor data might point to a faulty camera misinterpreting a road sign, but determining the underlying cause requires a deeper investigation. Could a hacker have exploited a software vulnerability to make the camera misbehave? Traditional forensic methods for cyber-physical systems often cannot fully investigate the AI component. Furthermore, advanced AIs constantly update their decision-making, which makes the most recent models especially hard to analyze. Researchers are working toward more transparent AI, but until that goal is reached, forensic tools like AIP are essential for understanding AI failures.

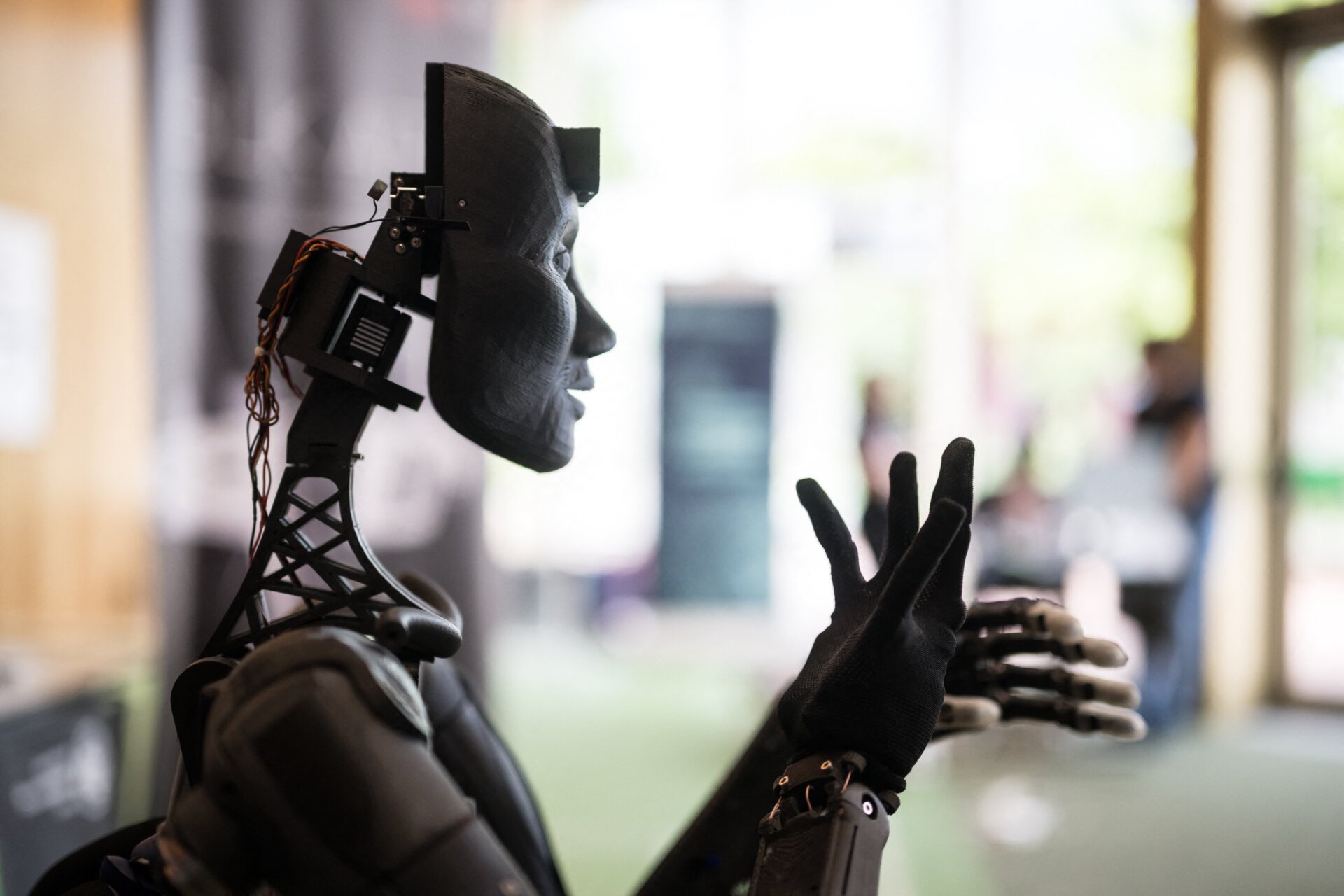

![]() Alt text: A conceptual image representing the complexity of AI systems and the challenge of understanding their failures.

Alt text: A conceptual image representing the complexity of AI systems and the challenge of understanding their failures.

Introducing AI Psychiatry

AIP uses forensic algorithms to isolate the data behind an AI’s decision-making. It then reassembles those pieces into a functional model that mirrors the original, allowing investigators to “reanimate” the AI in a controlled environment and test it with various inputs, including malicious ones, to expose harmful or hidden behaviors. The process starts with a memory image: a snapshot of the AI’s operational state at the time of failure. This image holds vital clues about the AI’s internal workings and decision-making. AIP extracts the AI model from the memory image, dissects it, and loads it into a secure environment for testing.
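To make the final testing step more concrete, here is a minimal Python sketch. It is not AIP’s code. It assumes a toy linear classifier whose weights have already been recovered, and a hypothetical “trigger” that consists of overwriting a single input feature. The idea it illustrates is differential testing: run the rehosted model on the same inputs with and without a candidate trigger, then measure how often its decision flips. A backdoored model flips far more often than a clean one. All function names, weights and inputs are invented for illustration.

```python
# Minimal sketch (not AIP's implementation): probe a rehosted model for
# trigger-dependent behavior. The weights, inputs and trigger below are
# hypothetical values chosen for illustration.
from typing import Callable, List, Sequence

Vector = List[float]


def make_linear_classifier(
    weights: Sequence[Sequence[float]], biases: Sequence[float]
) -> Callable[[Vector], int]:
    """Rebuild a tiny linear classifier from (hypothetically) recovered parameters."""

    def predict(x: Vector) -> int:
        # One score per class: dot(w, x) + b; the highest score wins.
        scores = [sum(wi * xi for wi, xi in zip(w, x)) + b for w, b in zip(weights, biases)]
        return max(range(len(scores)), key=scores.__getitem__)

    return predict


def stamp_trigger(x: Vector, index: int, value: float) -> Vector:
    """Return a copy of x with one feature overwritten by the candidate trigger."""
    stamped = list(x)
    stamped[index] = value
    return stamped


def trigger_flip_rate(
    predict: Callable[[Vector], int], inputs: Sequence[Vector], index: int, value: float
) -> float:
    """Fraction of inputs whose predicted class changes once the trigger is applied."""
    flips = sum(predict(x) != predict(stamp_trigger(x, index, value)) for x in inputs)
    return flips / len(inputs)


# Clean inputs never use feature 2; the backdoored model weights it heavily toward class 1.
inputs = [[2.0, 1.0, 0.0], [3.0, 0.5, 0.0], [0.5, 2.0, 0.0], [4.0, 1.0, 0.0]]
clean = make_linear_classifier([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]], [0.0, 0.0])
backdoored = make_linear_classifier([[1.0, 0.0, 0.0], [0.0, 1.0, 10.0]], [0.0, 0.0])

print(trigger_flip_rate(clean, inputs, index=2, value=1.0))       # 0.0
print(trigger_flip_rate(backdoored, inputs, index=2, value=1.0))  # 0.75
```

Real models and triggers are far more complex, and an investigator typically would not know the trigger in advance. The sketch only shows why rehosting matters: once a recovered model runs again, it can be probed directly with crafted inputs.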

In tests on 30 AI models, 24 of which had been intentionally “backdoored” to produce incorrect outputs when presented with specific triggers, AIP successfully recovered, rehosted, and tested every model, including models used in real-world applications such as street sign recognition for autonomous vehicles. These results suggest that AIP can solve the digital mystery behind an AI failure where traditional methods fall short. Even if AIP finds no vulnerability in the AI itself, it lets investigators rule out the AI and focus on other potential causes, such as faulty hardware.

Beyond Autonomous Vehicles

AIP’s core algorithm is generic: it targets the universal components that AI models rely on to make decisions. This makes it adaptable to a wide range of models built with popular AI development frameworks. Investigators can use AIP to assess a model without prior knowledge of its architecture. Whether the subject is a product recommendation bot or the control system for a fleet of autonomous drones, AIP can recover and rehost the AI for comprehensive analysis. AIP is open source, so any investigator can use it.

Moreover, AIP can serve as a valuable tool for preemptive AI audits. As government agencies increasingly integrate AI into their workflows, AI audits are becoming a crucial oversight requirement. AIP offers auditors a consistent forensic methodology applicable across diverse AI platforms and deployments.

Figure: Diagram illustrating the process of AI Psychiatry, from memory image acquisition to rehosting and testing.

AI Psychiatry represents a significant advance in AI forensics. By enabling in-depth analysis of AI failures, it can promote greater transparency, accountability, and, ultimately, trust in these increasingly pervasive systems. That benefits both AI developers and the people affected by AI-driven decisions.

David Oygenblik, Ph.D. Student in Electrical and Computer Engineering, Georgia Institute of Technology and Brendan Saltaformaggio, Associate Professor of Cybersecurity and Privacy, and Electrical and Computer Engineering, Georgia Institute of Technology

This article is republished from The Conversation under a Creative Commons license. Read the original article.