Google DeepMind has unveiled Gemini 2.0, the next generation of its AI model, less than a year after the debut of Gemini 1.5. This new model boasts native image and audio output, paving the way for “new AI agents that bring us closer to our vision of a universal assistant,” as stated in Google’s official announcement.

Gemini 2.0 logo

Gemini 2.0 is available immediately across all subscription tiers, including the free version. As Google's flagship AI model, it is expected to power AI features across Google's product ecosystem in the near future. Much as OpenAI has done with its models, Google is first releasing Gemini 2.0 as an "experimental preview": a smaller, less powerful version than the full model it plans to bring to Google Gemini later.

In an interview with The Verge, DeepMind CEO Demis Hassabis said the current preview performs about as well as the existing Pro model (Gemini 1.5 Pro). That makes it a full tier more capable at the same cost, efficiency, and speed. This lightweight preview model, Gemini 2.0 Flash, is also being released to developers.

Gemini 2.0: Advancing Google’s AI Agent Agenda

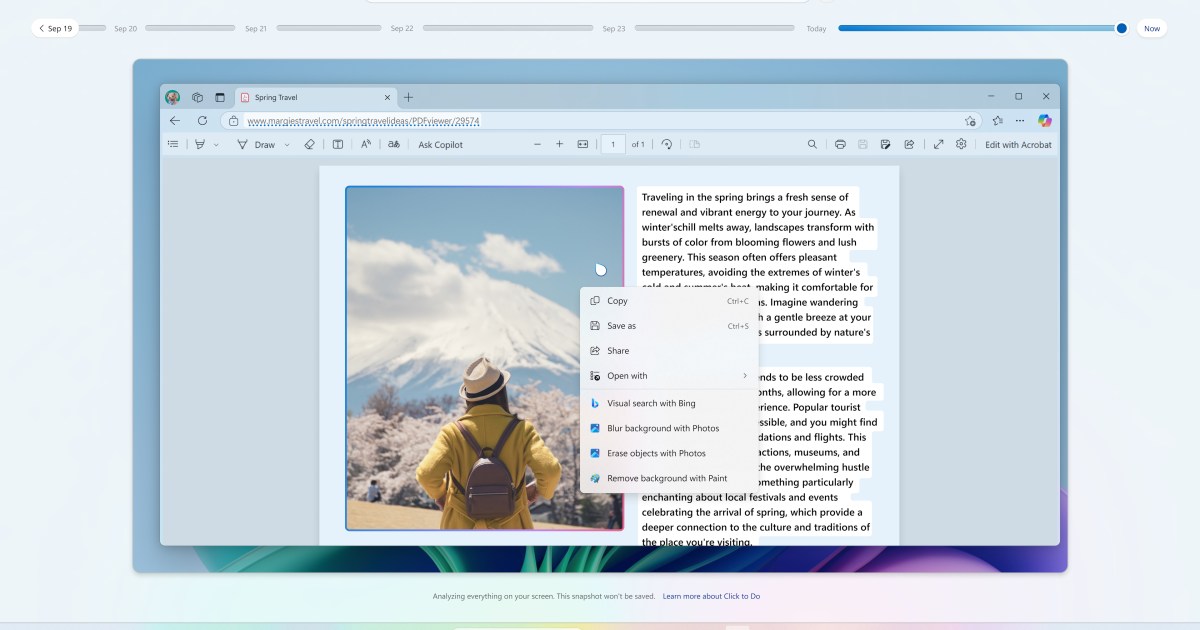

With this more powerful Gemini model, Google advances its AI agent strategy, in which smaller, specialized models carry out actions autonomously on the user's behalf. Gemini 2.0 is expected to significantly accelerate Google's Project Astra, which combines Gemini Live's conversational AI with real-time video and image analysis to give users contextual information about their surroundings, including through a smart glasses interface.

Introducing Project Mariner and Other New AI Tools

Google also announced Project Mariner, its counterpart to Anthropic's Computer Use. This Chrome extension takes control of the browser, mimicking human interaction through keystrokes and mouse clicks. Google is also launching Jules, an AI coding assistant designed to help developers find and fix inefficient code. A third tool, Deep Research, works much like Perplexity AI and ChatGPT Search, generating in-depth reports on topics the user asks it to research online.

Deep Research is currently available to English-language Gemini Advanced subscribers. It first drafts a multi-step research plan for the user to approve. Once the plan is approved, the research agent searches the web thoroughly on the given topic, including related subtopics. Finally, it compiles a report that summarizes the key findings and links to the sources it cites. The feature is accessible through the chatbot's model selection menu on the Gemini homepage.

Conclusion: A Leap Forward in AI Capabilities

Gemini 2.0 marks a significant advancement in Google’s AI capabilities. With its enhanced features, including native image and audio output, and its integration into various projects like Astra and Mariner, Google is positioning itself at the forefront of AI innovation. The introduction of tools like Jules and Deep Research further demonstrates Google’s commitment to developing practical AI applications for diverse user needs. This new model promises a more integrated and powerful AI experience, bringing Google closer to its vision of a universal AI assistant.