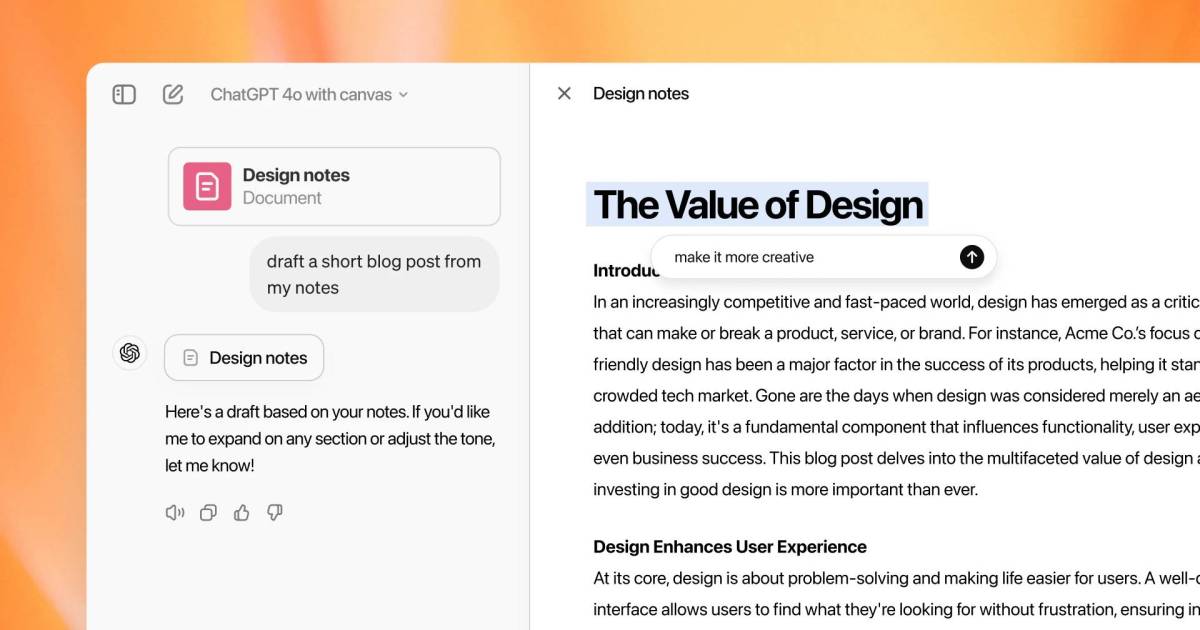

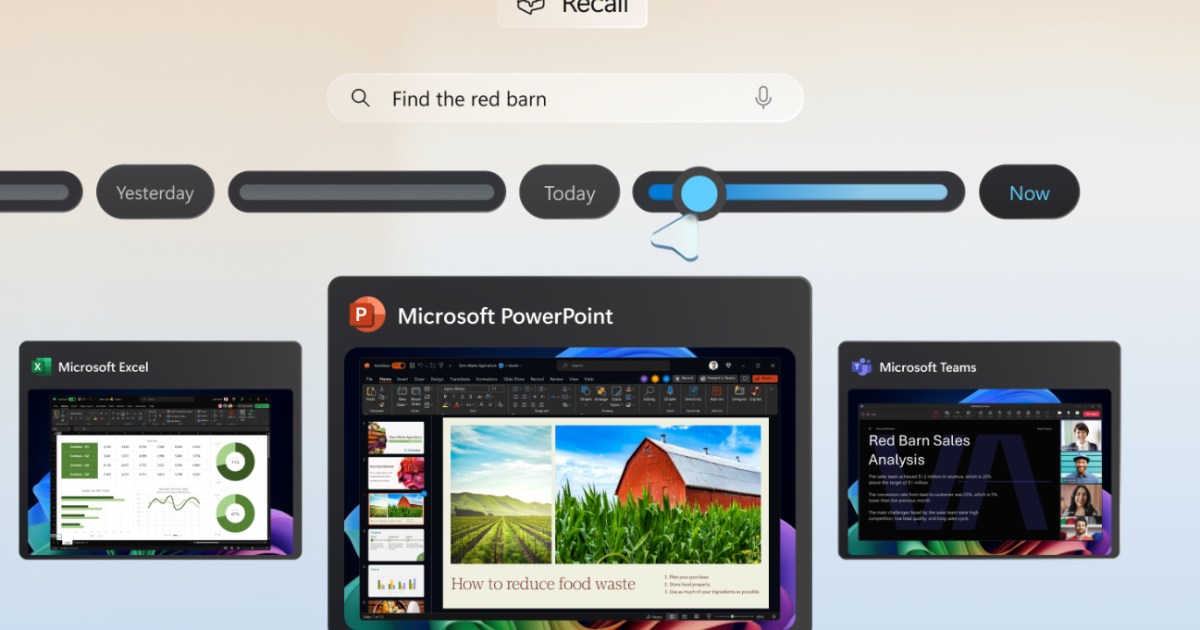

The rapid growth of generative AI tools such as ChatGPT has brought a significant increase in resource consumption, particularly of water and electricity. A recent study conducted by The Washington Post and researchers from the University of California, Riverside sheds light on the substantial resources OpenAI’s chatbot requires for even its most basic functions.

Server racks in a data center. Generative AI models like ChatGPT require significant resources to operate.

Water Consumption: Location Matters

The amount of water needed for ChatGPT to generate a 100-word email varies depending on the location of the data center and its proximity to the user. In areas where water is scarce and electricity is cheap, data centers tend to rely more on electricity-powered air conditioning for cooling, which lowers their direct water use. For example, generating a 100-word email in Texas consumes an estimated 235 milliliters of water. The same task performed in Washington requires significantly more: approximately 1,408 milliliters, or almost a liter and a half.
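To put the two per-email figures side by side, here is a minimal Python sketch of the arithmetic. The per-email values come from the estimates quoted above. The usage pattern of one email per week for a year is an assumption chosen purely for illustration, and the function name `annual_water_liters` is hypothetical.

```python
# Per-email water estimates quoted in the article, in milliliters.
WATER_ML_PER_EMAIL = {"Texas": 235, "Washington": 1408}

# Assumption for illustration only: one 100-word email per week for a year.
EMAILS_PER_YEAR = 52


def annual_water_liters(ml_per_email: float, emails: int = EMAILS_PER_YEAR) -> float:
    """Scale a per-email estimate (mL) to a yearly total (L)."""
    return ml_per_email * emails / 1000


# How many times more water the Washington estimate is than the Texas one.
ratio = WATER_ML_PER_EMAIL["Washington"] / WATER_ML_PER_EMAIL["Texas"]
print(f"Washington uses about {ratio:.1f}x the water of Texas per email")

for state, ml in WATER_ML_PER_EMAIL.items():
    print(f"{state}: {annual_water_liters(ml):.1f} L per user per year")
```

Under that assumed usage, the roughly sixfold gap per email becomes a difference of about 61 liters per user per year between the two locations.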

The Shift to Liquid Cooling

The increasing size and density of data centers, driven by the rise of generative AI, have pushed air-based cooling systems to their limits. Consequently, many AI data centers are transitioning to liquid-cooling methods. These systems pump large volumes of water past server stacks to absorb heat, which is then dissipated through cooling towers.

Electricity Consumption: A Growing Concern

ChatGPT’s electricity demands are also substantial. According to The Washington Post, generating a 100-word email using ChatGPT consumes enough electricity to power more than a dozen LED lightbulbs for an hour. To illustrate the scale of this consumption, if just one-tenth of Americans used ChatGPT to write a 100-word email weekly for a year, the total electricity used would equal the power consumed over 20 days by every household in Washington, D.C., a city with approximately 670,000 residents.

The Future of Resource Demands

The resource demands of AI are not expected to fall anytime soon and are likely to keep growing. Meta, for instance, required 22 million liters of water to train its Llama 3.1 models. Court records reveal that Google’s data centers in The Dalles, Oregon, consume nearly a quarter of the town’s available water. Similarly, xAI’s new supercluster in Memphis already demands 150 megawatts of electricity from the local utility company, enough to power approximately 30,000 homes.

Conclusion

The environmental impact of AI, specifically its water and electricity consumption, is a growing concern. As AI models become more complex and their usage expands, addressing these resource challenges will be crucial for sustainable development. The ongoing shift to more efficient cooling systems and the exploration of renewable energy sources for data centers are critical steps towards mitigating the environmental footprint of AI.