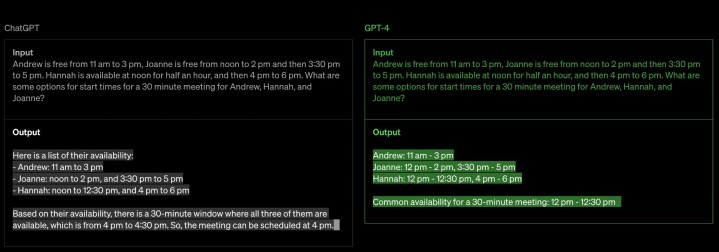

The release of ChatGPT, initially powered by the GPT-3.5 large language model, amazed users with its natural language capabilities. However, the arrival of GPT-4 raised the bar considerably for what AI could do, prompting some to view it as a glimpse of artificial general intelligence (AGI).

What is GPT-4?

GPT-4 is OpenAI’s cutting-edge large language model, and GPT-4o is its latest iteration. It represents a significant advance over GPT-3.5, the model ChatGPT originally ran on. GPT stands for Generative Pre-trained Transformer, a deep learning architecture that uses artificial neural networks to generate human-like text.

OpenAI highlights three key improvements in GPT-4 over its predecessor: greater creativity, the ability to accept images as input, and a longer context window. GPT-4 is better both at producing creative work and at collaborating with users on it, including composing music, writing screenplays, producing technical writing, and even adapting to a user’s writing style.

Image used with permission by copyright holder


The expanded context window adds further to GPT-4’s capabilities. Current versions of GPT-4 can process up to 128,000 tokens of text, which makes it practical to work with content from provided web links, produce long-form writing, and sustain extended conversations.
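Because the limit is counted in tokens rather than words or characters, a quick way to sanity-check whether a document will fit is to estimate its token count. The sketch below relies on a commonly cited rule of thumb that one token is roughly four characters of English text; that ratio, the 4,000-token reply budget, and the function names are assumptions for illustration, and exact counts require the model’s own tokenizer (for example, OpenAI’s `tiktoken` library).

```python
# Assumed rule of thumb: roughly 4 characters of English text per token.
CHARS_PER_TOKEN = 4
CONTEXT_WINDOW = 128_000  # tokens, per the figure quoted above


def estimate_tokens(text: str) -> int:
    """Rough token estimate; a real tokenizer can give a noticeably different count."""
    # Ceiling division, so any non-empty text counts as at least one token.
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(text: str, reserved_for_reply: int = 4_000) -> bool:
    """True if the estimated prompt plus a reply budget stays within the window."""
    return estimate_tokens(text) + reserved_for_reply <= CONTEXT_WINDOW


if __name__ == "__main__":
    long_document = "word " * 50_000  # 250,000 characters of filler text
    print(estimate_tokens(long_document))  # 62500
    print(fits_in_context(long_document))  # True
```

Reserving part of the window for the reply matters because the model’s answer also consumes tokens from the same context.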

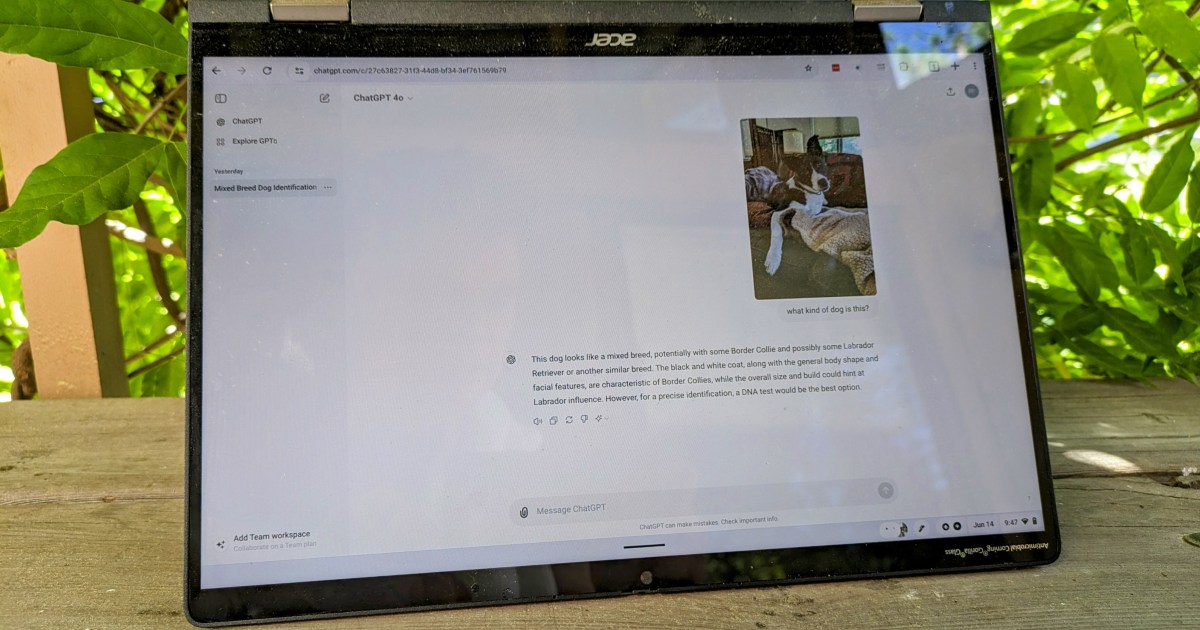

Unlike ChatGPT’s earlier versions, GPT-4 can analyze uploaded images. For example, it can identify potential recipes based on a picture of ingredients. However, it currently lacks the ability to analyze video clips.

OpenAI also emphasizes GPT-4’s improved safety: in its internal testing, GPT-4 was 40% more likely than GPT-3.5 to produce factual responses and 82% less likely to respond to requests for disallowed content. OpenAI attributes these gains to training with human feedback and to work with more than 50 experts in AI safety and security.

Early users showcased GPT-4’s remarkable capabilities with feats ranging from inventing new languages and devising escape plans to generating complex app animations. One user reportedly had GPT-4 build a working version of Pong in HTML and JavaScript in just 60 seconds.

Accessing GPT-4

Jacob Roach / MaagX


GPT-4 is available on every ChatGPT tier, including the free one. Free users get limited GPT-4o access (approximately 80 chats in a 3-hour window), after which ChatGPT switches to the less powerful GPT-4o mini until the limit resets. Upgrading to ChatGPT Plus ($20/month) provides expanded GPT-4 access and DALL-E image generation. To upgrade, click “Upgrade to Plus” in the ChatGPT sidebar and enter your payment information; you can then switch between GPT-4 and older models.
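To make the fallback behavior concrete, here is a hypothetical Python sketch of a usage cap that routes chats to a smaller model once the limit is reached. It is not OpenAI’s implementation: the rolling-window design, the `ModelRouter` class, and its method names are assumptions for illustration, with the 80-chat and 3-hour figures taken from the paragraph above.

```python
from collections import deque

# Hypothetical figures from the paragraph above; real limits change over time.
WINDOW_SECONDS = 3 * 60 * 60  # 3-hour rolling window
MESSAGE_LIMIT = 80            # approximate number of full-model chats per window


class ModelRouter:
    """Illustrative usage cap: full model until the limit, then a fallback model."""

    def __init__(self, limit: int = MESSAGE_LIMIT, window: float = WINDOW_SECONDS):
        self.limit = limit
        self.window = window
        self.recent = deque()  # timestamps of chats served by the full model

    def pick_model(self, now: float) -> str:
        # Drop timestamps that have aged out of the rolling window.
        while self.recent and now - self.recent[0] >= self.window:
            self.recent.popleft()
        if len(self.recent) < self.limit:
            self.recent.append(now)
            return "gpt-4o"
        return "gpt-4o-mini"  # fallback until older chats age out


if __name__ == "__main__":
    router = ModelRouter(limit=2, window=10)
    print([router.pick_model(t) for t in (0, 1, 2, 10)])
    # ['gpt-4o', 'gpt-4o', 'gpt-4o-mini', 'gpt-4o']
```

A rolling window frees up capacity gradually as old chats expire; a fixed window that resets all at once would match the “until the limit resets” description equally well.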

Free alternatives for trying GPT-4 include Microsoft’s Bing Chat (now Copilot), which is built on GPT-4, though it lacks some features and is integrated with Microsoft’s own technology. It offers limited free usage (15 chats per session, 150 sessions per day). Other platforms, such as Quora, also use GPT-4.

GPT-4 Release and Versions

Shutterstock


GPT-4 was officially announced on March 14, 2023 (as confirmed by Microsoft) and initially became available through ChatGPT Plus and Microsoft Copilot. It is also accessible as an API that developers can integrate into applications and services. Early adopters include Duolingo, Be My Eyes, Stripe, and Khan Academy. The first public demonstration was livestreamed on YouTube.

GPT-4o mini, a smaller, streamlined version of GPT-4o, launched in July 2024. It replaced GPT-3.5, the model ChatGPT was originally built on, as the model ChatGPT falls back to once a user reaches the GPT-4o usage limit. It outperforms similarly sized models on reasoning benchmarks, and compared with GPT-3.5 it offers better input comprehension, stronger safety measures, more concise answers, and lower operating costs.

The GPT-4 API is available to developers who have a payment history with OpenAI and offers several GPT-4 versions alongside legacy GPT-3.5 models. GPT-3.5 remains available to developers for now, but OpenAI plans to discontinue it eventually. Although the API is aimed mainly at app development, it also powers consumer services such as Plexamp’s ChatGPT integration, which requires users to supply their own API key.
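Under the hood, an API call is an HTTPS POST with a JSON body. The Python sketch below uses only the standard library to build a request for OpenAI’s Chat Completions endpoint; the model name `gpt-4o`, the helper names, and reading the key from an `OPENAI_API_KEY` environment variable are illustrative choices, and OpenAI’s API reference remains the authority on current parameters.

```python
import json
import os
import urllib.request

# Chat Completions endpoint from OpenAI's public API reference.
API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(prompt: str, api_key: str, model: str = "gpt-4o") -> urllib.request.Request:
    """Build (but do not send) a request containing a single user message."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Each developer (or app user, as with Plexamp) supplies their own key.
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the text of the first reply choice."""
    with urllib.request.urlopen(request) as response:
        payload = json.load(response)
    return payload["choices"][0]["message"]["content"]


if __name__ == "__main__":
    key = os.environ.get("OPENAI_API_KEY")
    if key:  # only hit the network when a key is configured
        print(send(build_chat_request("Say hello in French.", key)))
```

Keeping request construction separate from sending makes it easy to inspect or log the payload without spending API credits.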

Performance Concerns and Visual Input

Despite the initial acclaim, some users reported that GPT-4’s performance declined over time. OpenAI initially attributed this to users becoming more aware of the model’s shortcomings, but a study comparing the March and June 2023 versions found a significant drop in accuracy on some tasks. Further concerns arose in November 2024, when benchmarks reportedly showed performance declines, although response times improved.

Visual input, one of GPT-4’s key features, lets ChatGPT Plus process and interpret images, enabling multimodal interaction. Uploading an image works just like uploading a document: click the paperclip icon next to the message box.
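Developers can send images to GPT-4o through the Chat Completions API by mixing text and image parts in one message. This sketch assumes the base64 data-URL form of the `image_url` content part; the function name and the placeholder bytes are hypothetical, and a real app would read the photo from disk.

```python
import base64
import json


def build_vision_message(question: str, image_bytes: bytes, mime_type: str = "image/jpeg") -> dict:
    """Package a text question and an image as one user message.

    The image is embedded inline as a base64 data URL, one of the ways
    the Chat Completions API accepts image input.
    """
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": question},
            {"type": "image_url", "image_url": {"url": f"data:{mime_type};base64,{encoded}"}},
        ],
    }


if __name__ == "__main__":
    # Placeholder bytes stand in for a real photo of ingredients (hypothetical).
    fake_photo = b"\xff\xd8\xff\xe0placeholder"
    message = build_vision_message("What could I cook with these ingredients?", fake_photo)
    request_body = {"model": "gpt-4o", "messages": [message]}
    print(json.dumps(request_body)[:120])
```

The resulting message slots into the `messages` list of an ordinary chat request, so text-only and image-bearing conversations share the same request shape.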

Limitations and Future Developments

OpenAI acknowledges that GPT-4, despite its advancements, still has limitations, including social biases, hallucinations, and susceptibility to adversarial prompts. While it is not perfect, OpenAI asserts that GPT-4 is less prone to fabricating information than earlier models.

GPT-4’s training data cutoff is December 2023 (October 2023 for GPT-4o and 4o mini), but its web search capabilities enable access to more recent information. GPT-4o is the latest GPT-4 iteration, with GPT-5 still anticipated.