The rapid advancement of artificial intelligence (AI) has sparked both excitement and concern within the tech community. A group of current and former employees of leading AI companies, including OpenAI, Google DeepMind, and Anthropic, has issued an open letter highlighting the “serious risks” associated with unchecked AI development. The signatories warn of misuse, the worsening of existing inequalities, manipulation of information and the spread of disinformation, and even the “loss of control of autonomous AI systems potentially resulting in human extinction.”

Mitigating the Risks of AI

While acknowledging the transformative potential of AI, the signatories emphasize the need for a robust oversight framework. They believe that mitigating these risks requires a collaborative effort among the scientific community, legislators, and the public. However, they express concern that “AI companies have strong financial incentives to avoid effective oversight” and argue that the companies cannot be relied upon to manage the technology’s development responsibly on their own.

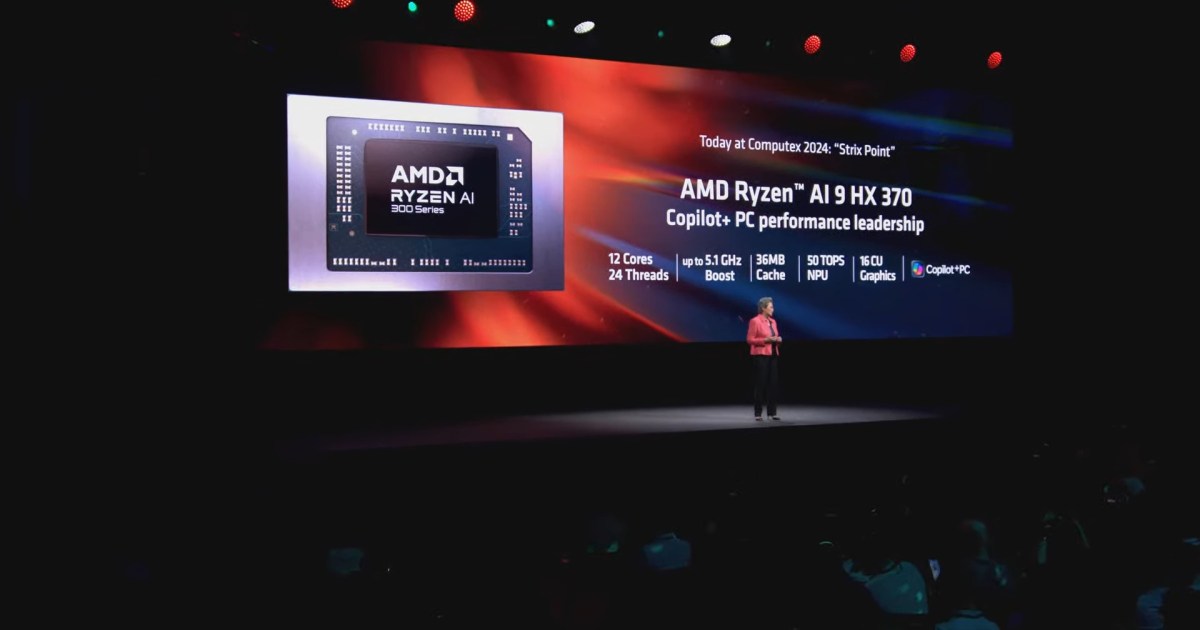

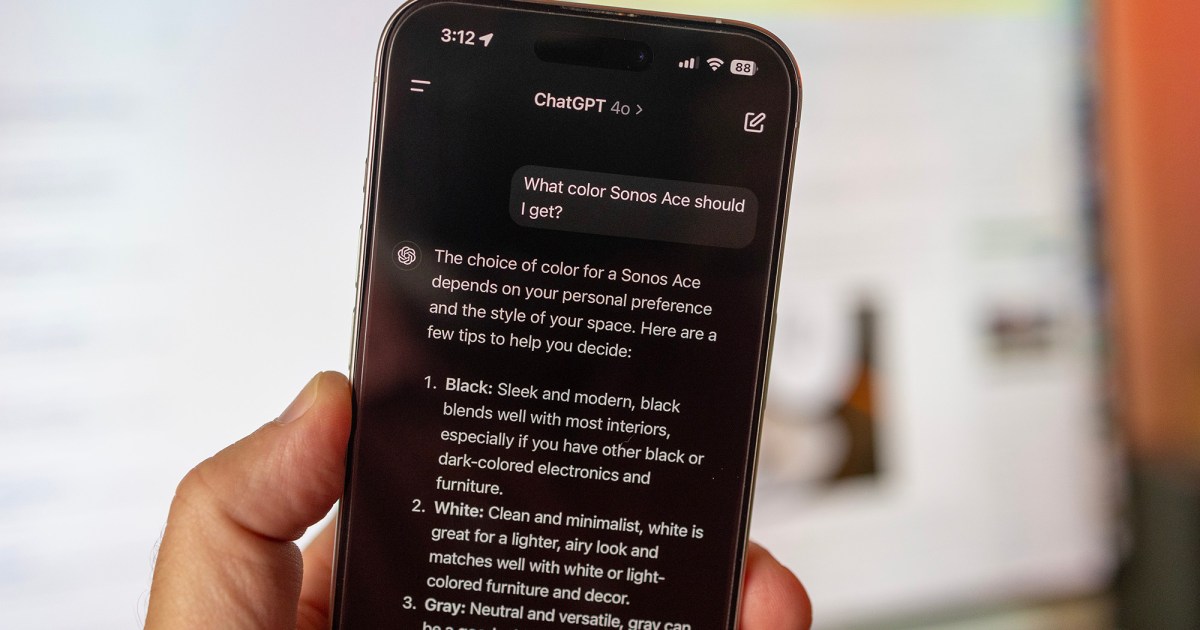

Since the launch of ChatGPT in late 2022, generative AI has reshaped the computing world. Industry giants like Google Cloud, Amazon Web Services (AWS), Oracle, and Microsoft Azure are at the forefront of what is projected to be a trillion-dollar industry by 2032. McKinsey’s research indicates that by March 2024, nearly 75% of organizations had incorporated AI in some capacity. Microsoft’s Work Trend Index survey further reports that 75% of office workers already use AI in their daily tasks.

The “Move Fast and Break Things” Mentality

Despite this widespread adoption, concerns remain about the rapid pace of development. As former OpenAI employee Daniel Kokotajlo pointed out, the prevailing “move fast and break things” approach is unsuitable for such a powerful and complex technology. This approach has led to legal issues, with companies like OpenAI and Stability AI, the developer of Stable Diffusion, facing copyright infringement lawsuits. Publicly available chatbots have also been manipulated into generating hate speech, conspiracy theories, and misinformation.

The Need for Transparency and Accountability

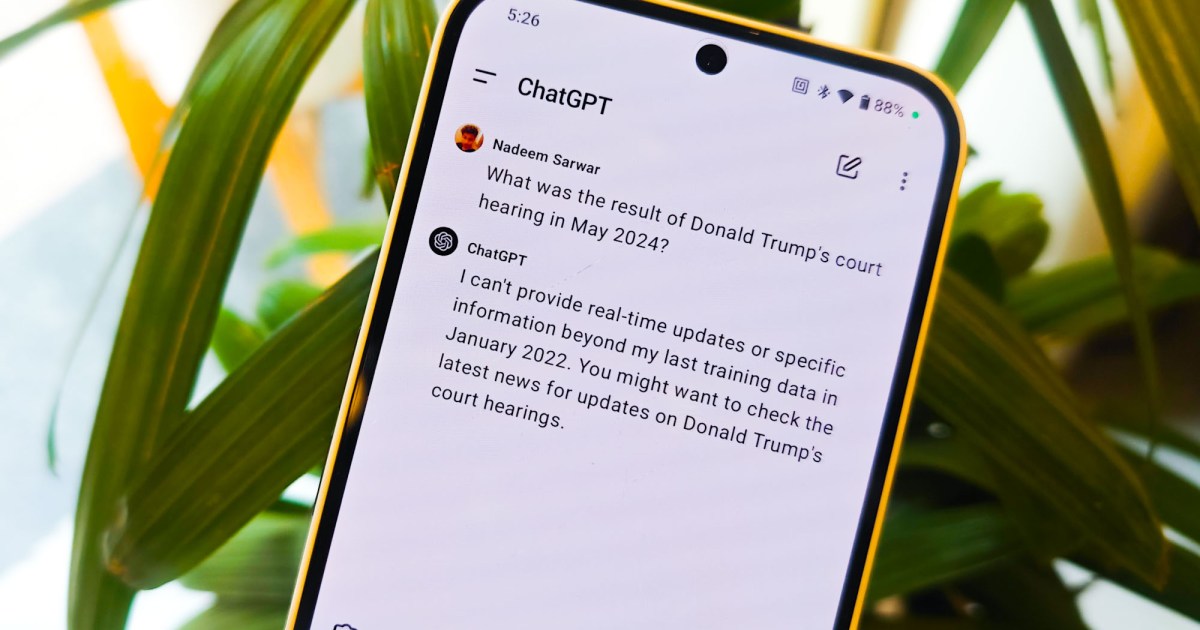

The concerned AI employees argue that these companies hold “substantial non-public information” about their products’ capabilities and limitations, including potential risks and the effectiveness of safety measures. This information is often inaccessible to government agencies and the public, hindering effective oversight.

The group emphasizes that “current and former employees are among the few people who can hold [AI companies] accountable to the public,” especially given the prevalence of confidentiality agreements and weak whistleblower protections.

Call to Action for Responsible AI Development

The group urges AI companies to take concrete steps towards greater transparency and accountability:

- Cease enforcing non-disparagement agreements.

- Implement anonymous channels for employees to raise concerns with the board and regulators.

- Protect whistleblowers from retaliation.

These measures are crucial to fostering a responsible AI ecosystem that prioritizes safety, ethical considerations, and public well-being.

Conclusion: Balancing Innovation with Responsibility

The rapid advancement of AI presents immense opportunities but also significant risks. The concerns raised by these current and former employees underscore the urgent need for effective oversight and a collaborative approach to responsible AI development. Balancing innovation with safety and ethical considerations is essential to harnessing AI’s transformative potential while limiting its harms, and the call for greater transparency and accountability is a step towards a future where AI benefits humanity.