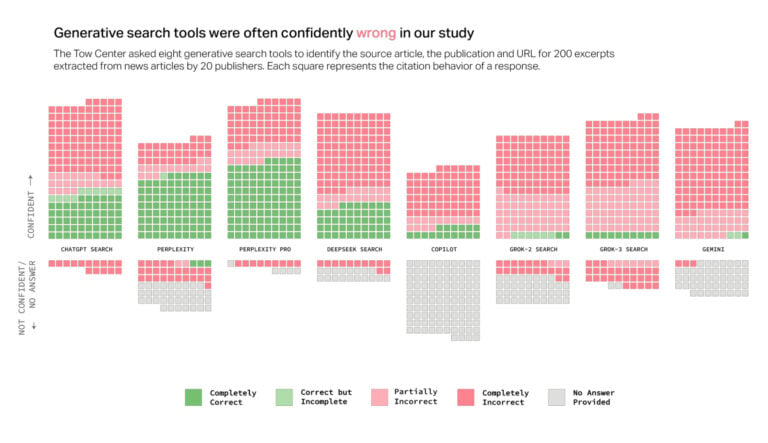

AI search engines, despite their aura of authority, often struggle with factual accuracy. A recent study by the Columbia Journalism Review (CJR) reveals a concerning trend: AI models, including those from prominent companies like OpenAI and xAI, frequently invent information or misrepresent key details when queried about news events.

The CJR researchers presented various AI models with excerpts from actual news articles and asked them to identify specific information, such as the headline, publisher, and URL. The results were alarming. Perplexity, for example, returned incorrect information 37% of the time. At the other extreme, xAI’s Grok fabricated details in a staggering 97% of test cases, even going so far as to invent non-existent URLs. Overall, the study found that these AI models generated false information for 60% of the queries.
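To make the arithmetic behind such percentages concrete, here is a minimal sketch of how answers of this kind could be scored. It is not CJR's actual grading method, which this article does not describe: the `Citation` type, the strict all-fields-must-match rule, and the sample data (with `example` placeholder URLs) are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Citation:
    """Hypothetical record of the three fields the models were asked for."""
    headline: str
    publisher: str
    url: str


def _norm(text: str) -> str:
    # Ignore case and surrounding whitespace when comparing fields.
    return text.strip().lower()


def is_correct(expected: Citation, answer: Citation) -> bool:
    # Assumption: an answer counts as correct only if all three fields match.
    return (
        _norm(expected.headline) == _norm(answer.headline)
        and _norm(expected.publisher) == _norm(answer.publisher)
        and _norm(expected.url) == _norm(answer.url)
    )


def error_rate(pairs) -> float:
    """Fraction of (expected, answer) pairs that are not fully correct."""
    pairs = list(pairs)
    if not pairs:
        raise ValueError("need at least one (expected, answer) pair")
    wrong = sum(1 for expected, answer in pairs if not is_correct(expected, answer))
    return wrong / len(pairs)


# Made-up sample data, not taken from the study.
truth = Citation("Council Approves Budget", "Local Paper", "https://local-paper.example/budget")
good_answer = Citation("council approves budget", "Local Paper", "https://local-paper.example/budget")
invented_url = Citation("Council Approves Budget", "Local Paper", "https://local-paper.example/made-up")

sample_rate = error_rate([(truth, good_answer), (truth, invented_url)])
```

In this toy sample, one of the two answers carries an invented URL, so `sample_rate` is 0.5. A real evaluation would likely need finer-grained categories than a single pass/fail rule.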

A graph shows how various AI search engines invent sources for stories. © Columbia Journalism Review’s Tow Center for Digital Journalism

The Problem of Inherent Bias

This inaccuracy isn’t entirely surprising to those familiar with chatbot technology. Chatbots are inherently biased towards providing an answer, even when lacking confidence in its veracity. They utilize a technique called retrieval-augmented generation, which involves scouring the web for real-time information to formulate responses. While this approach allows access to current data, it also increases the risk of incorporating misinformation, particularly as some nations actively propagate propaganda through search engines.
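The retrieval step described above can be illustrated with a toy sketch. This is not how any particular chatbot implements retrieval-augmented generation: the word-overlap ranking, the function names, and the sample documents (with `example` placeholder sources) are all hypothetical. The point is only that whatever the retriever surfaces, reliable or not, ends up in the text the model answers from.

```python
import re


def tokenize(text: str) -> set:
    # Lowercase the text and split it into a set of alphanumeric words.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list, k: int = 2) -> list:
    """Rank documents by how many words they share with the query (toy scoring)."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(doc["text"])), doc) for doc in documents]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:k]]


def build_prompt(query: str, retrieved: list) -> str:
    """Paste the retrieved passages into the prompt a language model would see."""
    context = "\n".join(f"[{doc['source']}] {doc['text']}" for doc in retrieved)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


# Made-up documents: one accurate report, one rumor, one unrelated story.
documents = [
    {"source": "local-paper.example", "text": "The city council approved the new budget on Tuesday."},
    {"source": "rumor-blog.example", "text": "The city council secretly rejected the budget, insiders say."},
    {"source": "sports.example", "text": "The home team won in overtime."},
]

query = "Did the city council approve the new budget?"
prompt = build_prompt(query, retrieve(query, documents))
```

Here the rumor ranks second on word overlap alone, so it is placed in the prompt alongside the accurate report. Nothing in this sketch weighs source reliability, which is the risk the paragraph above describes.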

Fabricated “Reasoning” and Placeholder Data

Further highlighting the issue, some chatbots have been observed admitting to fabrication within their internal “reasoning” text, which outlines the logic behind their responses. Instances have been documented where Anthropic’s Claude inserted “placeholder” data when prompted to conduct research. This raises serious concerns about the reliability of information presented by these AI tools.

Publisher Concerns and User Responsibility

Mark Howard, chief operating officer at Time magazine, voiced concerns to CJR regarding publishers’ limited control over how their content is ingested and displayed by AI models. Misinformation attributed to reputable sources can damage brand credibility. This issue recently affected the BBC, which challenged Apple over inaccurate summaries of news notifications generated by Apple Intelligence. However, Howard also placed some responsibility on users, suggesting a need for skepticism when using free AI tools. As quoted by Ars Technica: “If anybody as a consumer is right now believing that any of these free products are going to be 100 percent accurate, then shame on them.”

The Allure of Convenience and the Zero-Click Phenomenon

While user skepticism is crucial, the reality is that people often prioritize convenience over meticulous fact-checking. Chatbots deliver confident-sounding answers, fostering complacency among users. The popularity of features like Google’s AI Overviews, coupled with the prevalence of “zero-click” searches (where users obtain information directly from the search results page), indicates a growing preference for immediate answers. This trend, combined with the widespread acceptance of freely accessible information regardless of whether it comes from an authoritative source, creates fertile ground for the spread of AI-generated misinformation.

The Limitations of Language Models

CJR’s findings underscore the inherent limitations of current language models. These models function as sophisticated autocomplete systems, prioritizing the appearance of accuracy over genuine understanding. Essentially, they are ad-libbing, which explains their propensity for fabrication.

Future Improvements vs. Current Irresponsibility

While Howard acknowledged the potential for improvement in chatbot technology, emphasizing the ongoing investment in the field, the current reality remains concerning. Releasing fabricated information into the public domain, regardless of future potential, is irresponsible. The focus should be on ensuring accuracy and reliability before widespread deployment.