The rise of readily available generative AI tools has cast a shadow over the reliability of evidence presented in courtrooms. Judges are increasingly grappling with how to distinguish authentic material from sophisticated deepfakes, raising concerns about whether the justice system can still discern the truth. The problem is particularly acute for audio and visual evidence, which carries significant weight with juries and is hard for jurors to put out of their minds even after it is shown to be fabricated.

The Deepfake Dilemma: Eroding Trust in Evidence

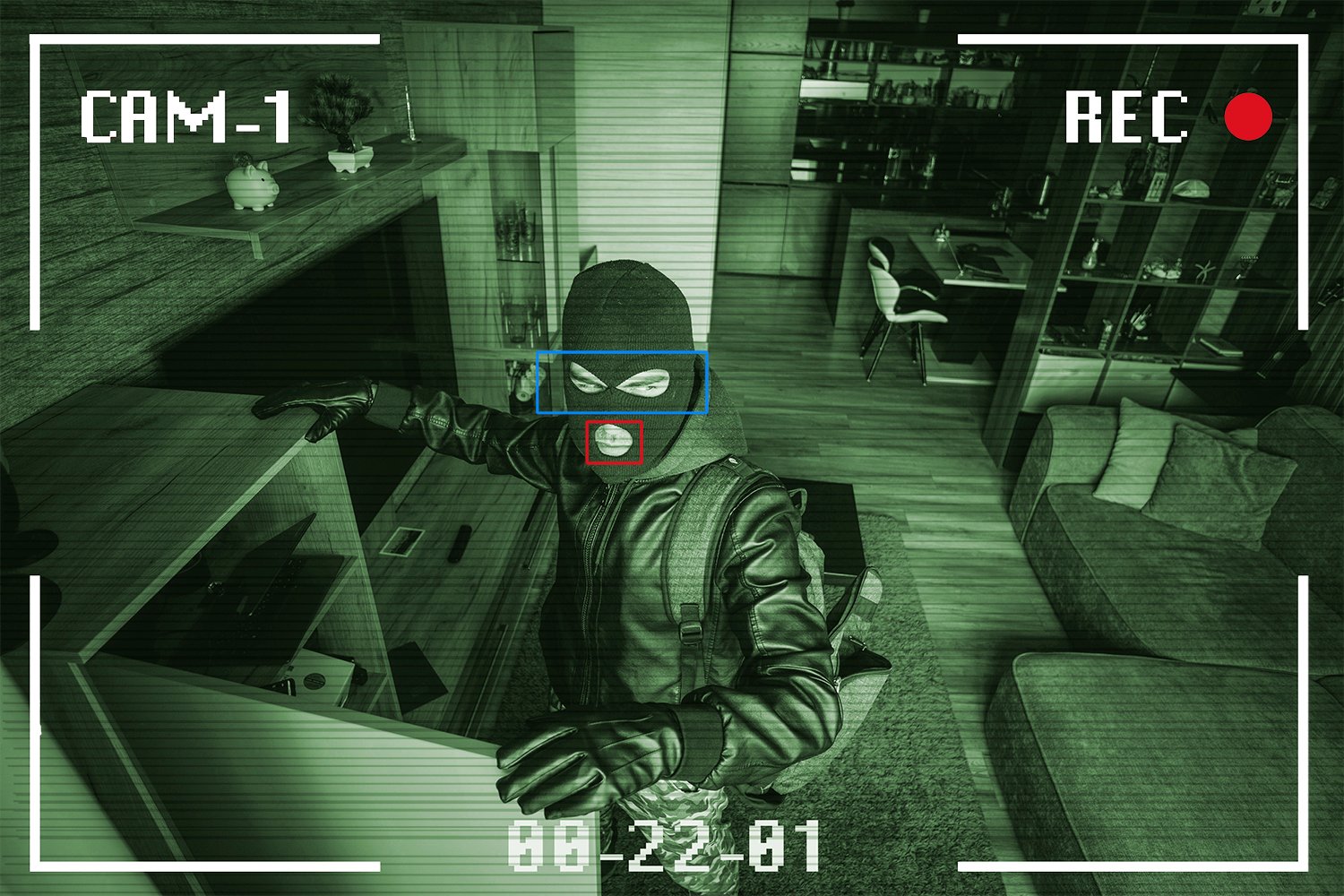

Judge Pamela Gates, a superior court judge in Phoenix, Arizona, is increasingly uneasy about the average person’s ability to tell truth from falsehood when confronted with AI-generated evidence. Imagine a victim who presents a photograph of bruises, only for the defendant to claim the injuries were digitally added. Or consider a plaintiff who submits an incriminating recording, while the defendant insists that the voice, though it sounds exactly like theirs, was fabricated. Such situations are becoming more common, and traditional methods of assessing evidence are no longer sufficient to resolve them.

The explosion of cheap, accessible generative AI systems has prompted legal scholars to call for changes to long-standing evidence rules. Proposals now under review by a federal court advisory committee would shift responsibility for authentication from juries to judges, empowering judges to screen out potentially fabricated material before trial. That would be a significant departure from current practice, in which disputed questions of authenticity are typically left to the jury.

The Power of Audio-Visual Evidence and the Liar’s Dividend

Research highlights the persuasive power of audio-visual evidence. Studies indicate that exposure to fabricated videos can influence eyewitness testimony, leading individuals to falsely recall events they never witnessed. Furthermore, jurors presented with video evidence alongside oral testimony retain information far more effectively than those who only hear testimony.

Judges already have the authority to exclude potentially fake evidence, but the threshold for admissibility is low. Under the existing federal rules, if a party disputes the authenticity of an audio recording, the party offering it need only call a witness who is familiar with the speaker’s voice to testify that it sounds like that person. Deepfakes have become convincing enough to fool parents into believing they are hearing or seeing their own children in distress, so this bar is easy to clear.

Adding another layer of complexity is the “liar’s dividend”: litigants exploit the prevalence of deepfakes online to falsely claim that legitimate evidence is fabricated. The tactic preys on jurors’ awareness of AI-generated content and can lead them to doubt genuine evidence. It has already surfaced in high-profile cases, including trials related to the January 6th Capitol riot and a civil suit involving Tesla.

Proposed Rule Changes: Strengthening Judicial Gatekeeping

To address these challenges, legal experts have proposed significant changes to the rules of evidence. One proposal would make judges gatekeepers: a litigant challenging the authenticity of evidence would first have to present substantial proof that it was altered or fabricated. The burden would then shift to the party introducing the evidence to provide corroborating information. Finally, the judge would decide whether the evidence’s probative value outweighs its potential to prejudice or mislead the jury.

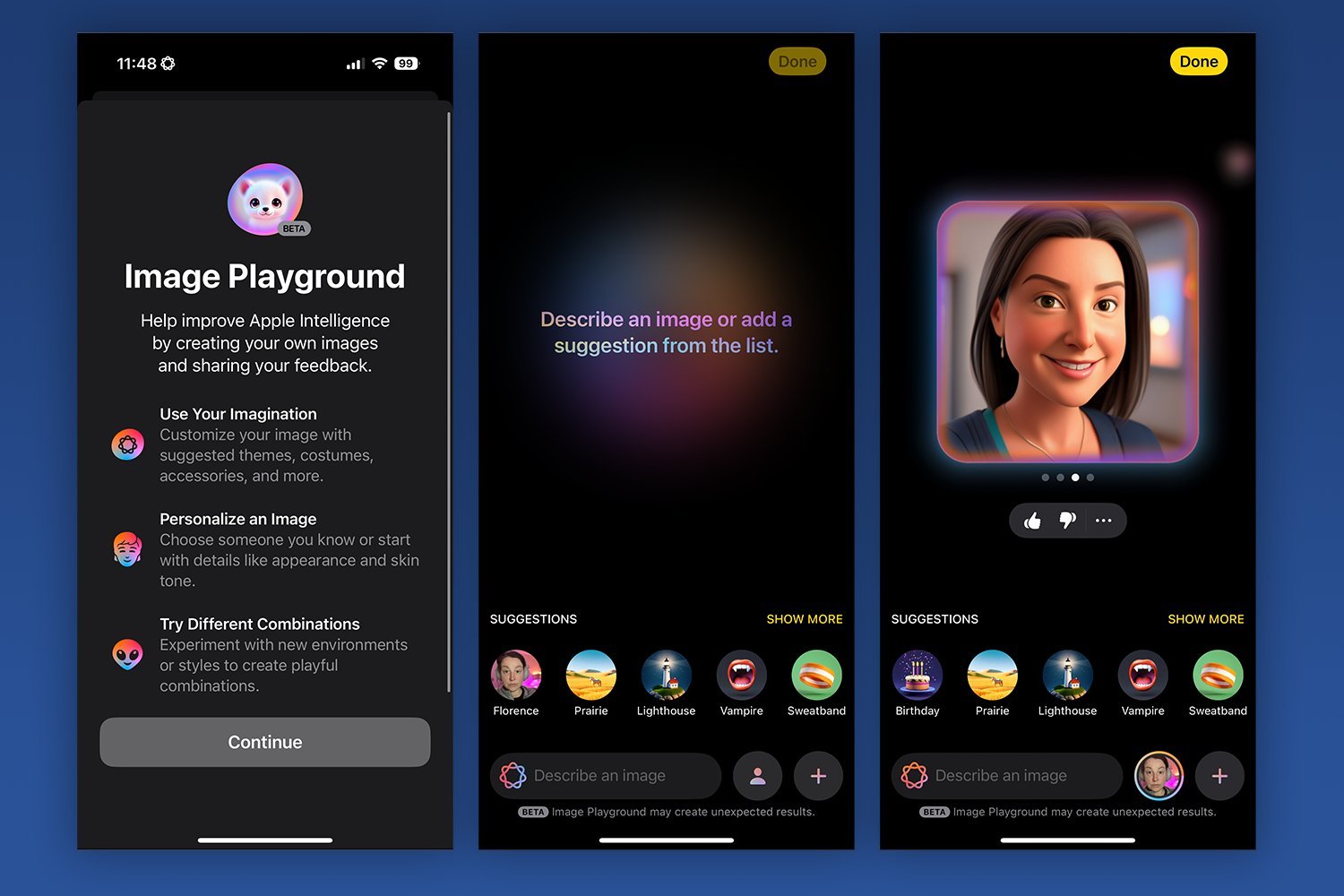

Another proposal would remove deepfake questions from the jury’s purview entirely. A party alleging a deepfake would have to obtain a forensic expert’s opinion before trial. The judge would review the expert’s report and the parties’ arguments and decide admissibility by a preponderance of the evidence. If the evidence is admitted, the jury would be instructed to treat it as authentic. The party alleging a deepfake would also bear the cost of the forensic expert, unless the judge determines that it lacks the resources to do so.

The Path Forward: A Long Road to Reform

Amending the Federal Rules of Evidence is a lengthy process that requires approval from several judicial committees and the Supreme Court. Some advocate a cautious approach, first observing how judges handle deepfake cases under the existing rules; others believe deepfakes represent a paradigm shift that demands immediate action.

Technological solutions, such as deepfake detection tools and digital watermarking, are also being explored. However, current detection tools are unreliable, and watermarking faces hurdles both in achieving widespread adoption and in resisting tampering.

Access to Justice: Protecting the Vulnerable

The most pressing concerns involve cases that rarely draw public attention, particularly in family courts, where litigants often lack legal representation or financial resources. These litigants are the most vulnerable to manipulation with deepfakes and the least equipped to challenge them. Judges in these courts also lack readily available tools and resources for assessing AI-generated evidence, which makes fair and just outcomes harder to ensure.