Former OpenAI researchers, William Saunders and Daniel Kokotajlo, have penned a letter to California Governor Gavin Newsom expressing their deep concern over OpenAI’s opposition to SB-1047, a state bill designed to implement stringent safety protocols for AI development. They argue that this stance, while disappointing, is not unexpected.

Driven by a commitment to AI safety, Saunders and Kokotajlo joined OpenAI to contribute to the responsible development of powerful AI systems. However, they ultimately resigned, citing a loss of faith in the company’s ability to prioritize safety, honesty, and responsibility in its AI pursuits.

The researchers warn that unchecked AI advancement poses significant risks of “catastrophic harm,” including potential threats like unprecedented cyberattacks and the facilitation of bioweapon creation. They criticize OpenAI CEO Sam Altman’s seemingly contradictory position on AI regulation, highlighting his congressional testimony advocating for industry regulation while simultaneously opposing specific state-level legislation like SB-1047. This apparent hypocrisy underscores their concerns about the company’s true commitment to safety.

Public apprehension regarding AI safety is evident. A 2023 survey conducted by MITRE Corporation and the Harris Poll revealed that only 39% of respondents considered current AI technology “safe and secure.”

SB-1047, the Safe and Secure Innovation for Frontier Artificial Intelligence Models Act, requires developers of advanced AI models to adopt specific safety measures before training begins. These include the ability to promptly shut a model down and written safety and security protocols. Such requirements are particularly relevant given OpenAI's history of data leaks and system intrusions.

OpenAI, however, disputes the researchers' characterization of its stance on SB-1047. The company says it favors federal AI regulation over state-specific laws, arguing that a unified federal approach will promote innovation and position the US as a leader in setting global AI standards.

Saunders and Kokotajlo counter that OpenAI’s call for federal regulation is a delaying tactic, arguing that Congress has shown reluctance to enact meaningful AI legislation. They emphasize the urgency of state-level action, noting that federal regulations, if eventually implemented, could preempt California’s efforts.

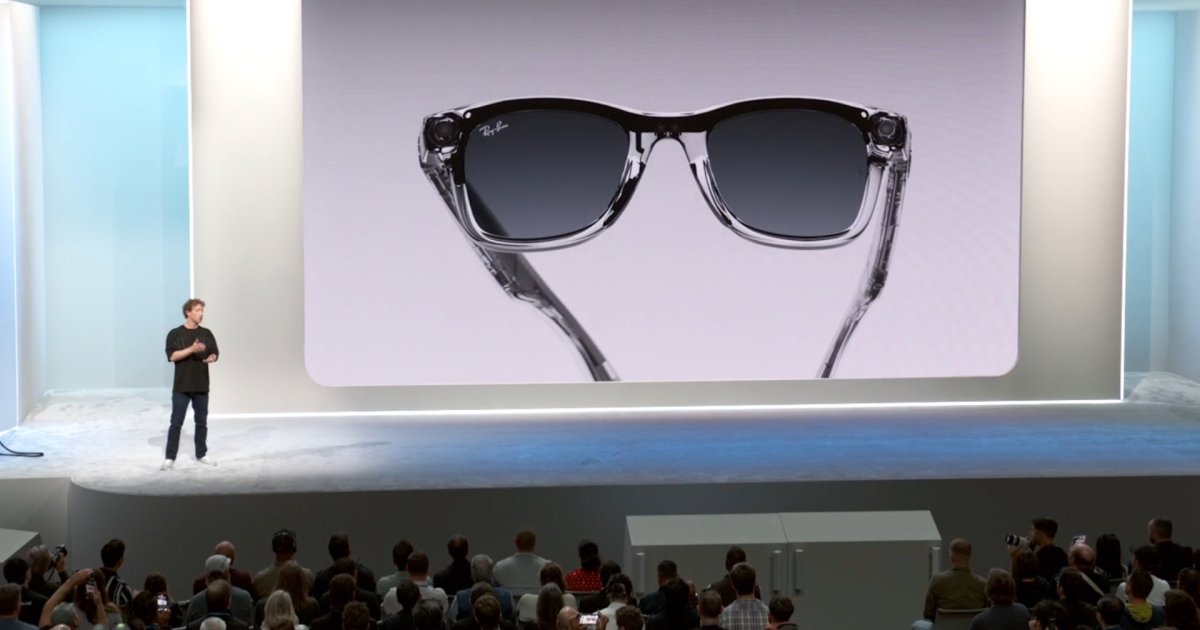

Surprisingly, xAI CEO Elon Musk has expressed support for SB-1047. Despite past threats to move his companies out of California over regulatory pressures, Musk acknowledged the importance of AI safety regulation, citing his long-standing advocacy for regulating potentially risky technologies. Separately, he has announced the construction of a powerful AI training cluster in Memphis, Tennessee.

The debate surrounding SB-1047 highlights the growing tension between fostering AI innovation and ensuring public safety. The differing perspectives underscore the critical need for a balanced approach that prioritizes both technological advancement and responsible development.