Google has unveiled Gemini 2.0, a significant upgrade to its AI model that the company says will change how users interact with their devices. The new iteration offers faster responses, native audio output, and, most importantly, a focus on “agentic” AI. It builds on the foundation laid by Gemini 1.5, the generation during which the Gemini app gained image generation with Imagen 3 and arrived on Android and iPhone.

Understanding Agentic AI and its Potential

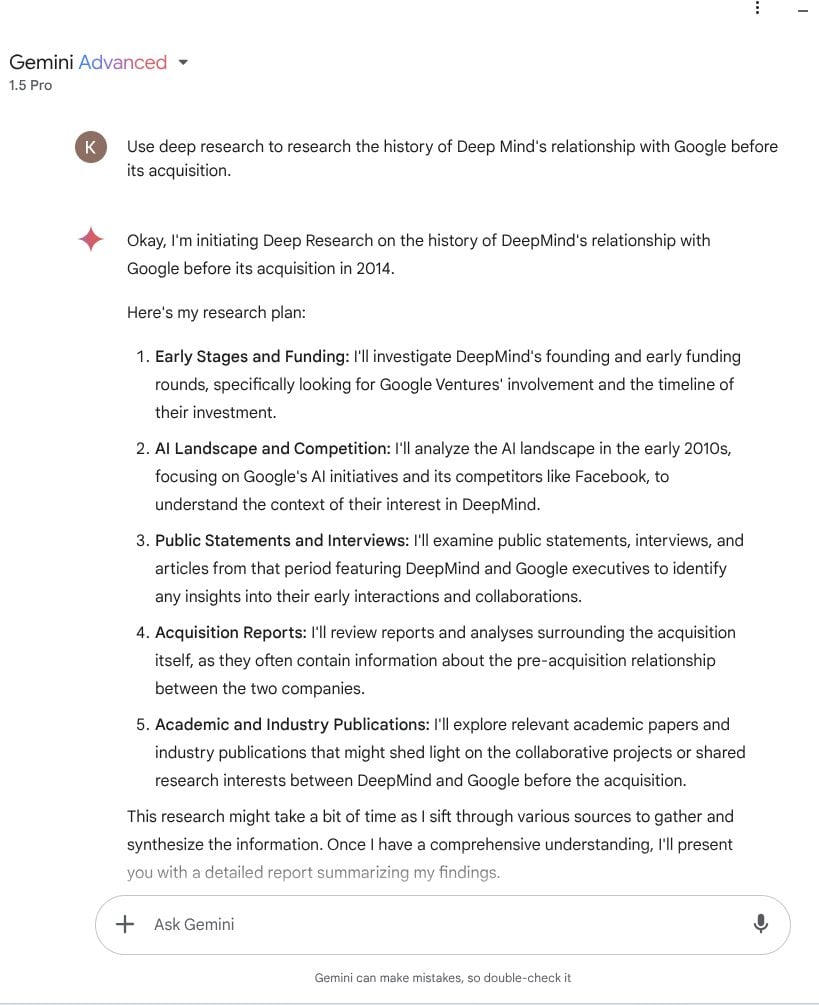

Agentic AI refers to AI systems that can plan and carry out multistep tasks on a user’s behalf, often by coordinating several models and tools. Imagine asking your AI to scan your emails, find a dinner reservation, and add it to your calendar, all automatically. This “universal assistant,” as Google calls it, begins with Gemini 2.0 Flash, which is available to all Gemini users. Gemini Advanced subscribers also gain access to Deep Research, an agentic tool designed to conduct in-depth web research and generate detailed reports.

Example of a Google Deep Research output

Deep Research: Your AI Research Assistant

Deep Research first drafts a “research plan” outlining the report’s structure, then analyzes relevant websites and compiles the findings into a multi-page document complete with tables, graphs, and source citations. The tool is currently available in English to Gemini Advanced users on desktop, and a mobile version is planned for next year.

Agentic AI Beyond Research: Coding and Gaming

While Deep Research shows off agentic AI’s research capabilities, Google has demonstrated other applications too. A new tool named “Jules,” similar to Microsoft’s GitHub Copilot, showcases Gemini 2.0’s ability to create, evaluate, merge, and execute code in real time. Gemini 2.0 has also been shown interacting with Supercell mobile games such as Clash of Clans, offering gameplay advice and reminding players of daily challenges. For now, however, the coaching seems basic, offering information that is readily available elsewhere.

Project Astra: The Future of Agentic AI?

Project Astra, currently in testing, hints at the future of agentic AI. Similar to Gemini Live but with enhanced visual processing and interpretation capabilities, Astra promises more conversational dialogue, memory of past conversations, and integration with Google Search, Google Lens, and Google Maps. While a public release date remains unknown, aspects of Astra are expected to be incorporated into future Gemini products.

Gemini 2.0’s Deep Research: A Google Search Powerhouse?

Deep Research aims to spare users from sifting through the web themselves by extracting relevant information and compiling it into comprehensive reports. The process can be time-consuming, however. Initial tests indicate that generating a report can take several hours, similar to the time a student might spend on a last-minute research assignment. And although Deep Research runs on a Gemini model with a 1-million-token context window, accuracy remains a concern: like AI Overviews in Google Search, it may sometimes draw on less reliable websites. Users should therefore verify the information it presents.

Conclusion: A Leap Forward with Agentic AI

Gemini 2.0 marks a significant step forward in AI development. Its focus on agentic AI could transform how we interact with our devices, from streamlining research to enhancing gaming. Challenges remain around accuracy and processing time, but Google’s commitment to improving and expanding agentic capabilities points to further developments ahead. The planned incorporation of Project Astra’s features suggests a future in which AI fits seamlessly into daily life, anticipating our needs and simplifying complex tasks.