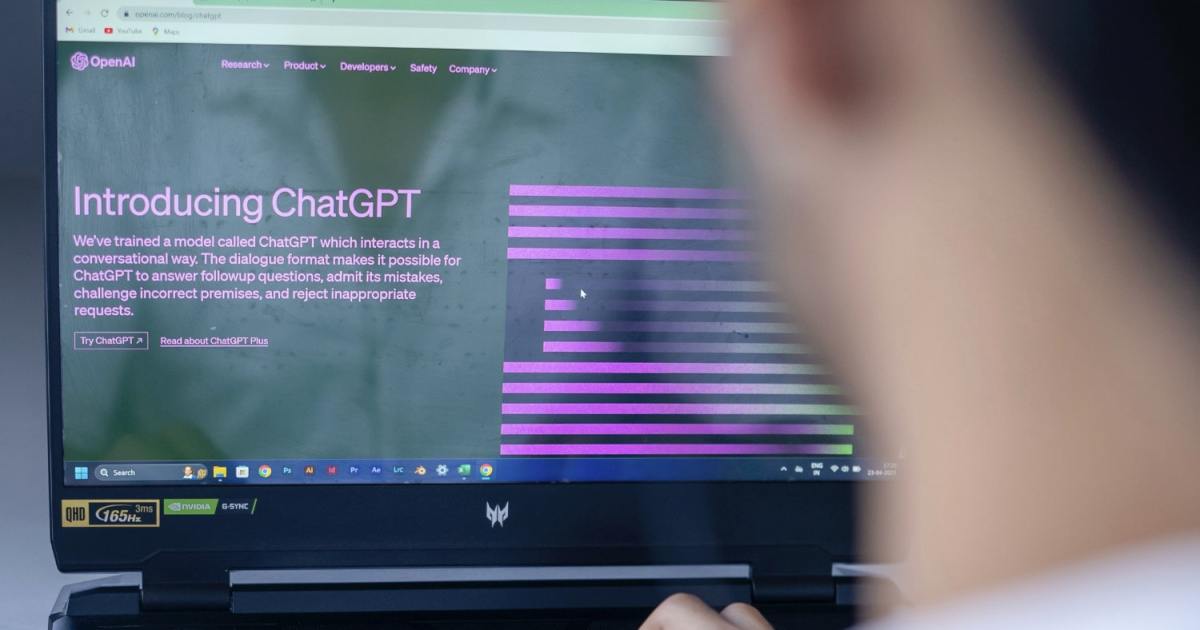

OpenAI’s next-generation large language model (LLM), rumored to be called Orion, has generated considerable excitement. However, recent reports suggest its improvement over GPT-4 may be smaller than the leap from GPT-3 to GPT-4. This potential slowdown raises important questions about the future of AI development.

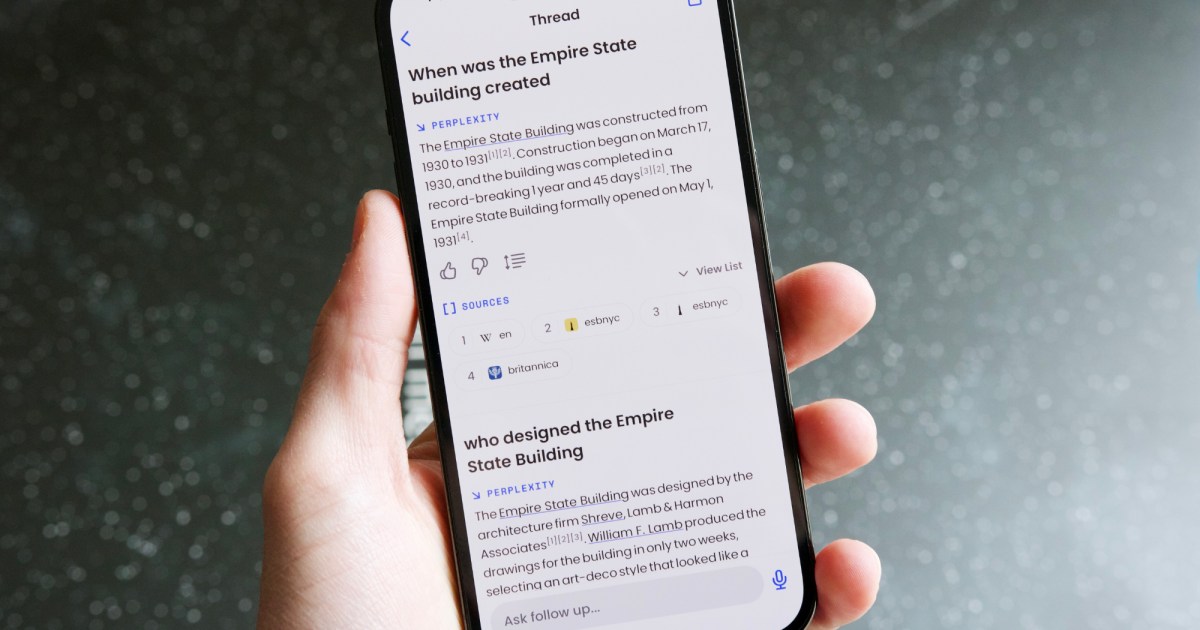

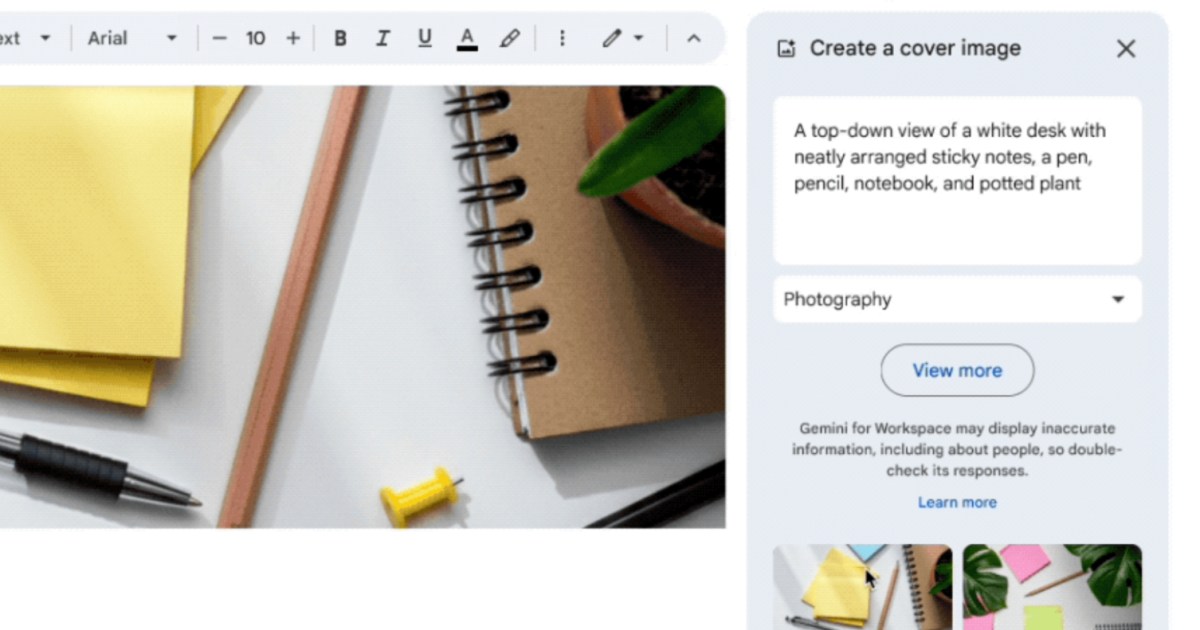

According to sources within OpenAI cited by The Information, Orion demonstrates only marginally better performance than GPT-4 in certain tasks, particularly coding. While it shows improved general language capabilities, like summarizing documents and composing emails, the overall improvement is reportedly “far smaller” than previously seen. One contributing factor is the increasing scarcity of high-quality training data. The readily available data from platforms like X, Facebook, and YouTube has already been extensively utilized, leaving AI companies scrambling for new sources of complex and diverse information. This data bottleneck is proving to be a major hurdle in pushing LLMs beyond their current limitations.

The push to keep scaling models despite this scarcity has significant implications, both environmental and commercial. As LLMs become increasingly complex, with parameter counts reaching into the trillions, the energy and resource demands for training them are expected to soar. Projections suggest a six-fold increase in resource consumption within the next decade. This escalating demand is driving major tech companies to secure vast amounts of energy. Microsoft’s deal to buy power from a restarted Three Mile Island reactor, AWS’s acquisition of a data center campus with access to 960 MW of nuclear power, and Google’s agreement to purchase the output of seven nuclear reactors all point to the immense power needs of AI data centers. These investments highlight the strain on existing infrastructure and the need for innovative solutions to power the future of AI.

A nuclear power plant with cooling towers.

To address the training data challenge, OpenAI has reportedly established a “foundations team.” This team is exploring various strategies, including the use of synthetic data generated by models like Nvidia’s Nemotron family. They are also investigating methods to enhance model performance post-training. These efforts represent a shift in strategy as AI companies grapple with the limitations of readily available data.

A person working on a laptop with code displayed on the screen.

Initially believed to be GPT-5, Orion is now expected to launch sometime in 2025. However, it remains unclear whether the necessary resources, particularly energy, will be available to support its deployment and further advances in AI without straining global infrastructure. The future of AI development hinges on overcoming these challenges while ensuring both progress and sustainability.