When Microsoft introduced Copilot+ PCs, an obvious question arose: why not run these AI apps directly on the GPU? At Computex 2024, Nvidia revealed its answer.

Nvidia and Microsoft are collaborating on an API that lets developers run AI-accelerated applications directly on RTX graphics cards. That includes the small language models (SLMs) behind Copilot features such as Recall and Live Captions.

The Surface Laptop running local AI models.

The API enables local execution on the GPU, lifting the restriction to NPUs that currently defines Copilot+ PCs. GPUs generally offer far more AI processing power than NPUs, which promises more capable AI applications and support for a much wider range of hardware.

Currently, Copilot+ PCs require an NPU rated at 40 TOPS (trillion operations per second) or more, a requirement that at launch only Qualcomm's Snapdragon X series chips meet. By comparison, even entry-level GPUs deliver around 100 TOPS, and high-end models far exceed that figure.

The new API also brings retrieval-augmented generation (RAG) to the Copilot runtime. RAG gives AI models access to information stored locally on the PC so they can produce more relevant responses, similar to Nvidia's Chat with RTX demo from earlier this year.

Expanding AI Capabilities with the RTX AI Toolkit

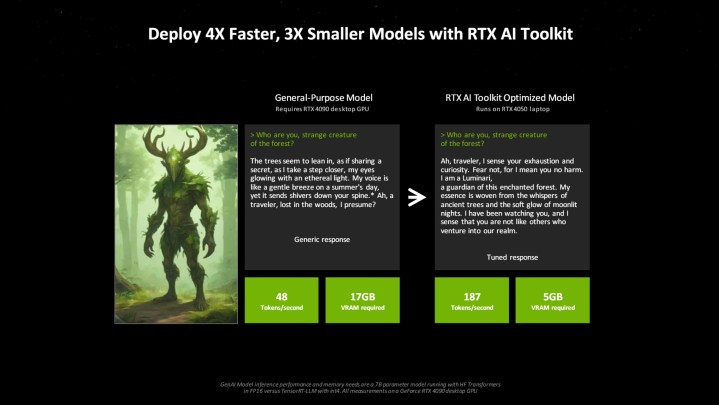

Beyond the API, Nvidia also unveiled the RTX AI Toolkit at Computex. This developer suite, launching in June, bundles tools and SDKs for customizing AI models for specific applications. Nvidia claims the toolkit can make models four times faster and three times smaller than open-source alternatives.

Performance comparison with the RTX AI toolkit.

The Future of AI Applications

We're witnessing a surge in developer tools for building user-facing AI applications. Some of these applications already ship on Copilot+ PCs, and we expect many more to arrive by next year. The necessary hardware is already available; now the software needs to catch up.