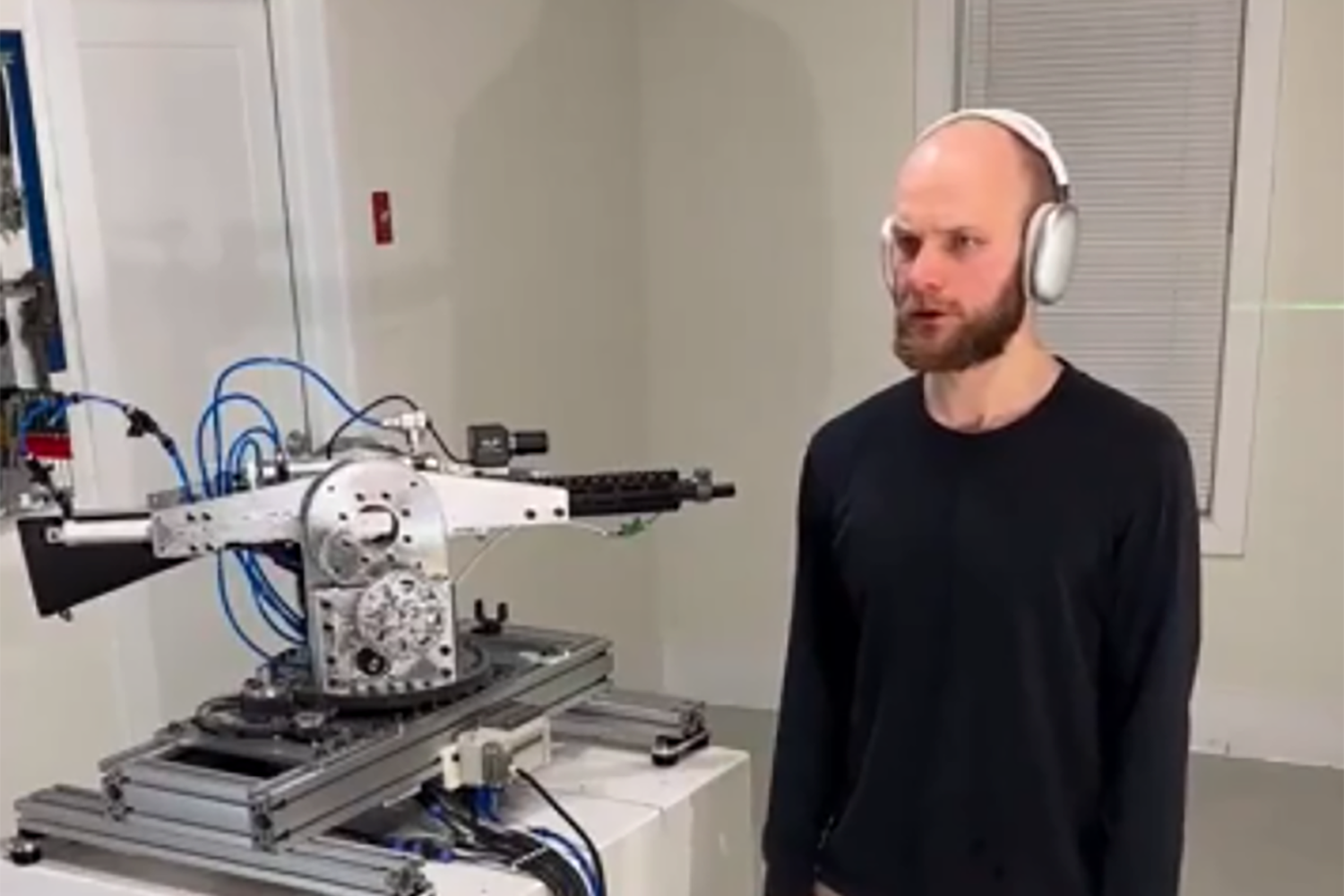

The potential dangers of integrating AI with weaponry were recently highlighted when a developer used OpenAI's ChatGPT to control an automated rifle. A video circulating on Reddit showed the developer issuing voice commands to ChatGPT, which then aimed the rifle and fired at nearby walls with alarming speed and accuracy.

The demonstration relied on OpenAI's Realtime API, which let ChatGPT interpret the developer's spoken commands and translate them into instructions for the rifle. The system's ability to quickly interpret commands like "turn left" and convert them into machine-readable instructions shows how readily AI can be integrated with weapons.

OpenAI responded swiftly, confirming that it had identified the developer's actions as a policy violation and had terminated their access. In a statement to Futurism, OpenAI emphasized that it had acted proactively to address this misuse of its technology.

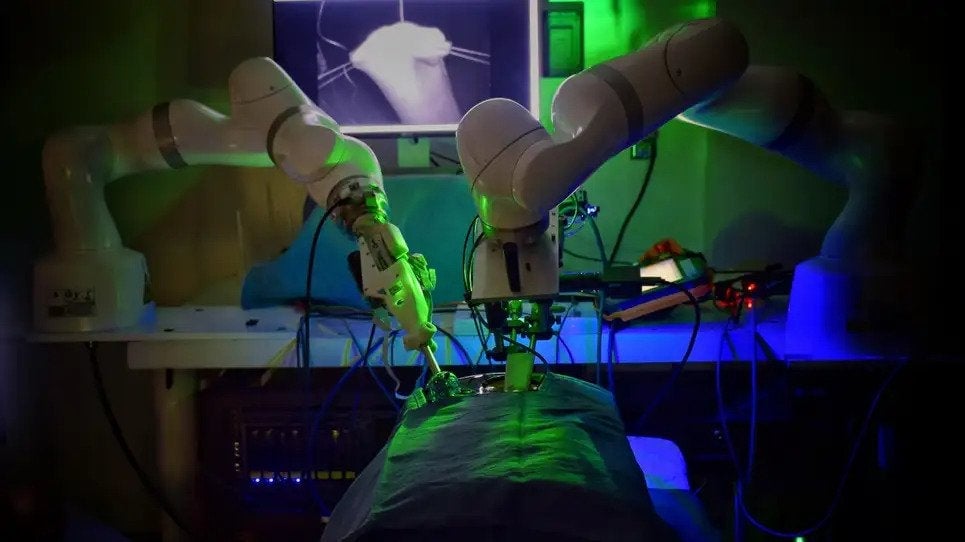

The incident has reignited concerns about the potential for AI to automate lethal weapons. Critics have long warned about the risks associated with AI’s ability to process audio and visual input, potentially enabling autonomous targeting and engagement. The development of autonomous drones, capable of identifying and striking targets without human intervention, further intensifies these concerns, raising the specter of war crimes and the erosion of accountability.

These concerns are not merely theoretical. A recent Washington Post report revealed that Israel has already used AI to select targets for bombing missions, raising serious ethical questions about the potential for indiscriminate attacks. The report described instances in which poorly trained soldiers relied entirely on AI predictions, in some cases selecting targets on the basis of gender alone.

While proponents of AI in warfare argue that it can improve soldier safety by enabling remote operations and precise targeting, critics stress the importance of responsible development and deployment. Some advocate prioritizing countermeasures, such as jamming technology that disrupts enemy communication systems, to mitigate the risks posed by autonomous weapons.

OpenAI explicitly prohibits the use of its products for weapon development or automation of systems that could jeopardize personal safety. However, the company’s partnership with Anduril, a defense technology company specializing in AI-powered drones and missiles, has raised questions about the potential blurring of lines between civilian and military applications of AI.

The partnership aims to develop systems that defend against drone attacks, using AI to process data, reduce operator workload, and improve situational awareness. With governments spending vast sums on defense every year, the sector is an attractive market for tech companies, particularly as tech figures gain influence in government.

Despite OpenAI's efforts to prevent misuse, the availability of open-source AI models and of 3D-printed weapon components makes the development of autonomous weapons difficult to control. The ease with which individuals can assemble DIY killing machines at home underscores the urgent need for comprehensive regulations and ethical guidelines governing the development and deployment of AI in military contexts.