The mother of Suchir Balaji, a former OpenAI employee who publicly criticized the company’s data collection practices, is demanding an FBI investigation into his death. Balaji, 26, was found dead in his San Francisco apartment on November 26, 2024. While the city’s chief medical examiner ruled the death a suicide, his mother, Poornima Ramarao, contends that evidence suggests otherwise.

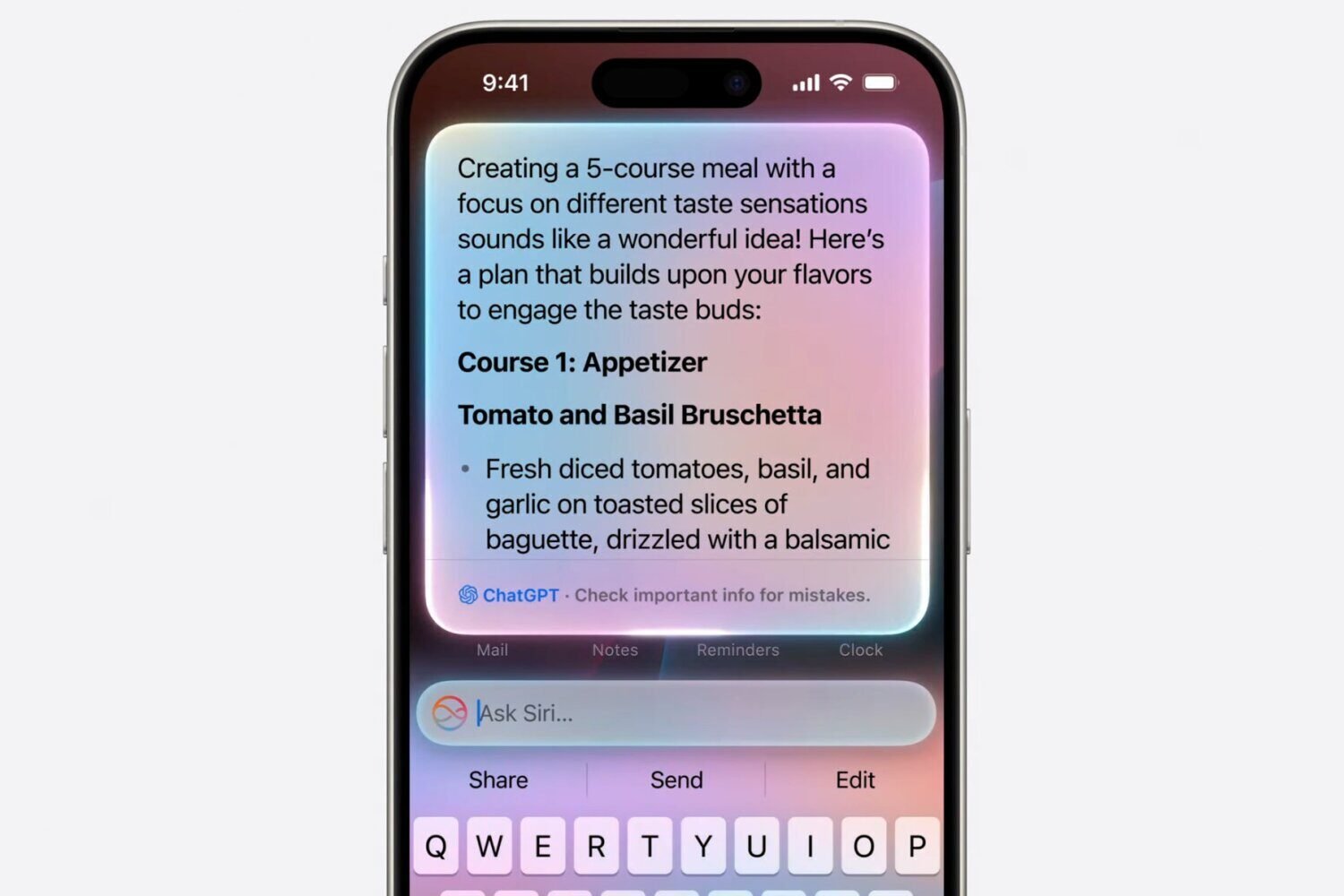

Balaji played a significant role in collecting training data for ChatGPT during his four years at OpenAI. He reportedly became disillusioned with the company’s transition from a non-profit research lab to a commercial enterprise. In August 2024, he resigned and subsequently spoke to the New York Times, alleging mass copyright infringement by OpenAI. This interview fueled the ongoing legal battle between the Times and OpenAI regarding the use of copyrighted articles in training ChatGPT.

Ramarao’s posts on X (formerly Twitter) allege that Balaji’s apartment was ransacked and that there were signs of a struggle in the bathroom, including bloodstains suggesting he had been struck. She has hired a private investigator whose initial findings allegedly contradict the official suicide ruling. A GoFundMe campaign to finance further investigation has raised over $47,000. Elon Musk, an OpenAI co-founder who is currently pursuing his own lawsuit against the company, commented on Ramarao’s post, stating, “This doesn’t seem like a suicide.”

OpenAI has offered its condolences to the family but otherwise pointed to a previous statement. MaagX reached out to Ramarao for comment but has not yet received a response.

Balaji’s career at OpenAI began with an internship in 2018, transitioning to a full-time position in 2021. According to a Business Insider interview with Ramarao, Balaji made substantial contributions to ChatGPT’s training methods and infrastructure. In 2022, he was tasked with gathering data from the internet for training GPT-4, the model OpenAI released in March 2023, a few months after ChatGPT launched on GPT-3.5.

Balaji’s public statements positioned him as a prominent figure in the debate over AI companies’ use of web content. This practice, viewed as outright theft by some media companies and as fair use by tech industry insiders, represents a contentious issue with significant financial and technological implications. Large language models like ChatGPT rely heavily on vast amounts of training data, primarily text, to generate human-like responses.

OpenAI and other AI companies have been criticized for their lack of transparency regarding training data. While they keep this data confidential, their web crawlers have been detected scraping websites, leading platforms like Reddit and X to implement restrictions. Many large models utilize public databases like Wikipedia and Common Crawl, a repository of over 250 billion web pages collected since 2007. While this information is generally known, Balaji’s insider perspective added weight to his concerns.

Following his public criticism, Balaji likely faced online backlash and possibly bullying. The pressure to succeed in Silicon Valley is notorious for contributing to stress and mental health issues. Whistleblowers also face risks such as legal repercussions, job loss, career damage, and social isolation. Additionally, studies indicate that Asian Americans are less likely to seek mental health treatment due to cultural stigma.

While the possibility of Balaji being targeted for his actions exists, there is currently no concrete evidence to support this claim. The circumstances surrounding his death, including the pressures he faced and his potential struggles with mental health, could plausibly explain the tragedy.

The case of Ian Gibbons serves as a stark reminder of the potential consequences of whistleblowing. Gibbons, the chief scientist at Theranos, reportedly took his own life after facing pressure from Elizabeth Holmes for raising concerns about the company’s blood tests.

Ramarao’s pursuit of answers and her disbelief in the official ruling are understandable. While further investigation may uncover additional details, at present, there’s no compelling reason to suspect foul play.