Stanford University professor Jeff Hancock, a leading expert in social media, has found himself in a peculiar situation. He’s been accused of using AI-generated misinformation while serving as an expert witness in a case about… AI-generated misinformation. Hancock, the founding director of Stanford’s Social Media Lab, provided expert testimony in Kohls v. Ellison, a lawsuit challenging Minnesota’s new law against deepfakes in elections.

The controversy revolves around a study Hancock cited in his expert opinion. He referenced research that supposedly found people struggle to differentiate between real and manipulated content, even when aware of deepfakes. However, the plaintiffs’ attorneys argue that the study, titled “The Influence of Deepfake Videos on Political Attitudes and Behavior” and purportedly published in the Journal of Information Technology & Politics, doesn’t exist.

The plaintiffs allege that the citation is an “AI hallucination,” likely generated by a large language model such as ChatGPT. They have filed a motion to exclude Hancock’s expert opinion, arguing that the fabricated citation calls the entire document into question, particularly because his commentary offers little in the way of methodology or analytical logic. The Minnesota Reformer first reported the accusations. Maagx.com reached out to Hancock for comment, but he has not yet responded.

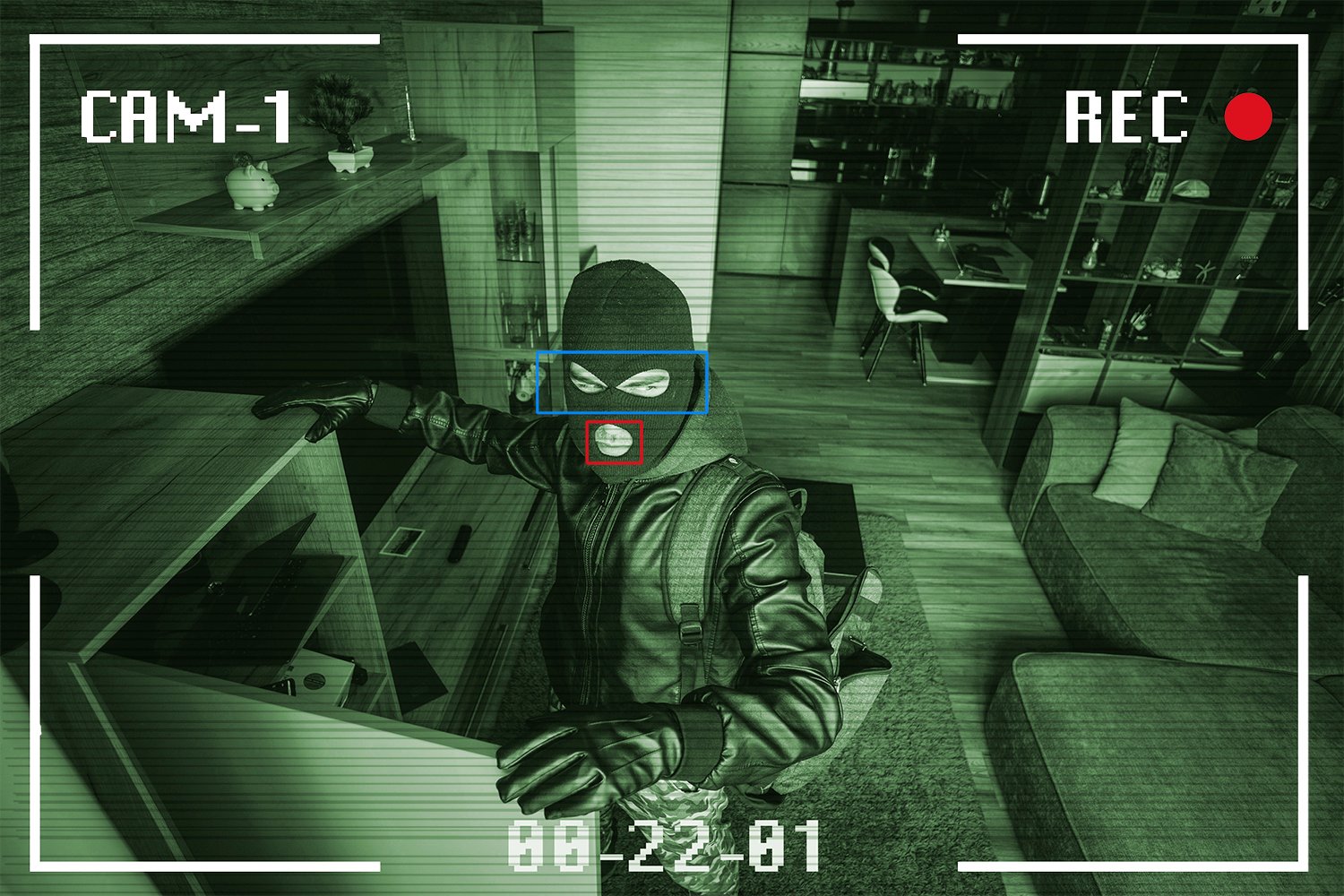

Minnesota is among 20 states that have enacted laws to regulate the use of deepfakes in political campaigns. The Minnesota law prohibits knowingly or recklessly disseminating deepfakes within 90 days of an election if they’re created without consent and intended to influence election outcomes.

The lawsuit challenging the law was filed by a conservative law firm representing Minnesota State Representative Mary Franson and Christopher Kohls, a YouTuber known as Mr. Reagan. Kohls has also filed a lawsuit against California’s election deepfake law, resulting in a preliminary injunction last month that prevented the law from taking effect.

This case highlights the growing challenges posed by AI-generated misinformation, especially in elections. That a misinformation expert may have relied on a fabricated, AI-generated citation shows how easily such errors can slip into even expert work. The outcome could shape future legal battles over deepfake laws and election integrity.