The AI landscape is evolving rapidly, and most people know it through cloud-based models like ChatGPT. Running AI locally on your PC offers its own advantages, though, and Microsoft has just made that easier with its new Local AI tool. Announced at the Build conference, this command-line utility lets you experiment with local Large Language Models (LLMs) directly on your Windows machine with only a handful of commands.

What is Microsoft Local AI?

Microsoft Local AI is a command-line tool designed to streamline the process of running Large Language Models (LLMs) directly on your computer. Initially aimed at developers, its simplicity makes it approachable for anyone curious about local AI. Think of it like “winget,” Windows’ package manager: instead of complex setups, a few simple commands download and configure everything needed. This tool not only runs LLMs but also optimizes their performance for your specific PC hardware.

System Requirements for Running Microsoft Local AI

To use Microsoft Local AI, your PC should run Windows 10 or 11. Minimum specs are 8GB RAM (16GB recommended) and 3GB storage (15GB recommended for multiple models). While not essential, a dedicated Neural Processing Unit (NPU) or a modern GPU significantly boosts performance. This includes hardware in Copilot+ PCs, Nvidia RTX 2000 series, or AMD Radeon 6000 series and newer.
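As a rough illustration, the figures above can be turned into a quick self-check. This is a minimal sketch, not part of Microsoft's tool: the function names (`rate`, `check_requirements`, `measure_free_disk_gb`) are made up for this example, and the RAM figure is supplied by hand because Python's standard library has no portable way to read it.

```python
import shutil

# Thresholds taken from the requirements paragraph above (in GB).
MIN_RAM_GB, RECOMMENDED_RAM_GB = 8, 16
MIN_DISK_GB, RECOMMENDED_DISK_GB = 3, 15


def rate(value_gb: float, minimum: float, recommended: float) -> str:
    """Classify a value as 'below minimum', 'minimum', or 'recommended'."""
    if value_gb < minimum:
        return "below minimum"
    if value_gb < recommended:
        return "minimum"
    return "recommended"


def check_requirements(ram_gb: float, disk_gb: float) -> dict:
    """Compare installed RAM and free disk space against the article's figures."""
    return {
        "ram": rate(ram_gb, MIN_RAM_GB, RECOMMENDED_RAM_GB),
        "disk": rate(disk_gb, MIN_DISK_GB, RECOMMENDED_DISK_GB),
    }


def measure_free_disk_gb(path: str = ".") -> float:
    """Free space on the drive holding `path`, counting 1 GB as 1024**3 bytes."""
    return shutil.disk_usage(path).free / 1024**3


if __name__ == "__main__":
    # Replace 16 with the amount of RAM installed in your PC.
    print(check_requirements(ram_gb=16, disk_gb=measure_free_disk_gb()))
```

A result of "minimum" for either entry means the tool should run, but a single small model is probably the sensible starting point.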

How to Install and Run Microsoft Local AI: A Step-by-Step Guide

Setting up Microsoft Local AI is straightforward. Follow these steps:

-

Open a Command-Line Terminal

Press the Windows key, type “terminal,” and select the Windows Terminal app (or Windows PowerShell) from the results. -

Install Microsoft Local AI

At the command prompt, type the following command and press Enter:winget install Microsoft.LocalWait a few moments for the necessary files to download and install. Microsoft handles the entire process automatically.

-

Download and Run Your First AI Model

Microsoft recommends starting with the Phi-3.5-mini model due to its small size and quick response times. To run it, type:foundry model run phi-3.5-miniThe terminal will then prompt you to enter questions, like “Is the sky blue on Mars?”

-

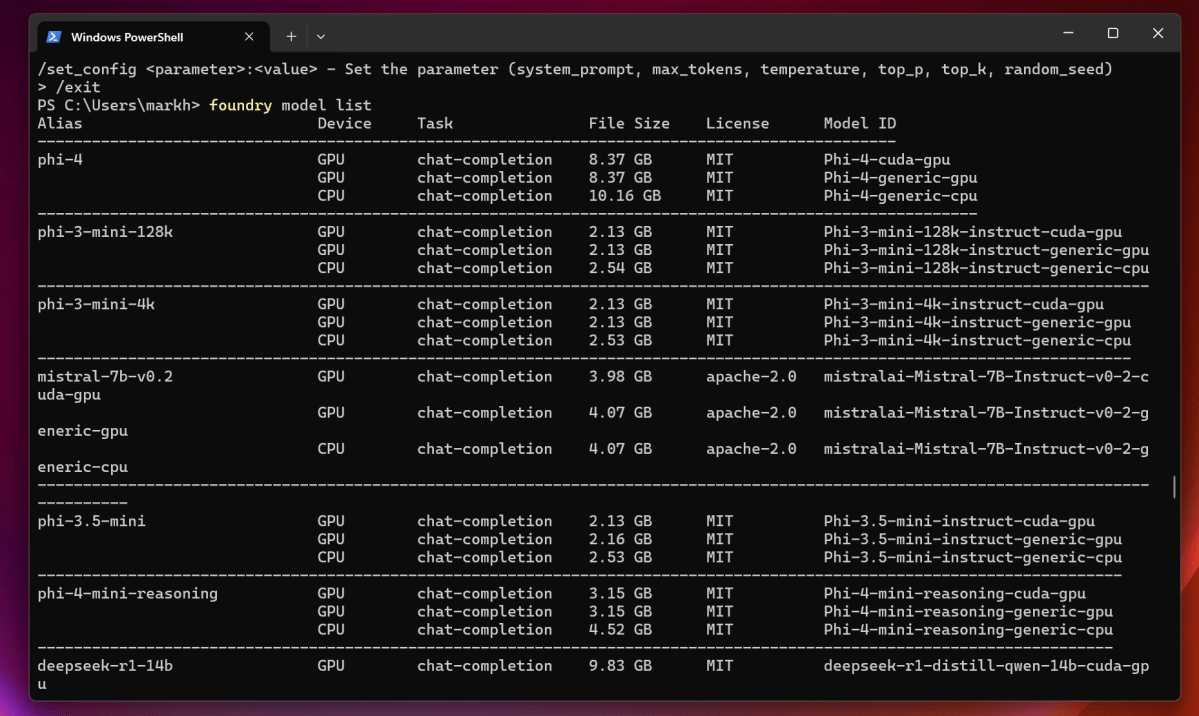

Explore Other Available Models

You’re not limited to Phi-3.5-mini. To see a list of other supported models, use this command:foundry model listTo run a different model, simply replace “phi-3.5-mini” in the previous run command with the name of your chosen model from the list.

List of available AI models like Phi-3 and CodeLlama in Microsoft Local AI displayed in a command-line interface.
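If you would rather script the run and list commands from the steps above than type them each time, a small wrapper is one option. The sketch below is only an illustration: the helper names (`list_command`, `run_command`, `foundry_available`) are invented here, and the only commands it issues are the two `foundry model` commands already shown in this guide.

```python
import shutil
import subprocess


def list_command() -> list[str]:
    """Argument list for `foundry model list`."""
    return ["foundry", "model", "list"]


def run_command(model: str = "phi-3.5-mini") -> list[str]:
    """Argument list for `foundry model run <model>`."""
    if not model or any(ch.isspace() for ch in model):
        raise ValueError("model name must be a single non-empty token")
    return ["foundry", "model", "run", model]


def foundry_available() -> bool:
    """True if a `foundry` executable can be found on PATH."""
    return shutil.which("foundry") is not None


if __name__ == "__main__":
    if not foundry_available():
        print("foundry was not found on PATH; install the tool first.")
    else:
        # Show the available models, then hand the terminal to the chat session.
        subprocess.run(list_command(), check=True)
        subprocess.run(run_command("phi-3.5-mini"), check=True)
```

Passing the command as a list of arguments, rather than one string, avoids shell quoting problems if a model name ever contains unusual characters.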


Key Benefits and Current Capabilities of Local AI

Microsoft Local AI’s primary advantage is its on-device operation, offering enhanced privacy and offline access after model download. It’s engineered for speed and efficiency, automatically selecting the best model version for your PC’s hardware, including NPUs or GPUs. Currently, it functions as an LLM chatbot. Given its developer focus, future expansions could include more complex tasks, potentially integrating with system features like the Windows Snipping Tool’s text extraction capabilities.
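To make the idea of "selecting the best model version for your hardware" concrete, here is a purely illustrative sketch. It does not describe how Microsoft's tool works internally: the preference order (NPU, then GPU, then CPU), the variant labels, and the `pick_variant` function are all assumptions made up for this example.

```python
# Hypothetical preference order: an assumption for illustration only,
# not the tool's documented behavior.
PREFERENCE = ("npu", "gpu", "cpu")


def pick_variant(available_variants: set[str], detected_hardware: set[str]) -> str:
    """Return the first preferred target that the model offers and the PC has."""
    for target in PREFERENCE:
        if target in available_variants and target in detected_hardware:
            return target
    raise LookupError("no variant of this model matches the detected hardware")


if __name__ == "__main__":
    # A model offered for GPU and CPU, on a PC that has an NPU and a CPU:
    # the only target both sides share is the CPU.
    print(pick_variant({"gpu", "cpu"}, {"npu", "cpu"}))
```

The point is only that the choice depends on two things at once: which builds of a model exist, and which accelerators the PC actually has.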

Microsoft Local AI in the Broader AI Landscape

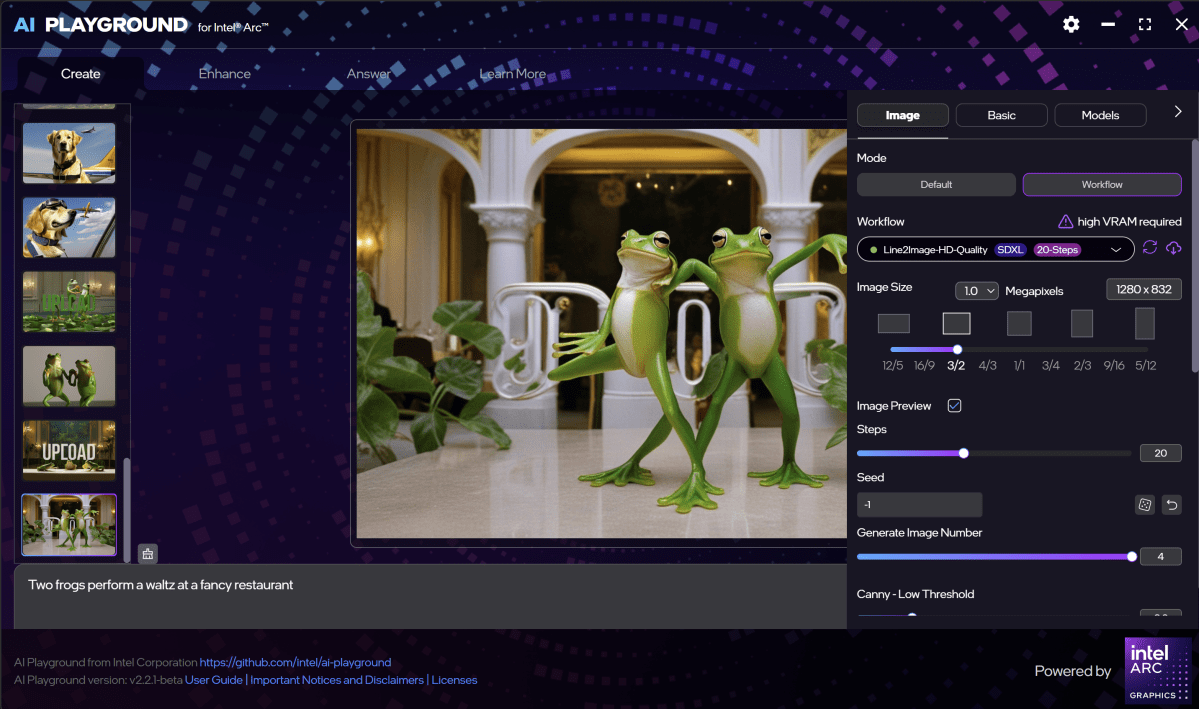

While Microsoft Local AI simplifies on-device LLMs, it’s distinct from feature-rich cloud services like ChatGPT or Gemini. Unlike Intel’s AI Playground, which offers image generation but is Intel-specific, Microsoft’s tool is hardware-agnostic for basic LLM chat, though currently text-only. It’s a foundational step, making local AI more accessible on a wider range of Windows PCs, though not yet a replacement for comprehensive cloud solutions.

Intel AI Playground interface showcasing settings for AI art generation, contrasting with Microsoft's text-based Local AI.


Conclusion

Microsoft Local AI isn’t positioned to outperform established cloud AI giants yet. Its current strength lies in its remarkable simplicity and seamless integration into Windows, providing a straightforward, text-based local LLM experience. As Microsoft continues to develop this tool, its accessibility could pave the way for more powerful and diverse local AI applications on PCs. For now, it’s an exciting and easy way to explore the potential of on-device AI.