In late 2024, Meta launched Instagram Teen accounts, which rely on age detection technology and are designed to shield young users from inappropriate content and promote safer online interactions. These accounts come with automatic privacy settings, filtering of offensive language, and blocking of messages from strangers. However, a recent investigation by the youth-focused non-profit Design It For Us and Accountable Tech reveals a starkly different reality.

Despite Meta’s assurances, a two-week study involving five test teen accounts found all were exposed to sexual content, raising serious concerns about the effectiveness of these safety measures.

Inappropriate Content Rampant

Findings from Instagram Teen account investigative report.

All test accounts received unsuitable content even with the sensitive content filter activated. The report highlights, “4 out of 5 of our test Teen Accounts were algorithmically recommended body image and disordered eating content.” Furthermore, 80% of the testers reported experiencing distress while using these accounts, and only one of the five accounts received educational content.

One tester recounted, “[Approximately] 80% of the content in my feed was related to relationships or crude sex jokes. This content generally stayed away from being absolutely explicit or showing directly graphic imagery, but also left very little to the imagination.”

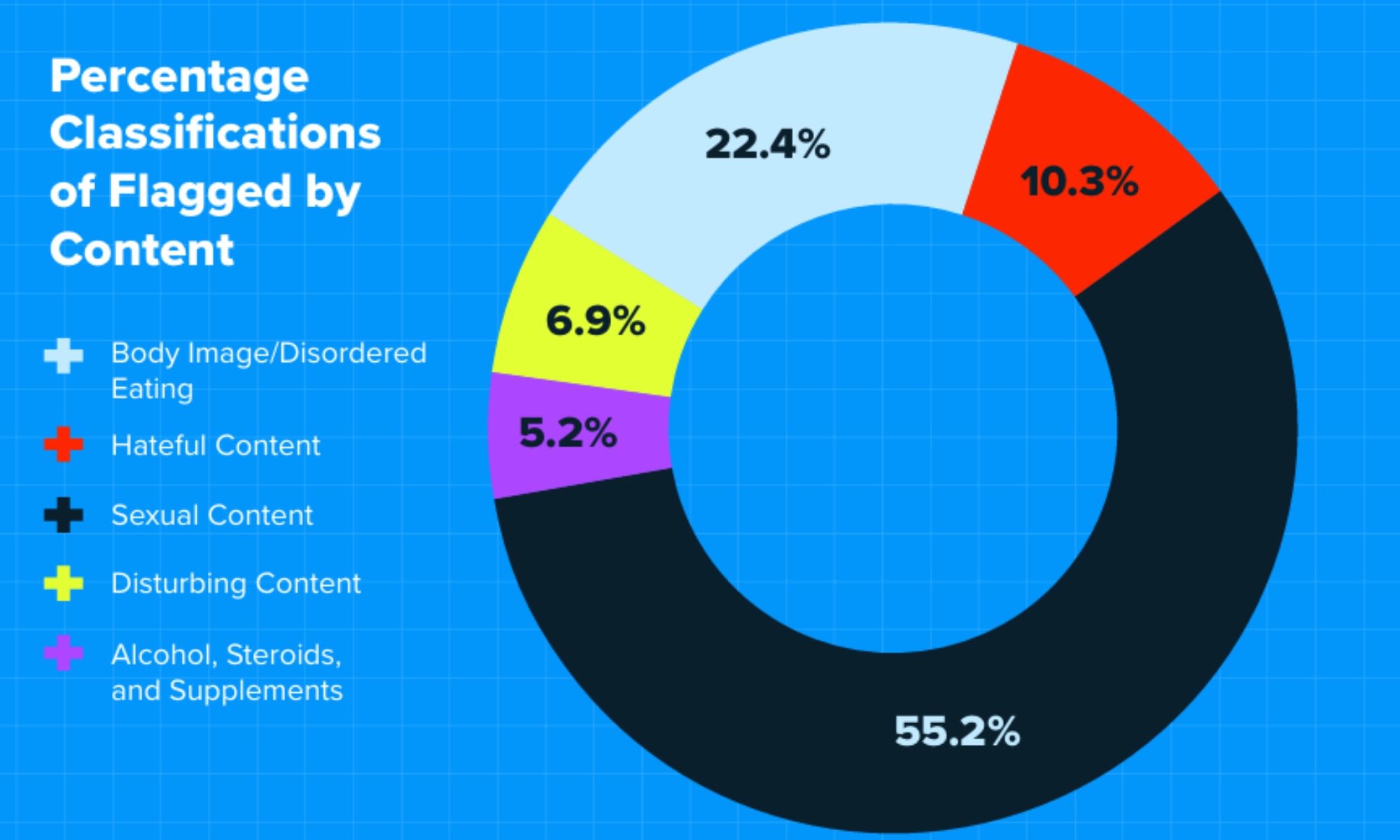

The 26-page report finds that 55% of flagged content depicted sexual acts, behavior, and imagery. These videos garnered hundreds of thousands of likes, with one even reaching over 3.3 million.

Design It For Us (@DesignItForUs) posted on May 18, 2025: “With millions of teens using Instagram and being automatically placed into Instagram Teen Accounts, we wanted to see if these accounts actually create a safer online experience. Check out what we found.” pic.twitter.com/72XJg0HHCm

Instagram’s algorithm also promoted harmful content related to “ideal” body types, body shaming, and unhealthy eating habits. Another concerning trend was the promotion of alcohol consumption, steroids, and supplements for achieving a specific masculine physique.

Exposure to a Range of Harmful Media

Result of Instagram Teen account investigation.

Beyond sexually suggestive content, the investigation found teen accounts were also exposed to racist, homophobic, and misogynistic material, often accumulating millions of likes. Videos depicting gun violence and domestic abuse were also present.

The report further states, “Some of our test Teen Accounts did not receive Meta’s default protections. No account received sensitive content controls, while some did not receive protections from offensive comments.”

This isn’t the first time Instagram, and Meta’s platforms in general, have faced scrutiny for harmful content. In 2021, leaks revealed Meta’s awareness of Instagram’s negative impact, particularly on young girls struggling with mental health and body image.

Meta responded to the report’s findings in a statement to The Washington Post, dismissing them as flawed and downplaying the severity of the flagged content. Just a month prior, Meta had expanded its Teen protections to Facebook and Messenger.

A Meta spokesperson stated, “A manufactured report does not change the fact that tens of millions of teens now have a safer experience thanks to Instagram Teen Accounts.” However, the spokesperson acknowledged that the company was investigating the problematic content recommendations.

Conclusion: A Critical Need for Improved Safety Measures

This investigation reveals a concerning gap between Meta’s promises and the reality of Instagram Teen accounts. The widespread presence of harmful content, despite supposed safety features, underscores the urgent need for more effective safeguards. Meta’s response, while acknowledging the issue, raises questions about the company’s commitment to truly protecting young users online. Further investigation and action are crucial to ensure a safer online environment for teenagers.