Imagine dictating to your AI assistant: “Hey AI, navigate to the password field in the dialog box at the lower left of my screen, input CapitalP-ampersand-S-S-W-zero-R-D-asterisk-numberSign-exclamation, and then press Enter.” While the thought of AI flawlessly executing complex voice commands is enticing, the practicality often falls short. Would you announce your password aloud in a bustling co-working space, or even in the privacy of your home? Most likely, you’d opt for the familiar tactile feedback of your mouse and the click of your keyboard.

This very scenario highlights a crucial question: despite the dazzling demonstrations of AI capabilities, is it truly poised to make our keyboards and mice relics of the past? While AI can book eight tickets to a Liverpool match at Anfield and get you to the checkout, the final, sensitive step of entering a password often brings us back to these fundamental input devices. The rapid advancements in AI agents and automation from tech giants like Google, OpenAI, and Anthropic fuel this speculation, yet the “last-mile” dependency on manual input remains a significant hurdle.

The Alluring Promise of AI-Driven Interaction

https://www.youtube.com/watch?v=JcDBFAm9PPI

Artificial intelligence was undeniably the star of Google’s I/O event earlier this year, painting a future where Android smartphones, and indeed any platform touched by Gemini, would be fundamentally transformed. From Workspace applications like Gmail to in-car navigation via Google Maps, the integration of AI promised a paradigm shift. The demonstrations of Project Mariner and the research prototype Project Astra were particularly striking. These next-generation conversational assistants aim to enable users to accomplish real-world tasks through voice, potentially eliminating the need to tap screens or use keyboards. Imagine seamlessly transitioning queries from a product manual on a website to instructional YouTube videos without losing context – a true testament to AI’s evolving memory capabilities. In a web browser, such an AI could book your travel, presenting you with a final confirmation page, leaving only payment as your manual step. This sophisticated level of voice interaction naturally leads one to ponder if the keyboard and mouse are becoming antiquated concepts for digital input.

The “Last Mile” Problem and the Burden of Error

Gemini Live on a smartphone identifying headphones using camera and screen sharing capabilities.

It’s worth noting that voice-based system navigation isn’t entirely new; Windows PCs and macOS have long included voice access tools within their accessibility suites, complete with customizable shortcuts. However, the current AI revolution suggests a broader ambition: to transition voice control from an assistive technology to the primary input method for everyone, effectively sidelining the keyboard and mouse.

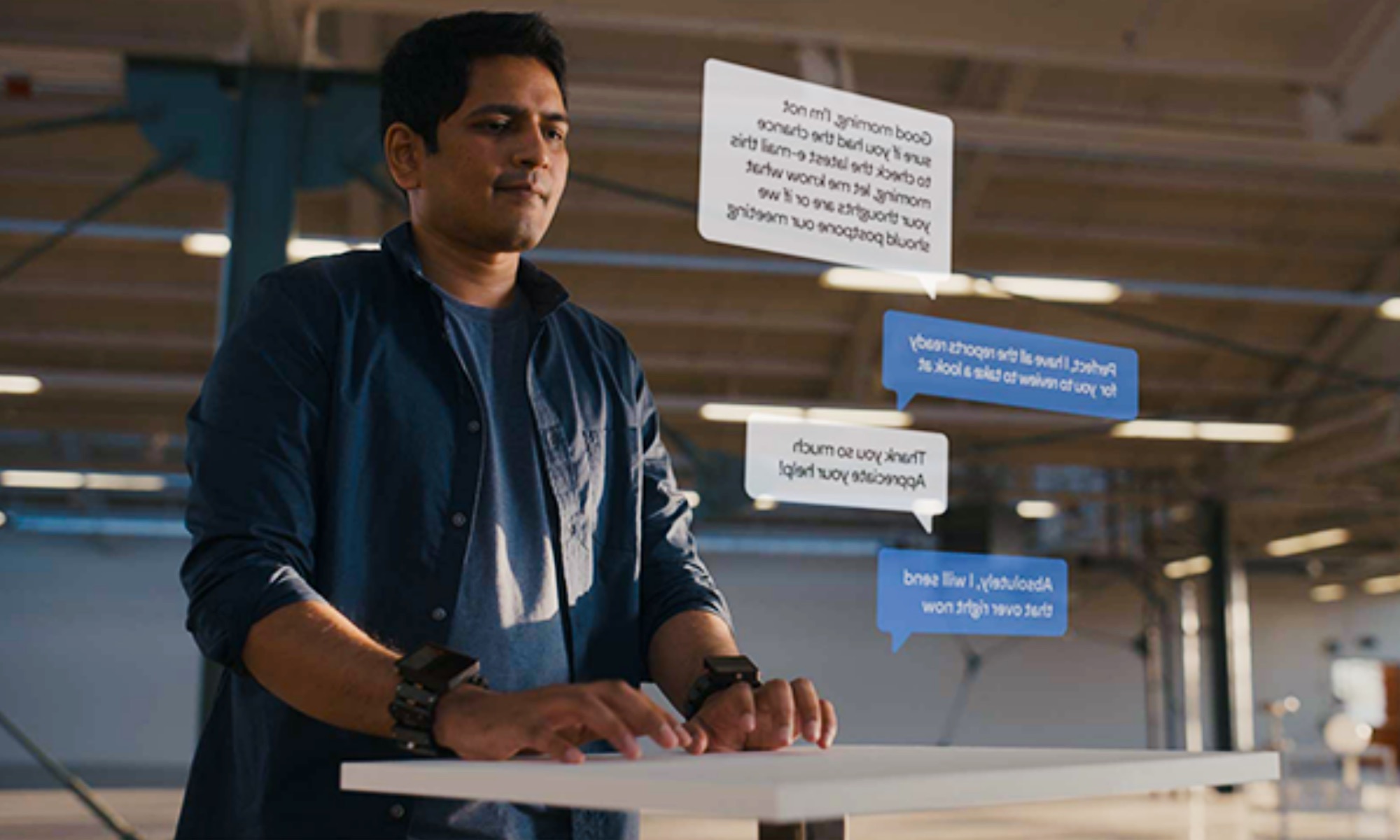

Consider the potential synergy between an AI like Anthropic’s Computer Use agent, which Anthropic says uses computers “the way people do” by “looking at a screen, moving a cursor, clicking buttons, and typing text,” and the eye-tracked input demonstrated by Apple’s Vision Pro headset. One could envision giving voice commands to an AI, which then executes the task, with gestures or eye movements handling any final interactions. Away from specialized headsets, voice-controlled AI could still operate on standard computers. Hume AI, in collaboration with Anthropic, is developing the Empathic Voice Interface 2 (EVI 2), designed to translate voice commands into traditional computer inputs, much like a more sophisticated Alexa.

Despite these impressive developments, practical challenges emerge. How effective would voice commands be for tasks requiring high precision, such as fine-tuning media edits, making minute adjustments in a coding environment, or populating spreadsheet cells? The instruction, “Hey Gemini, input four thousand eight hundred and ninety-five dollars into cell D5 and label it as ‘air travel expense,’” feels far more cumbersome than simply typing it.

Diagram of a self-adjusting virtual keyboard for in-air typing requiring motion sensing gear.

Why Keyboards and Mice Endure for Critical Tasks

The allure of AI-driven automation, as seen in Google’s AI Mode in Search, Project Mariner, and Gemini Live, offers a glimpse into a voice-centric computing future. Yet, this convenience often hits a wall. Imagine the irritation of repeatedly verbalizing commands like, “Move to the dialog box in the top-left corner and left-click on the blue button that says Confirm.” Such interactions become tedious, especially when a simple mouse click or keyboard shortcut would suffice.

Gemini Live on a Google Pixel 9a smartphone screen displaying the Gemini Live AI assistant interface.

Furthermore, the inherent unreliability of AI cannot be ignored. Anthropic itself cautions that Claude Computer Use is “still experimental—at times cumbersome and error-prone.” Similar challenges face OpenAI’s Operator Agent and comparable tools in development. Removing the keyboard and mouse from an AI-enhanced computer is akin to enabling Tesla’s Full Self-Driving (FSD) but removing the steering wheel and pedals, leaving you with limited control if the system falters. You need the ability to take over, especially during unexpected events or when troubleshooting issues where direct manual control is paramount.

Even if an AI, driven primarily by voice, navigates you to the final step of a workflow, such as making a payment, manual intervention is often indispensable. You still need to confirm your identity, whether by entering a password, approving a prompt in an authenticator app, or, with technologies like passkeys, scanning a fingerprint or face or entering a device PIN. No OS developer or app creator, particularly those dealing with identity verification, would cede complete control over such critical tasks to an AI model. The risk is simply too high. Ironically, for an AI like Gemini to learn from your interactions and build up memory, it first needs to monitor your computer usage, which is fundamentally reliant on keyboard and mouse input, bringing us full circle.

User trying out the Apple Vision Pro headset for an immersive mixed reality experience.

Exploring Virtual Inputs: A Glimpse into the Future?

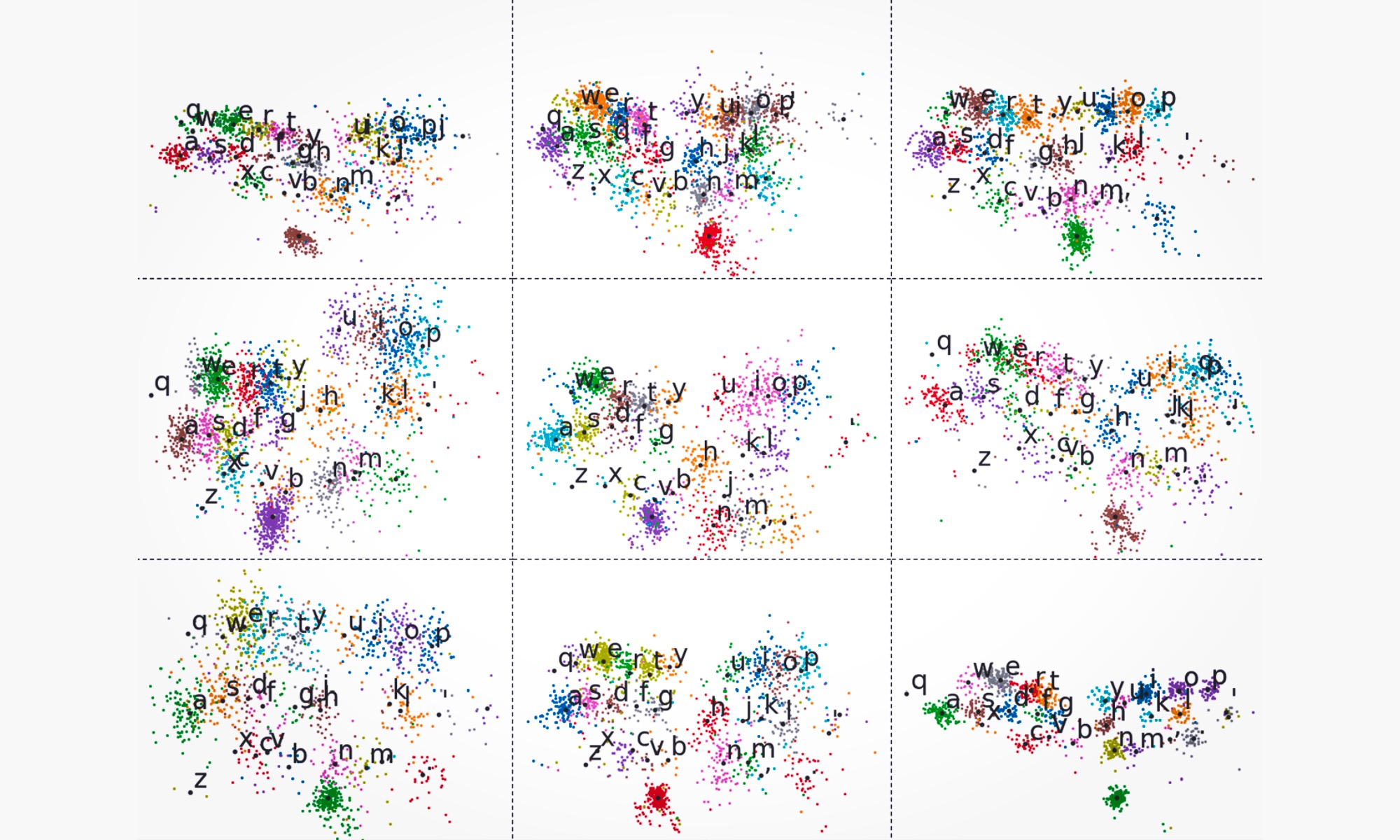

When discussing the replacement of physical keyboards and mice with AI or other advancements, we often find ourselves looking at proxies that lead back to familiar concepts. There’s a wealth of research and material available on virtual mice and keyboards, some dating back over a decade, long before the “transformers” paper catalyzed the current AI boom.

In 2013, DexType launched an app that used the Leap Motion sensor for in-air virtual typing, with no need for a touchscreen or for a laser projector of the kind the Humane AI Pin relies on. While Leap Motion ceased to exist as an independent company in 2019, when it was acquired by Ultrahaptics, the underlying idea persisted. Meta is arguably at the forefront of developing alternative input-output methods for computing, a field known as human-computer interaction (HCI). The company has been exploring wrist-worn wearables that use electromyography (EMG) to translate the electrical motor nerve signals at the wrist into digital commands for controlling devices. This technology aims to support both cursor movement and keyboard input.

Concept image of Meta's neural interface wristband for virtual keyboard input.

Meta suggests these gesture-based inputs could be faster than traditional key presses, as they rely on electrical signals traveling directly from the hand to the computer. “It’s a much faster way to act on the instructions that you already send to your device when you tap to select a song on your phone, click a mouse or type on a keyboard today,” Meta claims.

Person wearing Meta's EMG wristband designed for gesture-based device control.

Repackaging, Not Replacing: The Current State of Play

Despite the futuristic appeal, Meta’s approach faces challenges, even before considering AI integration. The concepts of a cursor and a keyboard, albeit in digital form, remain central. We are essentially shifting from physical to virtual iterations of the same tools. While Meta’s Llama AI models could enhance these interactions, these wearable technologies are still largely confined to research labs. When they do become commercially available, they are unlikely to be inexpensive, at least initially. Even simpler third-party apps like WowMouse, which turns a smartwatch into a gesture controller, are often subscription-based and constrained by operating system limitations.

https://www.youtube.com/watch?v=uf57_Xi8gTo

It’s difficult to imagine abandoning a reliable, inexpensive keyboard for an experimental voice or gesture-based input device that aims to replace the full functionality of keyboard and mouse for daily tasks. Crucially, getting developers to build natural-language input into their applications will be a slow and protracted process. Alternatives like WowMouse offer partial solutions, typically replacing cursor and tap gestures but not a comprehensive keyboard experience. Moreover, granting apps extensive access to keyboard input raises security concerns reminiscent of keyloggers, a risk operating system developers are keen to mitigate.

Ultimately, while the conversational and agentic capabilities of AI models are advancing rapidly, they are still likely to require users to complete critical steps with a mouse click or a few keystrokes. For many tasks, narrating a long series of voice commands remains far more cumbersome than a quick keyboard shortcut or mouse movement. In essence, AI is set to reduce our reliance on physical input devices, but it is unlikely to replace them entirely, especially for the mass market, in the foreseeable future.