Imagine having a personal AI assistant that has read all your important documents and can instantly answer questions like, “What’s the excess on my car insurance?” or “Does my supplementary dental insurance cover inlays?” This isn’t science fiction; a local AI for personal documents can make this a reality. Even board game enthusiasts can benefit by feeding game instructions to the AI and asking, “Where can I place tiles in Qwirkle?” We’ve put this concept to the test on typical home PCs to see how effectively you can create your own intelligent document query system.

Essential Requirements for Your Personal AI Document Assistant

To query your own documents using a completely local artificial intelligence, you primarily need three components: a local AI model, a database containing your documents, and a chatbot interface.

Fortunately, AI tools like Anything LLM and Msty provide all three elements, and both are free of charge. You’ll need to install them on a PC with at least 8GB of RAM and a relatively modern CPU, and you should have 5GB or more of free space on your SSD. For optimal performance, a powerful graphics card from Nvidia or AMD is highly recommended; it is worth checking beforehand which GPU models the tools support.
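
As a quick sanity check before installing, the minimums above can be compared against your own machine. The following is only a minimal sketch: the function names are our own, the free disk space is read with Python's standard library, and the RAM figure has to be entered by hand because the standard library offers no portable way to query it.

```python
import shutil

MIN_RAM_GB = 8        # minimum RAM named in the article
MIN_FREE_DISK_GB = 5  # minimum free SSD space named in the article

def free_disk_gb(path="."):
    """Return the free space on the drive that holds `path`, in GB (10**9 bytes)."""
    return shutil.disk_usage(path).free / 1e9

def meets_minimum(ram_gb, disk_gb):
    """Check the given RAM and free disk space against the article's minimums."""
    return ram_gb >= MIN_RAM_GB and disk_gb >= MIN_FREE_DISK_GB

if __name__ == "__main__":
    my_ram_gb = 16  # assumption: replace with the RAM installed in your PC
    disk = free_disk_gb()
    print(f"Free disk space: {disk:.1f} GB")
    print("Meets minimum requirements:", meets_minimum(my_ram_gb, disk))
```

Passing this check only means the tools should start; it says nothing about how fast embedding or answering will be.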

Upon installing Anything LLM or Msty, you effectively get a chatbot on your computer. Post-installation, these tools load an AI language model, known as a Large Language Model (LLM). The specific AI model that runs within the chatbot will depend on your PC’s performance capabilities. Operating the chatbot is straightforward if you understand the basics, though the extensive setting options within these tools may require more expert knowledge for full customization.

However, even with their standard settings, these chat tools are user-friendly. In addition to the AI model and chatbot, Anything LLM and Msty also offer an embedding model. This component is crucial as it reads your documents and prepares them in a local database, making them accessible to the language model.

Why Bigger is Better: The Impact of AI Model Size

There are AI language models designed to run on less powerful hardware. In the context of local AI, “less powerful” refers to a PC with just 8GB of RAM, an older CPU, and no high-performance Nvidia or AMD graphics card. AI models that can run on such systems typically have 2 to 3 billion parameters (2B or 3B) and are often compressed through quantization, which stores the model’s weights at lower numerical precision. Quantization reduces memory requirements and computational load, but it also tends to degrade the quality of the results. Examples of such small models include Gemma 2 2B and Llama 3.2 3B.
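
To see why parameter count and quantization matter on an 8GB machine, a rough back-of-the-envelope estimate helps. The sketch below assumes that memory for the weights is simply the number of parameters times the bits per weight. It ignores the extra memory needed for the conversation context and the runtime itself, and real quantized formats usually need somewhat more than their nominal bit width, so treat the figures as lower bounds.

```python
def weight_memory_gb(params_billion, bits_per_weight):
    """Approximate memory for the model weights alone, in GB (10**9 bytes)."""
    return params_billion * bits_per_weight / 8

# Compare 16-bit weights with a nominal 4-bit quantization for three model sizes.
for params in (2, 3, 7):
    full = weight_memory_gb(params, 16)
    quantized = weight_memory_gb(params, 4)
    print(f"{params}B parameters: about {full:.1f} GB at 16-bit, "
          f"about {quantized:.1f} GB at 4-bit")
```

Under these assumptions, a 7B model at 16-bit precision would not fit into 8GB of RAM at all, which is why small PCs are limited to small or heavily quantized models.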

Although these language models are comparatively small, they can provide surprisingly good answers to a broad range of questions or generate usable text based on your specifications—all locally and within an acceptable timeframe.

A powerful Nvidia or AMD graphics card significantly speeds up local AI chatbot performance, impacting document embedding and response times.

However, when it comes to the local language model accurately considering your specific documents, these smaller models deliver results that range from “unusable” to “barely acceptable.” The quality of the answers depends on numerous factors, including the type of documents being processed.

In our first tests with local AI and local documents, the results were so poor that we suspected a problem with the document embedding process. Only when we switched to a model with 7 billion parameters did the responses improve significantly. For comparison, we tried the online model ChatGPT 4o on a trial basis. Its responses showed just how good the answers could be, which confirmed that the embedding process itself wasn’t the primary issue.

Indeed, the most significant factor influencing the performance of a local AI for your own documents is the AI model itself. Generally, the larger the model (i.e., the more parameters it has), the better the results. Other components, such as the embedding model, the chatbot tool (Anything LLM or Msty), and the vector database, play a much smaller role in the final output quality.

Understanding Embedding and Retrieval Augmented Generation (RAG)

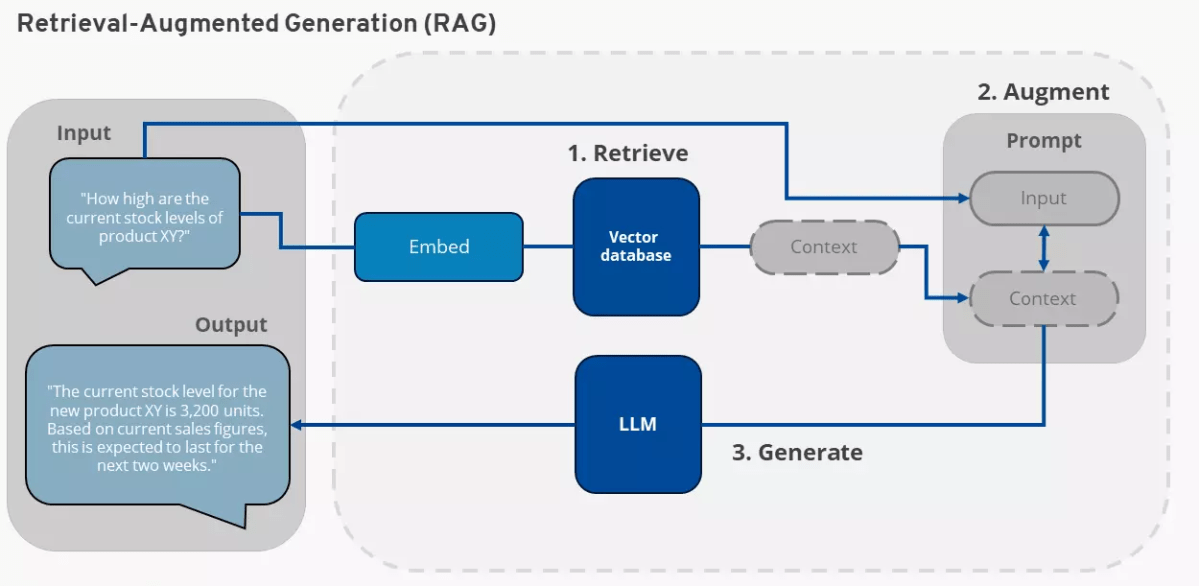

Diagram illustrating the Retrieval Augmented Generation (RAG) process, where an AI language model incorporates information from local documents into its responses.

Your personal data is connected to the AI through embedding and Retrieval Augmented Generation (RAG). In tools like Anything LLM and Msty, the process works as follows: An embedding model first analyzes your local documents. It breaks their content down by semantic meaning and stores that meaning in the form of vectors. Besides documents, the embedding model can also process information from a database or other knowledge sources.

The outcome is always a vector database that contains the essence of your documents or other sources. The structure of this vector database enables the AI language model to efficiently find relevant information within it. This process is fundamentally different from a simple word search or a word index. A word index stores the position of important words in a document, whereas a vector database for RAG stores the underlying statements and meanings contained within a text.
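
The difference from a word index can be illustrated with a toy comparison of vectors. In the sketch below, the three-dimensional vectors and the sample passages are invented by hand purely for illustration; a real embedding model produces vectors with hundreds of dimensions. The point is only that a question about the "excess" can land close to a passage about the "deductible" even though the two share no keyword.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: close to 1.0 means similar direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Invented vectors standing in for what an embedding model would produce.
passages = {
    "The deductible for collision damage is 500 euros.": [0.9, 0.1, 0.0],
    "Tiles must share a colour or a shape with their neighbours.": [0.0, 0.2, 0.9],
}

# Invented vector standing in for "What's the excess on my car insurance?"
query_vector = [0.8, 0.2, 0.1]

best = max(passages, key=lambda p: cosine_similarity(query_vector, passages[p]))
print("Closest passage:", best)
```

A pure keyword search for "excess" would find nothing in either passage, whereas the vector comparison ranks the insurance sentence first.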

This distinction means that a question like, “What is on page 15 of my car insurance document?” usually won’t work well with RAG. The information “page 15” is typically not stored as a core semantic element in the vector database. In most cases, such a query will cause the AI model to “hallucinate”—since it doesn’t know the answer, it invents one.

Creating the vector database, which is the process of embedding your own documents, is the first critical step. The subsequent information retrieval is the second step, known as RAG.

In Retrieval Augmented Generation:

- The user asks the chatbot a question.

- This question is converted into a vector representation and compared with the data in the vector database of the user’s documents (retrieve).

- The relevant results from the vector database are then passed to the chatbot’s AI model along with the original question (augment).

- The AI model generates an answer (generate), which is a synthesis of information from its pre-trained knowledge and the specific information retrieved from the user’s vector database.
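
The retrieve and augment steps listed above can be sketched in a few lines. This is a deliberately simplified illustration, not how Anything LLM or Msty are implemented: the `embed` function is a toy stand-in that merely counts words (a real embedding model captures meaning, not just shared words), the sample chunks are invented, and the generate step is left out because it requires a running language model.

```python
import math
import re
from collections import Counter

def embed(text):
    """Toy stand-in for an embedding model: a vector of lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * \
           math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def build_index(chunks):
    """Embedding step: store each text chunk together with its vector."""
    return [(chunk, embed(chunk)) for chunk in chunks]

def retrieve(question, index, top_k=1):
    """Retrieve step: return the chunks whose vectors are closest to the question."""
    q = embed(question)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:top_k]]

def augment(question, retrieved):
    """Augment step: combine the retrieved chunks and the question into one prompt."""
    context = "\n".join(f"- {c}" for c in retrieved)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

# Invented sample chunks standing in for passages from the user's documents.
chunks = [
    "The excess for collision damage on the car insurance is stated in section 4.",
    "In Qwirkle, a tile must match its neighbours in colour or shape.",
]
index = build_index(chunks)
question = "Where can I place tiles in Qwirkle?"
prompt = augment(question, retrieve(question, index))
print(prompt)  # this prompt is what would be handed to the language model
```

Note that the language model only ever sees the prompt built in the augment step. It has no direct access to the documents or the vector database.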

Tool Showdown: Anything LLM vs. Msty

We tested two popular chatbots for local document analysis: Anything LLM and Msty. Both programs are functionally similar but differ significantly in the speed at which they embed local documents—that is, make them available to the AI. This embedding process is generally time-consuming.

In our tests, Anything LLM took approximately 10 to 15 minutes to embed a PDF file of around 150 pages. Msty, on the other hand, often required three to four times as long for the same task. We tested both tools with their default AI models for embedding: Msty uses “Mixed Bread Embed Large,” while Anything LLM defaults to “All Mini LM L6 v2.”
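
These measurements can be turned into a rough time estimate for larger collections. The sketch below assumes that embedding time grows linearly with the number of pages, which is our simplification and not something we measured. It uses the figures above as its reference (150 pages in 10 to 15 minutes, with Msty taking three to four times as long), and the results will differ on other hardware.

```python
def estimate_minutes(pages, ref_pages=150, ref_minutes=(10, 15), slowdown=(1, 1)):
    """Estimate a (low, high) embedding time in minutes, assuming linear scaling.

    `slowdown` is a (low, high) factor relative to the reference measurement.
    """
    scale = pages / ref_pages
    low = scale * ref_minutes[0] * slowdown[0]
    high = scale * ref_minutes[1] * slowdown[1]
    return low, high

pages = 600  # hypothetical collection size
print("Anything LLM:", estimate_minutes(pages))
print("Msty:", estimate_minutes(pages, slowdown=(3, 4)))
```

For a hypothetical 600-page collection, this gives roughly 40 to 60 minutes with Anything LLM and 2 to 4 hours with Msty on comparable hardware.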

Although Msty requires considerably more time for embedding, it might still be the preferred choice for some users. It offers good user guidance and provides precise source information when citing. We recommend Msty for users with fast computers. If your hardware is less powerful, you should probably try Anything LLM first and assess whether you can achieve satisfactory results with this chatbot. Ultimately, the decisive factor for quality is the AI language model used in the chatbot, and both tools offer a comparable range of AI language models.

It’s worth noting that both Anything LLM and Msty allow you to select alternative embedding models. In some instances, however, this can make the configuration more complex. You can also opt for online embedding models, for example, from OpenAI, but you don’t have to worry about accidentally selecting an online model, as they require an API key for use.

Getting Started with Anything LLM: Simple and Fast

To begin, install the Anything LLM chatbot. Microsoft Defender SmartScreen might display a warning that the installation file is not secure; if you downloaded the installer from the official source, you can typically dismiss the warning by clicking “Run anyway.”

After installation, select an AI language model in Anything LLM. We recommend starting with Gemma 2 2B. You can replace the selected model with a different one at any time (see “Change AI language model” below).

Next, create a “workspace” either through the configuration wizard or later by clicking “New workspace.” This is where you’ll import your documents. Give the workspace a descriptive name and click “Save.” The new workspace will appear in the left sidebar of Anything LLM. Click on the icon to the left of the cogwheel symbol next to your workspace name to import your documents. In the new window, click “Click to upload or drag & drop” and select your files.

After a few seconds, your documents will appear in the list above the button. Click on your document(s) again and select “Move to Workspace,” which will shift them to the right panel. A final click on “Save and Embed” initiates the embedding process. This may take some time, depending on the size of your documents and your PC’s speed.

Tip: Don’t attempt to process an entire archive, like 30 years of PCWorld PDFs, right away. Start with a simple text document to gauge how long your PC takes. This will help you quickly assess whether a more extensive scan is worthwhile.

Once the process is complete, close the window and ask the chatbot your first question. For the chatbot to consider your documents, you must select the workspace you created on the left and then enter your question in the main window at the bottom under “Send a message.”

Change the AI language model: If you wish to select a different language model in Anything LLM, click on the key symbol (“Open Settings”) at the bottom left, then click on “LLM.” Under “LLM provider,” choose one of the suggested AI models. Newer models like those from Deepseek are also offered. Clicking “Import model from Ollama or Hugging Face” gives you access to a vast array of current, free AI models. Downloading one of these models can take time, as they are often several gigabytes in size, and download server speeds can vary. If you prefer to use an online AI model, select it from the drop-down menu under “LLM provider.”

Tips for using Anything LLM: Some menus in Anything LLM can be a bit tricky. Changes to settings usually need to be confirmed by clicking “Save.” However, this button can easily scroll out of view on longer configuration pages, such as when changing AI models under “Open Settings > LLM.” If you forget to click it, your settings won’t be applied, so always look for a “Save” button after making configuration changes. Additionally, the user interface in Anything LLM can be partially switched to another language under “Open Settings > Customize > Display Language.”

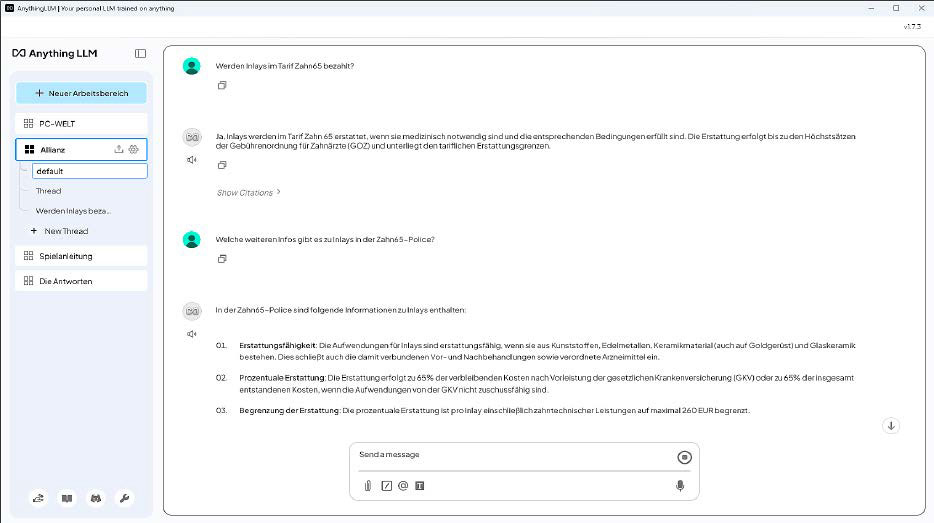

Screenshot showing perfect AI-generated responses from ChatGPT 4o regarding a supplementary dental insurance contract, demonstrating the potential of advanced language models.

Msty: A Versatile Chatbot for Powerful Hardware

The Msty chatbot offers somewhat more flexibility than Anything LLM in terms of its potential uses. It can also function as a local AI chatbot without necessarily integrating your personal files. A key feature of Msty is its ability to load and use several AI models simultaneously. Installation and configuration are broadly similar to Anything LLM.

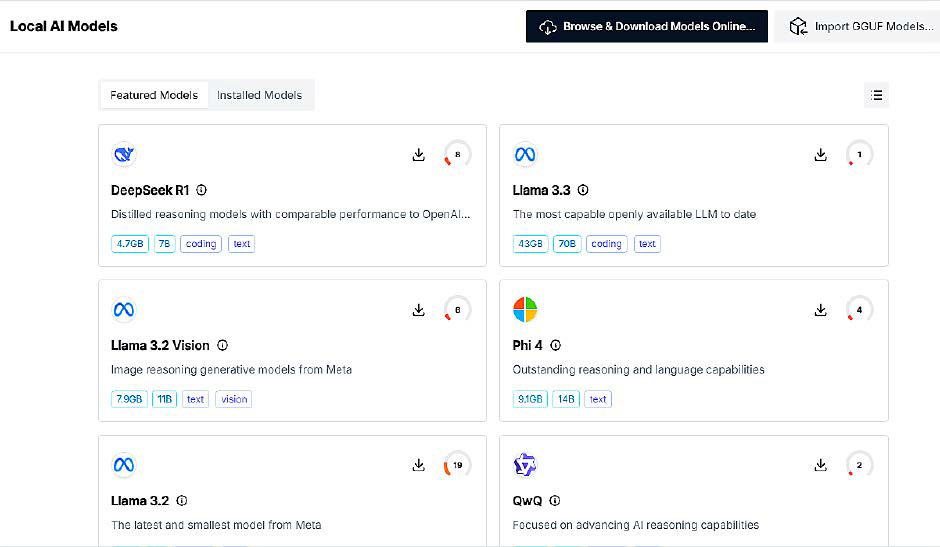

The Msty chatbot interface displaying easy selection of local AI language models and their hardware compatibility scores, with values below 75% being potentially problematic.

What Anything LLM calls a “Workspace,” Msty refers to as a “Knowledge Stack.” This is configured under the menu item of the same name, located at the bottom left of the interface. Once you have created a new knowledge stack and selected your documents, you initiate the embedding process by clicking “Compose.” This embedding can take a significant amount of time to complete. Back in the main window of Msty, you enter your question in the input field at the bottom. For the chatbot to consider your local documents, you must click on the knowledge stack symbol below the input field and place a checkmark next to the desired knowledge stack.

Troubleshooting: When Your AI Fails to Deliver

If the answers regarding your documents are unsatisfactory, the first step we recommend is to select a more powerful AI model. For example, if you started with Gemma 2 2B (2 billion parameters), try upgrading to Gemma 2 9B, or load Llama 3.1 with 8 billion parameters.

If this doesn’t bring sufficient improvement, or if your PC takes too long to generate responses, you might consider switching to an online language model. An online model does not directly “see” your local files or the vector database created from them. However, it does receive the passages retrieved from your vector database that are relevant to the question asked, so those excerpts leave your PC. With Anything LLM, you can make this change on a per-workspace basis. To do this, click on the cogwheel icon for a workspace and, under “Chat settings > Workspace LLM provider,” select a provider like “Open AI” to use a model such as ChatGPT.

Using such online models typically requires a paid API key from the provider (e.g., OpenAI). For instance, an OpenAI API key might cost around $12 to get started, and the number of responses you get for that cost will depend on the specific language model used. You can find an overview of pricing on the provider’s website, such as openai.com/api/pricing.
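
How far a given budget stretches can be estimated once you know the provider's token prices. The sketch below is only an arithmetic illustration: the prices and token counts in the example call are placeholders we made up, not real OpenAI prices, so look up the current values on the provider's pricing page before relying on the result.

```python
import math

def responses_for_budget(budget_usd, price_in_per_million, price_out_per_million,
                         tokens_in, tokens_out):
    """Return how many responses fit into the budget.

    Prices are in USD per one million tokens; token counts are per response.
    """
    cost_per_response_millionths = (tokens_in * price_in_per_million
                                    + tokens_out * price_out_per_million)
    return math.floor(budget_usd * 1_000_000 / cost_per_response_millionths)

# Placeholder values for illustration only, NOT real prices.
count = responses_for_budget(
    budget_usd=12,
    price_in_per_million=1.0,   # hypothetical input price
    price_out_per_million=2.0,  # hypothetical output price
    tokens_in=3000,             # question plus retrieved document passages
    tokens_out=500,             # length of the generated answer
)
print("Responses within budget:", count)
```

Because the retrieved document passages are sent along with every question, the input tokens usually dominate the cost of a RAG query.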

If switching to an online language model is not an option due to data privacy concerns, the troubleshooting guide provided by Anything LLM can be very helpful. It explains the fundamental possibilities of embedding and RAG and also highlights the smaller configuration adjustments you can make to potentially achieve better answers from your local setup.

Conclusion: Unlocking Your Personal Document Insights

Setting up a local AI for personal documents is an increasingly feasible endeavor, allowing you to create a powerful, private search engine for your own information. Tools like Anything LLM and Msty provide the necessary framework, but the quality of your AI assistant hinges significantly on the chosen AI model and your PC’s hardware capabilities. Larger models generally yield more accurate and nuanced results, particularly when querying specific details within your documents, though they demand more computational power.

Understanding the core concepts of embedding and Retrieval Augmented Generation (RAG) is key to appreciating how these systems work and for troubleshooting any issues. While the setup might require some initial effort and experimentation, the ability to converse with your documents and retrieve information instantly is a compelling prospect. We encourage you to explore these tools, start with simple documents to get a feel for the process, and discover the potential of having a personalized AI at your fingertips.

References

- OpenAI API Pricing: https://openai.com/api/pricing

- Anything LLM troubleshooting guide: available on the Anything LLM platform for users seeking to optimize their local AI setup.